Silicon Valley Thinks TSMC is Braking the AI Boom

Transcript

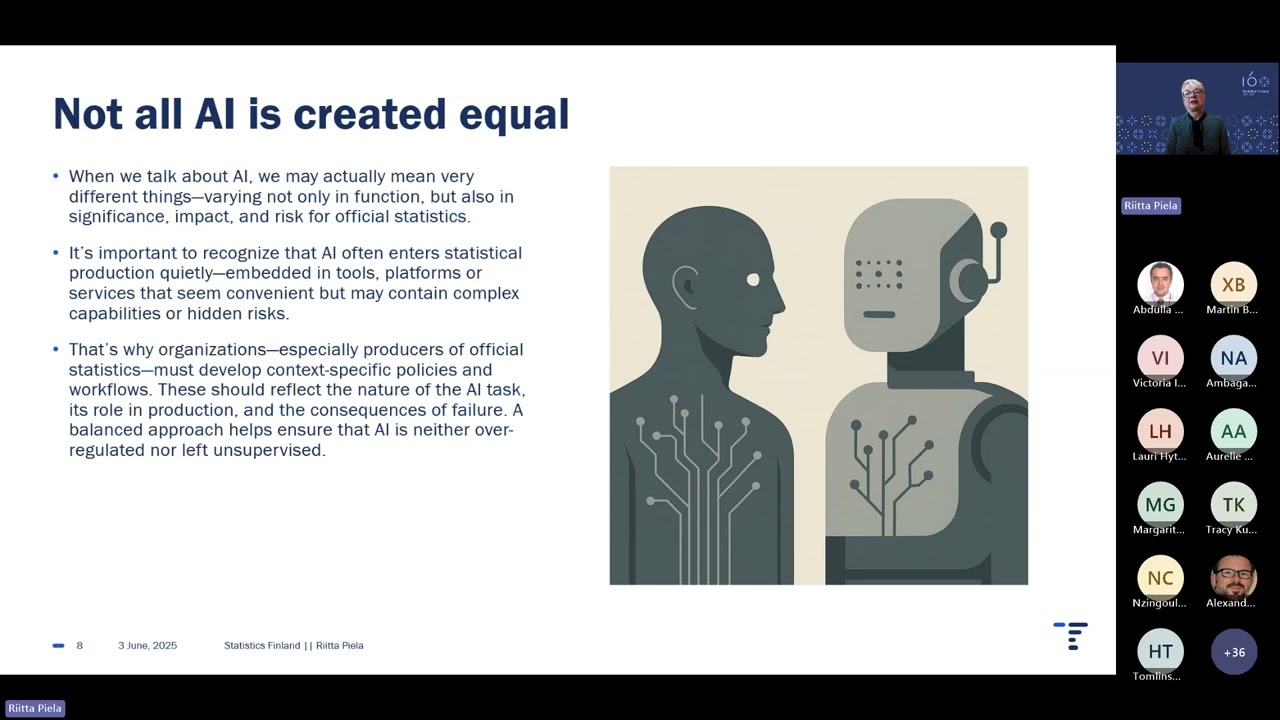

Ben Thompson of Stratechery has a recent

piece out titled TSMC risk in which he

calls out TSMC's conservatism as

costing the American hyperscalers

hundreds of billions in revenue. Before

we continue, I want to disclose that I

work with Ben. The Asianometry newsletter

runs on his platform Passport and I am

friendly with him. I'm not trying to

flame him, but I'm hearing many similar

views in the Silicon Valley Borg that

TSMC is the brake or limiter on the AI

boom as if they're the reason why we

don't have AGI yet. Because they didn't

and still don't believe.

As if we can ever say that a company that

spent 41 billion on capital expenditure

in 2025 with another 53 to 56 billion in

2026 planned is sitting on its hands

doing nothing. Now TSMC is a trillion

dollar company. They don't need some

random YouTuber defending them. Though I

reckon people will still accuse me of

Taiwan bias in the comments. And to be

clear, I largely agree with Ben's final

message. TSMC having 90% share of the AI

chip market looks pretty unhealthy. That

should go down and it will. Samsung

seems to be doing well so far. The point

I want to make concerns the nature of

hardware. What Ben and others in Silicon

Valley are diagnosing as, quote, shortages

signify TSMC's failure, end quote, is

really, quote, semiconductors are hard and

their supply chains are long, end quote.

Having more foundry competition wouldn't

have averted this compute shortage. The

cold hard reality is that shortages are

a fact of life in semiconductors as are

horrific gluts. I was supposed to be

working on a video about bananas, but I

had to do this first. In today's video,

a few scattered thoughts on TSMC taking

away the AI punch bowl. I want to talk

about the beer game. No, it has nothing

to do with drinking beer, nor does it

promote drinking. Because this is Asianometry,

I will rename it to the boba game. It is

a game developed in the 1950s by the

famed MIT professor Jay Forrester who

also did pioneering work on core

memories to demonstrate the concepts of

system dynamics. I was introduced to it

by a TSMC manager and friend. In the

game, people operate in a marketplace

for Boba. The game's players cosplay as

boba sellers in one of four divisions:

retailer, wholesaler, distributor, and

the factory. The players must work

together to minimize costs and maximize

revenue. A deck of cards represents

weekly customer demand for boba.

Retailers supply boba to the customers

pulling out of their inventory.

Retailers want to keep inventory levels

as low as possible because it costs

money to hold inventory, but also want

to avoid stockouts because that's lost

revenue. These incur a penalty in

negative dollars. The retailer refills

low inventories with orders from the

wholesaler. The wholesaler in turn must

go to the distributor to reload, who

then in turn purchases from the factory.

At each step, we have time delays for

order processing, shipping, or

production. At the start of the Boba

game, customer demand is steady. But as

the game progresses, the cards start

showing unannounced spikes in demand.

This simple boba supply chain has to

scramble to adjust, creating delays and

shortages or overreactions and

overproduction and everyone thinking someone

else other than themselves messed up.
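
To make the amplification concrete, here is a minimal sketch of the boba game in Python. It is not Forrester's original rules: the function name, the four-tier default, the two-week lead time, and the naive "next week looks like this week" forecast are all assumptions chosen to keep the example short. Each tier tracks an inventory position (on hand plus on order) and orders up to a target of three weeks of cover.

```python
def simulate_boba_chain(customer_demand, tiers=4, lead_time=2):
    """Return one weekly order series per tier, retailer first.

    Hypothetical simplification: every tier uses the same naive
    order-up-to policy and its supplier always accepts the order.
    """
    cover = lead_time + 1              # weeks of demand each tier wants covered
    incoming = list(customer_demand)   # demand seen by the current tier
    all_orders = []
    for _ in range(tiers):
        position = cover * incoming[0]  # start in steady state
        orders = []
        for demand in incoming:
            position -= demand                  # ship downstream (backlog allowed)
            target = cover * demand             # naive forecast: next week = this week
            order = max(0, target - position)   # cannot send stock back upstream
            position += order
            orders.append(order)
        all_orders.append(orders)
        incoming = orders  # this tier's orders are the next tier's demand
    return all_orders


# Steady demand of 4 per week, then an unannounced step up to 8.
demand = [4] * 5 + [8] * 15
orders = simulate_boba_chain(demand)
peaks = [max(series) for series in orders]

for name, peak in zip(["retailer", "wholesaler", "distributor", "factory"], peaks):
    print(f"{name:12s} peak weekly order: {peak}")
```

Customer demand only doubles, from 4 to 8, but the peak weekly order in this sketch grows from 20 at the retailer to 68, 260, and 1028 further up the chain. Real planners are less naive than this policy, so treat the numbers as illustrative only.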

What we are flippantly labeling as TSMC,

what we really mean is the AI supply chain.

And that supply chain is as complicated

as you can possibly imagine. Like an

iceberg, it looks big enough on the

surface of the water, but goes way far

deeper underneath. TSMC has thousands of

suppliers in two categories. Equipment

like the famed ASML lithography tools

and materials like photoresist, silicon

wafers, acids, etch gases and so on. These

are not generalized tools and materials.

They are not fungible like AWS compute

units. Just within the bland term of

deposition, we have wild variations

between tools like low pressure chemical

vapor deposition, molecular beam

epitaxy, atomic layer deposition and so

on. These are not interchangeable and

each needs its own multi-million dollar

tool. And each tool category/niche

has maybe three major equipment players

like maybe Applied, Lam, TEL and so on. I

also have to mention the

non-semiconductor stuff too. Power, water,

land and labor. Both Taiwan and the

United States have issues providing all

of this. Time is needed to build out the

infrastructure to provide it. And then

there are the memory guys. You cannot

ship an AI system without memory. DRAM

and NAND. Nvidia's AI chips use a

special form of DRAM called high

bandwidth memory and they use quite a

lot of it. The memory industry is just

as consolidated as the logic industry

with the major players being Samsung,

SK Hynix and Micron. There are also

the Chinese memory makers, but they're

not being used for AI chips for the West.

Because they are so deep down, the chip

guys are last to know when the party is

getting started. But they are first to get

batoned in the face when the police shut

things down. Batoned, I mean bullwhipped.

The bullwhip effect is an effect of the

boba game that says that a demand signal

tends to amplify as it travels up the

various levels of the supply chain. From

1961 to 2006, electronics consumption in

the United States grew positively but

with wild volatility swings between 0 and

20%. But for the semiconductor makers,

that translates to swings anywhere from

-20% to 40%. And for the equipment

makers, it is amplified even more, plus

or minus 60%. The whip hits particularly

hard in the semiconductor industry

because of the industry's long lead

times. It takes 4.5 months to fabricate

and package a chip. It takes 18 months

to 2 years to build a fab, meaning from

shovels down to producing chips. And it

takes 12 to 18 months to produce and

install something like an EUV machine

into the fab. Another 6 months before

that machine actually starts patterning

wafers.
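
As a rough worked example of how these delays stack, the sketch below adds up the lead times quoted above for a chip that depends on a newly ordered EUV tool. The constant and function names are made up for illustration, and the assumption that the three steps run strictly one after another is mine, not something stated in the video.

```python
# Lead times in months as (low, high), taken from the figures quoted above.
EUV_PRODUCE_AND_INSTALL = (12, 18)
EUV_RAMP_TO_PATTERNING = (6, 6)
FABRICATE_AND_PACKAGE = (4.5, 4.5)


def add_ranges(*ranges):
    """Sum (low, high) ranges, assuming the steps happen strictly in sequence."""
    return (sum(r[0] for r in ranges), sum(r[1] for r in ranges))


# Months from placing the tool order to the first packaged chips.
tool_order_to_chips = add_ranges(
    EUV_PRODUCE_AND_INSTALL,
    EUV_RAMP_TO_PATTERNING,
    FABRICATE_AND_PACKAGE,
)
print(tool_order_to_chips)  # (22.5, 28.5)
```

Under that assumption, a capacity decision made today shows up as finished chips roughly two years later, which is the forecasting window the chip makers have to guess across.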

Long lead times mean having to make very

long demand forecasts which leads to

extreme volatility swings during up and

downturns even if those up or downturns

are relatively small. ASML just reported

2025 earnings and we see the bullwhip

in full effect. TSMC raised capital

expenditure 35% but ASML announced 13.2

billion euros of net new bookings. Analysts

had expected just 6.32 billion. This is

because ASML collected orders not just

from TSMC but also Samsung, Intel and

the memory guys. When it rains it pours,

right? Again, this is why I fear that

another AI foundry would not mean our

compute shortage is solved because

ultimately when those foundries start

scaling their capacity, they all go to

the same suppliers. Those suppliers then

go to their suppliers and everyone gets

slammed. We literally just went through

all of this a few years ago during the

COVID PC and remote working boom. Did we

forget? Remember when the New York

Times, Wall Street Journal, and the Blog

Boys ran headlines about how the

American economy was grinding to a halt

because they couldn't get these little

trailing edge microprocessors, and

that the car factories were all shutting

down? Asianometry was around at this time.

I remember how tortured the supply chain

was. The car makers canceled orders during

the first lockdowns, but then the

economy came back to life over the

summer and everyone needed their chips

back. TSMC was trying to discern between

double-booked orders and real demand,

which is not an uncommon experience for

them. Customers lie about their own

demand all the time, or at least we can

say that they are eternally optimistic.

TSMC tried to respond in 2022. The

Taiwanese giant poured $36 billion into

capital expenditure. They went to their

suppliers and pushed like no tomorrow.

Marc Hijink's excellent 2024 book Focus

details an extremely tense interaction

with the TSMC R&D SVP who is now at

Intel by the way. They even announced

new trailing edge fabs. For instance,

the original plans for the fab in

Kaohsiung, as announced in late November

2022, would have it run a 28 nanometer

process node, a trailing edge process

node. How weird is that?

Well, it turned out those customers

really were double booking orders and

artificially inflating demand. When the

macro environment turned in 2022, the

automotive, smartphone, and PC chips

that were so hot during the COVID era

fell out of vogue and customers started

cutting orders. By the end of 2022,

Silicon Valley people though had already

moved on to the next shiny thingy,

ChatGPT. People losing their minds over bots

writing poems and code. And the

hyperscalers started to figure that they

needed a bigger boat/data center.

Meanwhile, deeper down in the supply

chain, TSMC and the rest of the

semiconductor industry were getting

bullwhipped by the COVID hangover.

Utilization at TSMC's multi-billion

dollar N7 fabs crashed. SemiAnalysis

wrote in April 2023:

"Now, SemiAnalysis data indicates that

the 7 nanometer utilization rates were

below 70% in Q1. Furthermore, Q2 gets

even worse with 7 nanometer utilization

rates falling to below 60%. This is

primarily due to weakness in both

smartphones and PCs, but there is a

broader weakness in most segments."

A fab's break-even utilization rates are

about 60 to 70%. So those N7 TYON fabs

were taking financial losses potentially

on the order of hundreds of millions,

maybe even billions. The financial

burdens of low utilization are another

reason why I'm skeptical another AI

foundry could have rushed into the AI

chip fray to save the day. Having slack

advanced node capacity means taking

massive depreciation losses. Having such

pricey non-performing 7 nanometer fabs

could have been crippling. The TSMC

stock in 2022 and 2023 looked pretty

precarious. But TSMC pivoted to AI and

survived. It's an indication that their

product diversification strategy works.
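
On the utilization point above, a quick sketch shows why running below break-even hurts so much: most of a fab's cost is fixed, so every wafer not made is margin that never arrives to cover it. All dollar figures and names below are hypothetical, picked only so the break-even lands at 65%, inside the 60 to 70% range mentioned earlier. They are not TSMC numbers.

```python
def break_even_utilization(fixed_cost, margin_per_wafer, capacity_wafers):
    """Utilization at which wafer margin exactly covers the fixed cost."""
    return fixed_cost / (margin_per_wafer * capacity_wafers)


def quarterly_profit(wafers_out, fixed_cost, margin_per_wafer):
    """Profit for a quarter given wafers shipped; fixed cost is paid regardless."""
    return wafers_out * margin_per_wafer - fixed_cost


# Hypothetical fab, per quarter (illustrative values only).
FIXED_COST = 650_000_000      # depreciation and other fixed costs, in dollars
MARGIN_PER_WAFER = 10_000     # revenue minus variable cost per wafer, in dollars
CAPACITY = 100_000            # wafers per quarter at 100% utilization

break_even = break_even_utilization(FIXED_COST, MARGIN_PER_WAFER, CAPACITY)
loss_at_55 = quarterly_profit(55_000, FIXED_COST, MARGIN_PER_WAFER)

print(f"break-even utilization: {break_even:.0%}")
print(f"profit at 55% utilization: {loss_at_55:,} dollars")
```

In this made-up case, slipping ten points under break-even costs 100 million dollars in a single quarter, which is the kind of hole a foundry holding idle advanced capacity would be digging.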

There was another semiconductor company

that did not do so well during this

time. Intel. Between 2021 and 2023, they

hired 20,000 people, announced billions

of dollars of fabs and expansions around

the world, and set forth an

ultra-aggressive process node rollout

schedule. Then the COVID PC and remote

working boom abruptly ended. And then

the hyperscalers started buying GPUs

instead of CPUs. As a result, tens of

thousands of layoffs, executive turmoil

with CEO Pat Gelsinger being forced

out, and Intel took themselves

competitively out of the market for what

seems like years. A situation that

eventually required Japan-style state

intervention and the mustering of market

players to try and reverse the slide. We

shall see if such efforts do better than

Japan's efforts to save Elpida.

Ben points to TSMC's stagnant capital

expenditure in 2023 and 2024

and makes a gentle criticism: "ChatGPT was

released in November 2022 and that

kicked off a massive increase in capex

amongst the hyperscalers in particular,

but it sure seems like TSMC didn't buy

the hype. That lack of increased

investment earlier this decade is why

there is a shortage today and is why

TSMC has been a de facto brake on the AI

buildout/bubble."

It is true that the hyperscalers started

growing their capex in late 2022.

But remember the boba game again. When

does that filter down to TSMC and the

rest of the industry? And when could

they have known? They certainly didn't

know in 2023. In the April 2023 earnings

call, which took place some five months

after ChatGPT's release, C.C. Wei says he

noticed ChatGPT's growth, but repeats

multiple times that he has no idea what

AI's impact on TSMC will be.

He also mentioned getting what seems to

be the first orders from presumably

Nvidia for more CoWoS capacity. Quote,

"Just recently in these two years, I

received a customer's phone call

requesting a big increase on the

back-end capacity, especially in the

CoWoS. We are still evaluating that."

End quote. At the next earnings call in

July 2023, he says that AI accelerators

were about 6% of TSMC revenue and

projected to grow to, quote, low teens

percent, end quote, over the next few

years. Wall Street was looking for such

numbers. So I presume they got those

projections straight from customers.

TSMC also projected their overall 2023

revenue to decline 10%. Citing the

revenue declines due to macro, post-COVID

and China issues to be bigger than AI.

Of course this changed by the end of the

year as AI surged so much. So nobody

knew or thought to scale in early 2023.

But what about 2024?

Well, that year had all the technical

issues. I recall news in mid 2024 of

TSMC struggling with CoWoS capacity

bottlenecks and yield problems,

including one design issue that caused

cracks in the Nvidia chip's packaging.

Nvidia stock dropped when the news came

out and everyone thought that we were so

over. A former TSMC packaging engineer

told me of frantic late night experiments

to figure out the right tweaks to fix

the problem. And Nvidia going so hard as

to tell them to take every tweak option

and run them on live wafers, the

semiconductor version of pushing direct

to prod. I also recall news in late 2024

noting how the vendors in charge of

making the server racks for Nvidia's

Blackwell servers struggled with

overheating, liquid cooling leaks,

software bugs, and connectivity issues.

Such technical difficulties delayed

server deployment until early to mid

2025,

creating a weird situation for several

months where TSMC was pumping out chips

that just went into storage. So that

gated things because you don't scale

until you first fix the technical

problems. I also want to add that in

2024, TSMC and the rest of the chip

industry did not know if those buying AI

chips would make money on them. Recall

those famous Sequoia Capital articles, AI's

$200 billion question and then the $600

billion question. Those came out in

September 2023 and June 2024,

respectively. I don't think any sensible

foundry would have then committed

billions to new fabs.

So I argue that the optimal time for

TSMC and the rest of the semiconductor

industry to really scale capex was 2025

whereupon the boba game kicked into

effect. Some things just take time.

Ben writes that it is chips not power

behind the shortage of compute capacity

that the hyperscalers are complaining

about. He points to comments from C.C. Wei

as support. C.C. said, "Talking about to

build a lot of AI data center all over

the world, I use one of my customers'

customers' answer. I asked the same

question. So they say that they work on

the power supply 5 to 6 years ago. So

today their message to me is silicon

from TSMC is a bottleneck and asked me

not to pay attention to all others

because they have to solve the silicon

bottleneck first." I don't interpret

those comments the same way Ben does.

TSMC is not a power company. I read that

as basically meaning quote TSMC should

be focusing on what they can do and they

make chips not power. End quote. Also,

C.C. Wei doesn't speak as carefully as

Morris does, but there is no way he is

going to say on an earnings call, "Yeah,

dude, they can't get the power

connection, so they don't need TSMC

chips right now." And if this customer's

customer is making electricity

parameters based on assumptions from

five to six years ago, then they

definitely got a power shortage because

AI data centers suck way more power than

a CPU-centric data center specced out in

2021.

And if you want to hear words from a

TSMC executive, I point you to a

deleted LinkedIn post from TSMC

Arizona's CFO. I don't have a screenshot

because she scrubbed that fast, but the

URL reads, "AI's real bottleneck isn't

chips, it's power."

In the end, I think the power shortages

are real and way more serious than the

silicon ones. Elon is bringing in

truck-mounted gas turbines to his data

centers, and new gas turbines aren't

available until 2029.

At least the semiconductor people are

trying. SemiAnalysis said in a report

that the various legacy gas turbine

makers will not greatly expand their

factory footprints. They seem a bit

grumpy that the turbine boys aren't AGI

pilled.

I want to close with a thesis that's

been percolating in me for a while. The

gap between the hardware and software

worlds is wider than ever before. I

reckon that it's been a good 30 years

since Silicon Valley was actually about

making silicon and there are still many

Silicon people living in Santa Clara,

Sunnyvale, Palo Alto, but they tend to

be older, retired even. I often go to

the Bay Area to talk to people, software

people and AI people on occasion, and I

ask them how much they know about how

their hardware is made. For almost all

of them, even the smartest in their

domain, they know virtually nothing. It

is a hard silicon line. I feel like both

sides know so little about the other. My

message to Silicon Valley is this. I'm

sorry that Claude Code is a little slow

for you right now, but the chips are

coming. People are torturing themselves

to make them, put them into racks, and

start up the data centers. Let's

exercise a little patience. All right,

everyone. That's it for tonight. Thanks

for watching. Subscribe to the channel.

Sign up for the Patreon. And I'll see

you guys next time.

Interactive Summary

The video discusses the claim, common in Silicon Valley, that TSMC's conservatism is the bottleneck on AI development, and argues that the real cause is the length and complexity of the semiconductor supply chain. It uses the "Boba Game" analogy to explain how demand signals amplify up the supply chain, leading to shortages and overproduction. The speaker argues that semiconductor manufacturing is inherently complex and prone to cyclical shortages and gluts, and that simply adding more foundries would not solve the problem. The video also touches upon the critical role of memory chips, the long lead times in semiconductor manufacturing, and the challenges faced by companies like Intel. It highlights that power supply might be a more significant bottleneck for AI data centers than chip availability, and concludes by emphasizing the widening gap between hardware and software expertise in Silicon Valley.
