The 5 Levels of AI Coding (Why Most of You Won't Make It Past Level 2)


Transcript


90% of Claude Code was written by Claude Code. Codex is releasing features entirely written by Codex. And yet most developers using AI empirically get slower, at least at first. The gap between these two facts is where the future of software lives.

Imagine hearing this at work: code must not be written by humans. Code must not even be reviewed by humans. Those are the first two principles of a real production software team, StrongDM, and their software factory. They're just three engineers. No one writes code. No one reviews code. The system is a set of AI agents orchestrated by markdown specification files. It is designed to take a specification, build the software, test the software against real behavioral scenarios, and ship it independently. All the humans do is write the specs and evaluate the outcomes. The machines do absolutely everything in between.

Meanwhile, and yes, it's true, over at Anthropic 90% of Claude Code's codebase was written by Claude Code itself. Boris Cherny, who leads the Claude Code project at Anthropic, hasn't personally written code in months. And Anthropic's leadership now estimates that functionally 100% of the code produced at the company is AI-generated. And yet at the same time, in the same industry, with all of us here on the same planet, a rigorous 2025 randomized controlled trial by METR found that experienced open-source developers using AI tools took 19% longer to complete tasks than developers working without them. There is a mystery here. They're not going faster; they're going slower.

And here's the part that should really

unsettle you. Those developers are bad

at estimation. They believed AI had made

them 24% faster. They were wrong not

just about the direction but about the

magnitude of the change. Three teams are

running lights out software factories.

The rest of the industry is getting

measurably slower. Just a few teams

around tech are running truly lights out

software factories. The rest of the

industry tends to get measurably slower

while convincing themselves and everyone

around them with press releases that

they're speeding up. The distance

between these two realities is the most

important gap in tech right now and

almost nobody is talking honestly about

it and what it takes to cross it. That

is what this video is about. Dan

Shapiro, the CEO over at Glow Forge and

the veteran of multiple companies built

on the boundary between software and

physical products, just published a

framework earlier this year in 2026 that

maps where the industry stands. He calls

it the five levels of vibe coding. And

the name is deliberately informal

because the underlying reality is what

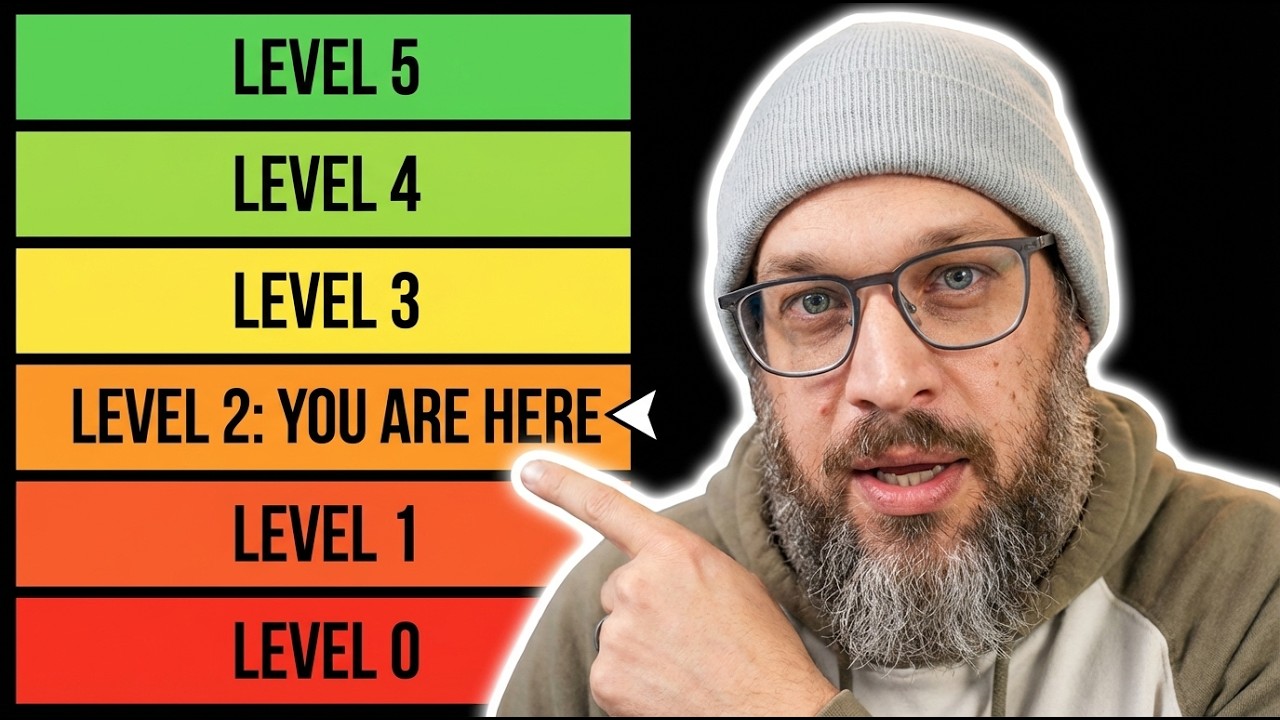

matters. Level zero is what he calls

spicy autocomplete. You type the code,

the AI suggests the next line. You

accept or reject. This is GitHub copilot

in its original format. Just a faster

tab key. The human is really writing the

software here. And the AI is just

reducing the keystrokes and the effort

your fingers have. Level one is coding

intern. You hand the AI a discrete well

scoped task. You write the function. You

build the component. You refactor the

module. That's the task you give the AI.

You hand the AI a discrete and well

scoped task like write this function or

build this component or refactor this

module. You then review as the human

everything that comes back. The AI

handles the tasks. The human handles the

architecture, the judgment and the

integration. Do you see the pattern

here? Do you see how the human is

stepping back more and more through

these levels? Let's keep going. Level

two is the junior developer. The AI

handles multifile changes. It can

navigate a codebase. It can understand

dependencies. It can build features that

span modules. You're reviewing more

complicated output, but you as a human

are still reading all of the code.

Shapiro estimates that 90% of developers who say they are AI-native are operating at this level, and from what I've seen, I think he's right. Software developers who operate here think they're farther along than they are.

Let's move on. Level three: the developer is now the manager. This is where the relationship starts to flip, and where it gets interesting. You're no longer writing code and having the AI help. You're directing the AI and reviewing what it produces. Your day is deciding what to read, what to approve, and what to reject, but at the feature level, at the PR level. The model is doing the implementation and submitting PRs for your review; you have to supply the judgment. Almost everybody tops out here right now. Most developers, Shapiro says, hit that ceiling at level three because they struggle with the psychological difficulty of letting go of the code.

But there are more levels, and this is where it gets spicy and exciting. Level four is the developer as product manager. You write a specification, you leave, and you come back hours later to check whether the tests pass. You're not really reading the code anymore; you're evaluating the outcomes. The code is a black box. You care whether it works, but because you have written your evals so completely, you don't have to worry much about how it's written if it passes. This requires trust both in the system and in your ability to write specs, and almost nobody has developed that quality of spec writing yet.

Level five is the dark factory. This is effectively a black box that turns specs into software, and it is where the industry is going. No human writes the code. No human even reviews the code. The factory runs autonomously with the lights off: specification goes in, working software comes out. And Shapiro is correct: almost nobody on the planet operates at this level.

The rest of the industry is mostly between level one and level three, and most of them are treating AI kind of like a junior developer. I like this framework because it gives us honest language for a conversation that's been drowning in hype. When a vendor tells you their tool writes code for you, they often mean level one. When a startup says they're doing agentic software development, they often mean level two or three. But when StrongDM says their code must not be written by humans, they really do mean level five, the dark factory, and they actually operate there. The gap between marketing language and operating reality is enormous, and closing it, getting to what is actually going on on the ground, requires changes that go way beyond picking a better AI tool. So many people look at this problem and think it's a tool problem. It's not a tool problem.

It's a people problem.

So what does level five software development actually look like? I think StrongDM's software factory is the most thoroughly documented example of level five in production. Simon Willison, one of the most careful and credible observers in the developer tooling space, calls the StrongDM software factory, quote, "the most ambitious form of AI-assisted software development that I've seen yet." The details are worth digging into because they reveal what it looks like to run a dark factory for software on today's agents. As we have this discussion, keep in mind that for most of us listening, we get to time travel. We are seeing how a bold vision for the future can be translated into reality with today's agents and today's agent harnesses. It is only going to get easier as we go through 2026, which is one of the reasons I think this is going to be a massive center of gravity for future agentic software development practices. We are all going to level five.

So what does StrongDM do? The team is three people: Justin McCarthy, the CTO, Jay Taylor, and Navan Chauhan. They've been running the factory since July of last year. The inflection point they identify is the updated Claude 3.5 Sonnet, which shipped in the fall of 2024. That's when long-horizon agentic coding started compounding correctness rather than compounding errors. Give them credit for thinking ahead; almost no one was thinking in terms of dark factories that far back. They found that 3.5 Sonnet could sustain coherent work across sessions long enough that the output was reliable, and it wasn't just a flash in the pan.

It wasn't just demo-worthy, so they built around it. The factory runs on an open-source coding agent called Attractor. The repo is just three markdown specification files, and that's it. That's the agent. The specifications describe what the software should do.

The agent reads them, writes the code, and tests it. And here's where it gets really interesting, and where most people's mental model starts to break down. StrongDM doesn't actually use traditional software tests. They use what they call scenarios, and the distinction is important.

Tests typically live inside the codebase. The AI agent can read them, which means the agent can, intentionally or not, optimize for passing the tests rather than for building correct software. It's the same problem as teaching to the test in education: you can get perfect scores and shallow understanding. Scenarios are different. They live outside the codebase. They're behavioral specifications that describe what the software should do from an external perspective, stored separately so the agent cannot see them during development. They function as a holdout set, the same concept machine learning practitioners use to prevent overfitting. The agent builds the software, and the scenarios evaluate whether the software actually works. The agent never sees the evaluation criteria, so it can't game the system.

This is a genuinely new idea in software development, and I don't see it implemented very often yet. But it solves a problem nobody was thinking about when all the code was written by humans. When humans write code, we don't tend to worry about developers gaming their own test suites, unless incentives are really skewed at that organization, and then you have bigger problems. When AI writes the code, optimizing for test passage is the default behavior unless you deliberately architect around it. It's one of the most important differences to understand as you start to think about AI as a code builder. StrongDM architected around it with external scenarios.

The other major piece of the architecture is what StrongDM calls their digital twin universe: behavioral clones of every external service the software interacts with. A simulated Okta, a simulated Jira, a simulated Slack, Google Docs, Google Drive, Google Sheets. The AI agents develop against these digital twins, which means they can run full integration-testing scenarios without ever touching real production systems, real APIs, or real data. It's a complete simulated environment purpose-built for autonomous software development.

And the output is real. CXDB, their AI context store, has 16,000 lines of Rust, 9,500 lines of Go, and 700 lines of TypeScript. It's shipped, it's in production, it works, it's real software, and it was built by agents end to end. Then there's the metric that tells you how seriously they take this. They say that if you haven't spent $1,000 per human engineer per day, your software factory has room for improvement. I think they're right.
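To make the holdout idea concrete, here is a minimal Python sketch of behavioral scenarios evaluated against a digital twin. It is an illustration under stated assumptions, not StrongDM's implementation: `FakeIssueTracker`, `create_ticket`, and the `Scenario` format are all hypothetical. In a real factory the scenarios would live outside the repository the agent can read; they sit in one file here only so the sketch runs on its own.

```python
"""Minimal sketch: holdout scenarios run against a "digital twin".

FakeIssueTracker, create_ticket, and the Scenario format are hypothetical
illustrations, not StrongDM's code. In practice the scenarios would be
stored outside the repository the coding agent can read.
"""
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FakeIssueTracker:
    """In-memory behavioral clone of a ticketing service (hypothetical)."""
    tickets: dict[int, dict] = field(default_factory=dict)
    next_id: int = 1

    def create(self, title: str, priority: str) -> int:
        # Mimic the real service's validation so bad calls fail loudly.
        if priority not in {"low", "medium", "high"}:
            raise ValueError(f"unknown priority: {priority}")
        ticket_id = self.next_id
        self.tickets[ticket_id] = {"title": title, "priority": priority}
        self.next_id += 1
        return ticket_id


# Agent-written code (hypothetical); the agent only ever saw the spec.
def create_ticket(tracker: FakeIssueTracker, title: str, urgent: bool = False) -> int:
    """Spec: file a ticket; urgent requests are high priority, others medium."""
    clean_title = title.strip() or "(untitled)"
    return tracker.create(clean_title, "high" if urgent else "medium")


@dataclass
class Scenario:
    """A behavioral check written from the outside and hidden from the agent."""
    name: str
    run: Callable[[FakeIssueTracker], bool]


HOLDOUT_SCENARIOS = [
    Scenario("urgent request is high priority",
             lambda t: t.tickets[create_ticket(t, "DB down", urgent=True)]["priority"] == "high"),
    Scenario("blank title is still filed",
             lambda t: t.tickets[create_ticket(t, "   ")]["title"] == "(untitled)"),
    Scenario("each ticket gets a unique id",
             lambda t: create_ticket(t, "a") != create_ticket(t, "b")),
]


def evaluate(scenarios: list[Scenario]) -> dict[str, bool]:
    """Run each scenario on a fresh twin; any exception counts as a failure."""
    results = {}
    for scenario in scenarios:
        try:
            results[scenario.name] = bool(scenario.run(FakeIssueTracker()))
        except Exception:
            results[scenario.name] = False
    return results


if __name__ == "__main__":
    results = evaluate(HOLDOUT_SCENARIOS)
    for name, ok in results.items():
        print(f"{'PASS' if ok else 'FAIL'}  {name}")
    print(f"{sum(results.values())}/{len(results)} holdout scenarios passed")
```

Each scenario gets a fresh twin, so tickets created by one check can't leak into another's result, and nothing here touches a real service.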

The $1,000 figure is not a joke. At $1,000 per engineer per day, AI agents can run at a volume where the cost of compute becomes meaningful, if you're giving them a mission to build software with real scale and real utility in production, and it's often still cheaper than the humans they're replacing.

Let's hop over and look at what the big AI labs are doing. The self-referential loop has taken hold at both Anthropic and OpenAI, and it's stranger than the hype might make it sound. Codex 5.3 is the first frontier AI model that was instrumental in creating itself, and that's not a metaphor. Earlier builds of Codex analyzed training logs, flagged failing tests, and suggested fixes to training scripts, so this model shipped as a direct product of its predecessors' coding labor. OpenAI reported a 25% speed improvement and 93% fewer wasted tokens in the effort to build Codex 5.3, and those improvements came in part from the model identifying its own inefficiencies during the build process. Isn't that wild? Claude Code is doing something similar. 90% of the code in Claude Code was written by Claude Code itself, and that number is rapidly converging toward 100%.

Boris Cherny isn't joking when he talks about not writing code in the last few months. He's simply saying his role has shifted to specification, direction, and judgment. Anthropic estimates that the whole company is moving to entirely AI-generated code about now. Everyone at Anthropic is architecting, and the machines are implementing. And the downstream numbers tell the same story. When I made a video on Cowork and talked about how it was written in 10 days by four engineers, what I want you to remember is that it wasn't four engineers writing every line by hand at top speed to get it out fast. No, no, no. They were directing machines to build the code for Cowork, and that's why it was so fast.

4% of public commits on GitHub are now directly authored by Claude Code, a number Anthropic thinks will exceed 20% by the end of this year. I think they're probably right. Claude Code by itself hit a billion-dollar run rate just six months after launch. This is all real today, in February of 2026. The tools are building themselves. They're improving themselves. They're enabling us to go faster at improving them, which means the next generation is going to be faster and better than it would have been otherwise, and we're going to keep compounding. The feedback loop on AI has closed. The question is not whether we're going to use AI to improve AI. The question is how fast that loop is going to accelerate, and what it means for the 40 or 50 million of us around the world who currently build software for a living. This is true for vendors as much as it's true for software developers.

And I don't think we talk about that enough, because the gap between what's possible at the frontier in February of 2026, what tends to happen in practice, and what vendors want to sell has never been wider. That METR study, a randomized controlled trial, by the way, not a survey, found that open-source developers using AI coding tools completed their tasks 19% slower. We talked about that, right? The researchers controlled for task difficulty. They controlled for developer experience. They even controlled for tool familiarity, and none of it mattered. AI made even experienced developers slower. Why, in a world where Cowork can ship that fast? Because the workflow disruption outweighed the generation speed. Developers spent time evaluating AI suggestions, correcting almost-right code, context switching between their own mental model and the model's output, and debugging subtle errors introduced by generated code that looked correct but wasn't.

46% of developers in broader surveys say they don't fully trust AI-generated code. These people aren't Luddites, right? These are experienced engineers running into a consistent problem. The AI is fast, but it isn't reliable enough to trust without what they view as vital human review.

This irony is the J-curve that adoption researchers keep identifying. When you bolt an AI coding assistant onto an existing workflow, productivity dips before it gets better. It goes down like the bottom of a J, sometimes for a while, sometimes for months. The dip happens because the tool changes the workflow, but the workflow has not been explicitly redesigned around the tool. You're running a new engine on an old transmission, and the gears are going to grind. Most organizations are sitting at the bottom of that J-curve right now, and many of them interpret the dip as evidence that AI tools don't work and that the vendors didn't tell them the truth. What is really evidence that their workflows haven't adapted gets read as evidence that AI is hype and not real.

I think GitHub Copilot might be the clearest illustration of this. It has 20 million users and, apparently, 42% market share among AI coding tools, and lab studies show 55% faster code completion on isolated tasks. I'm sure that makes the people driving GitHub Copilot happy in their slide decks. But in production, the story is much more complicated.

There are larger pull requests. There are higher review costs. There are more security vulnerabilities introduced by generated code. And developers are wrestling with how to do it well. One senior engineer put it really sharply: "Copilot makes writing code cheaper but owning it more expensive." That's a very common sentiment I've heard from a lot of engineers across the industry, not just about Copilot but about AI-generated code in general. The organizations seeing significant productivity gains with AI, call it 25 to 30% or more, are not the ones that just installed Copilot, held a one-day seminar, and called it done. They're the ones that thought carefully, went back to the whiteboard, and redesigned their entire development workflow around AI capabilities.

That means changing how they write their specs, changing how they review their code, changing what they expect from junior versus senior engineers, and changing their CI/CD pipelines to catch the new category of errors that AI-generated code introduces. It's end-to-end process transformation, not tool adoption. And end-to-end transformation is hard. Sometimes it's politically contentious. It's expensive. It's slow, and most companies don't have the stomach for it. That's why most companies are stuck at the bottom of the J-curve, and why the gap between frontier teams and everyone else is not just widening but accelerating. The teams on the edge that are running dark factories are positioned to gain the most as tools like Opus 4.6 and Codex 5.3 bring widespread agentic power to every software engineer on the planet.

95% of those software engineers don't know what to do with that. It's the ones actually operating at level four or level five who truly get the multiplicative value of these tools.

So if this is a politically contentious problem, if this is not just a tool problem but a people problem, we need to look at the nature of our software organizations. Most software organizations were designed to facilitate people building software: every process, every ceremony, every role. They exist because humans building software in teams need coordination structures. Stand-up meetings exist because developers working on the same codebase have to synchronize every single day. Sprint planning exists because humans can only hold a certain number of tasks in working memory, and they need a regular cadence to reprioritize. Code review exists because humans make mistakes that other humans can catch. QA teams exist because the people who build software can't evaluate it objectively. You get the idea. Every one of these structures is a response to a human limitation. And when the human is no longer the one writing the code, those structures aren't just optional; they're friction.

So what does sprint planning look like when implementation happens in hours, not weeks? What does code review look like when no human wrote the code, and no human can really review the diff the AI produced in 20 minutes, because it's going to produce another one in 20 more minutes? What does a QA team do when the AI's output has already been tested against scenarios the AI was never shown? StrongDM's three-person team doesn't have sprints. They don't have stand-ups. They don't have a Jira board. They write specs and they evaluate outcomes. That is it.

The entire coordination layer that constitutes the operating system of a modern software organization, the layer most managers spend 60% of their time maintaining, is simply deleted. It does not exist, not because it was eliminated as a cost-saving measure, but because it no longer serves a purpose. This is the structural shift that's harder to see than the technology shift, and it might matter more. The question becomes: what happens to the organizational structures that were built for a world where humans write code? What happens to the engineering manager whose primary value is coordination? What happens to the scrum master, the release manager, the technical program manager whose job is to make sure a dozen teams ship on time?

Look, those roles don't disappear overnight, but the center of gravity is shifting. The engineering manager's value is moving from coordinating the team that builds the feature to defining the specification clearly enough that agents can build the feature. The program manager's value is moving from tracking dependencies between human teams to architecting the pipeline of specs that flow through the factory. The skills that matter are shifting very rapidly from coordination to articulation: from making sure people are rowing in the same direction to making sure the direction is described precisely enough that machines can go do it. And by the way, engineering managers face an extra challenge: how do you coach your engineers to do the same thing? It's a people challenge.

If you think this is a trivial shift, you have never tried to write a specification detailed enough for an AI agent to implement it correctly without human intervention, and you've certainly never sat down and tried to coach an engineer to do the same. It is a different skill. It requires the kind of rigorous systems thinking that most organizations have never needed from most of their people, because the humans on the other end of the spec could fill in the gaps with judgment, with context, with a Slack message that says, "Did you mean X or Y?" The machines don't have that layer of human context. They build what you described. If what you described was ambiguous, you're going to get software that fills the gaps with software guesses, not customer-centric guesses. The bottleneck has moved from implementation speed to spec quality. And spec quality is a function of how deeply you understand the system, your customer, and your problem. That kind of understanding has always been the scarcest resource in software engineering. The dark factory doesn't reduce the demand for it. It makes that demand an absolute law. It becomes the only thing that matters.

Now, let's be honest. Everything I have just talked about assumes you're building from scratch. Most of the software economy is not built from scratch. The vast majority of enterprise software is brownfield: existing systems accumulated over years, over decades, running in production, serving real users, carrying real revenue. CRUD applications that process business transactions. Monoliths that have grown organically through 15 years of feature additions. CI/CD pipelines tuned to the quirks of a specific codebase and a specific team's workflow. Config management that exists in the heads of the three people who've been at the company long enough to remember why that one environment variable is set to that one value. You know who you are.

You cannot dark-factory your way through a legacy system. You cannot just pretend you can bolt that on; it doesn't work that way. The specification for that system does not exist. The tests, if there are any, cover 30% of your existing codebase, and the other 70% runs on institutional knowledge, tribal lore, and someone who shows up once a week in a polo shirt and knows where all the skeletons are buried in the code. The system is the specification. It's the only complete description of what the software does, because no one ever wrote down the thousand implicit decisions that accumulated over a decade or more of patches, hotfixes, and temporary workarounds that, of course, became permanent.

This is the truth about the interstitial states that lie along this continuum toward more autonomous software development. For most organizations, the path does not start with deploying an agent that writes code. It starts with developing a specification for what your real, existing software actually does. That specification work, the reverse engineering of the implicit knowledge embedded in a running system, is very difficult, and it's deeply human work. It requires the engineer who knows why the billing module has that one edge case for Canadian customers. It requires the architect who remembers which microservice was carved out of the monolith under duress during the 2021 outage and has been maintained ever since. It requires the product person who can explain what the software actually does for real users versus what the PRD says it does. Domain expertise, ruthless honesty, customer understanding, systems thinking: exactly the human capabilities that matter even more in the dark factory era, not less.
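One common way to start that reverse engineering is a characterization (or "golden master") suite: record what the existing code actually returns for a set of probe inputs, then hold any rewrite to that record. The Python sketch below is a minimal illustration under stated assumptions: `legacy_invoice_total`, its Canadian surcharge, the probe values, and the file name are all hypothetical, invented only to mirror the billing example above.

```python
"""Minimal characterization ("golden master") suite sketch.

Records what existing code returns today so a later rewrite can be
checked against it. legacy_invoice_total and its Canadian surcharge are
hypothetical stand-ins, not taken from any real system.
"""
import json
import tempfile
from pathlib import Path
from typing import Callable


def legacy_invoice_total(amount_cents: int, country: str) -> int:
    """Hypothetical legacy rule that was never written down anywhere."""
    if country == "CA" and amount_cents < 1000:
        return amount_cents + 150  # undocumented small-order surcharge
    return amount_cents


# Probe inputs chosen to hit edge cases, including the 1000-cent boundary.
PROBES = [(0, "CA"), (999, "CA"), (1000, "CA"), (999, "US")]


def record(path: Path) -> None:
    """Capture current behavior as the golden master."""
    snapshot = {f"{amt}:{cc}": legacy_invoice_total(amt, cc) for amt, cc in PROBES}
    path.write_text(json.dumps(snapshot, indent=2, sort_keys=True))


def check(path: Path, candidate: Callable[[int, str], int]) -> list[str]:
    """Describe every probe where the candidate diverges from the record."""
    expected = json.loads(path.read_text())
    failures = []
    for key, want in expected.items():
        amt, cc = key.split(":")
        got = candidate(int(amt), cc)
        if got != want:
            failures.append(f"{key}: expected {want}, got {got}")
    return failures


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        golden = Path(tmp) / "invoice_golden.json"
        record(golden)
        print(check(golden, legacy_invoice_total))  # the original matches itself

        def rewrite(amt: int, cc: str) -> int:
            return amt  # a "cleanup" that silently drops the surcharge

        print(check(golden, rewrite))  # flags the Canadian small-order probes
```

The recorded snapshot can double as a holdout set for a future agent: a rewrite that "cleans up" the Canadian rule fails on exactly the probes that exercise it.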

Look, the migration path is different for every business, but it starts to look something like this. First, you use AI as much as you can at, say, level two or level three to accelerate the work your developers are already doing: writing new features, fixing bugs, refactoring modules. This is where most organizations are now, and it's where the J-curve productivity dip happens. You should expect that.

Second, you start using AI to document what your system really does: generating specs directly from the code, building scenario suites that capture real existing behavior, and creating the holdout sets that a future dark factory will need. Third, you redesign your CI/CD pipeline to handle AI-generated code at volume, with different testing strategies, different review processes, and different deployment gates. Fourth, you begin to shift new development to level four or five autonomous agent patterns while maintaining the legacy system in parallel.

That path takes time. Anyone telling you otherwise is selling you something. The organizations that get there fastest aren't necessarily the ones that bought the fanciest vendor tools. They're the ones that can write the best and most honest specs about their code, that have the deepest domain understanding, that have the discipline to invest in the boring, unglamorous work of documenting what their systems really do, and that know how to support their people as they scale up for this new dark factory era. I can't give you a clear timeline here. For some organizations, this is looking like a multi-year transition, and I don't want to hide the ball on that. Some are going faster, and it's looking like a matter of months. It will depend, frankly, on your stomach for organizational pain.

And that brings me to the talent reckoning. Junior developer employment is dropping 9 to 10% within six quarters of widespread AI coding tool adoption, according to a 2025 Harvard study. Anyone out there at the start of their career is nodding along and saying it's actually worse than that. In the UK, graduate tech roles fell 46% in 2024, with a further 53% drop projected by 2026. In the US, junior developer job postings have declined by 67%.

Simply put, the junior developer pipeline is starting to collapse, and the implications go far beyond the people who cannot find entry-level jobs, although that is bad enough and a real issue. The career ladder in software engineering has always worked like this: juniors learn by doing. They write simple features. They fix small bugs. They absorb the codebase through immersion. Seniors review their work, mentor them, and catch their mistakes. Over five to seven years, a junior becomes a senior through accumulated experience. The system is frankly an apprenticeship model wearing enterprise clothing.

AI breaks that model at the bottom. If AI handles the simple features and the small bug fixes, the work juniors lean on, where do juniors learn? If AI reviews code faster and more thoroughly than a senior engineer doing a PR review, where does the mentorship happen? The career ladder is getting hollowed out from underneath: seniors at the top, AI at the bottom, and a thinning middle where learning used to happen.

So the pipeline is starting to break. And yet we need more excellent engineers than we have ever needed before, not fewer. I've said this before: I do not believe in the death of software engineering. We need better engineers. The bar is rising, and it's rising toward exactly the skills that have always been the hardest to develop and the hardest to hire for.

The junior of 2026 needs the systems design understanding that was expected of a mid-level engineer in 2020. Not because the entry-level work necessarily got harder, but because the entry-level work got automated and the remaining work requires deeper judgment. You don't need someone who can write a CRUD endpoint anymore, right? The AI will handle that in a few minutes. You need someone who can look at a system architecture and identify where it will break under load, where the security model has gaps, where the user experience falls apart at the edge cases, and where the business logic encodes assumptions that are about to become wrong. And if you think as a junior that you can use AI to patch those gaps, I've got news for you: the seniors are using AI to do that too, and they have the intuition on top. So you need systems thinking. You need customer intuition. You need the ability to hold a whole product in your head and reason about how the pieces interact. You need the ability to write a specification clearly enough that an autonomous agent can implement it correctly, which requires understanding the problem deeply enough to anticipate the questions the agent does not know to ask. Those skills have always separated really great engineers from merely adequate ones. The difference now is that adequate is no longer a viable career position, regardless of seniority, because adequate is what the models do.

Anthropic's hiring has already shifted. OpenAI's hiring has already shifted. Hiring is shifting across the industry toward generalists over specialists: people who can think across domains rather than people who are expert in one narrow tech stack. The logic is straightforward. When the AI handles the implementation, the human's value lies in understanding the problem space broadly enough to direct the implementation correctly. A specialist who knows everything about Kubernetes but can't reason about the product implications of an architectural decision is far less valuable than a generalist who understands the systems, the users, and the business constraints, even if they can't hand-configure a pod.

Some orgs are moving toward what amounts

to a medical residency model for their

junior engineers: simulated environments

where early-career developers learn by

working alongside AI systems, reviewing

AI output, and developing judgment about

what's correct and what's subtly wrong.

It is not the same

thing as learning by writing code from

scratch. I don't want to pretend it is,

but it might be better training for a

world where the job is directing and

evaluating AI output rather than

producing code from a blank editor. I

will also call out, as I've called out

before, there are organizations

preferentially hiring juniors right now,

despite the pipeline collapsing,

precisely because the juniors they are

looking for provide an AI-native

injection of fresh blood into an

engineering org where most of the

developers started their careers long

before ChatGPT launched in 2022. In

that world, having people who are AI

native from the get-go can be a huge

accelerating factor. And that points to

one of the things that is a plus for

juniors coming in. Lean into the AI if

you're a junior. Lean into your

generalist capabilities. Lean into how

quickly you can learn. Show that you can

pick up a problem set and solve it in a

few minutes with AI across a really wide

range of use cases. Gartner is

projecting that 80% of software

engineers will need to upskill in

AI-assisted dev tools by 2027. They're estimating

wrong; it's going to be 100%. The number

is not the point. The question isn't

whether the skills need to change. We

all know they will. It's whether we in

the industry can develop the training

infrastructure quickly enough to keep

pace with the capability change. Because

I've got to be honest with you, if

you're a software engineer and the last

model you touched was released in

January of 2026, you are out of date.

You need a February model. And that is

going to keep being true all the way

through this year and into next year.

And whether the organizations that

depend on software can tolerate a period

where the talent pipeline is being built

and rebuilt like this on a monthly basis

is a big question because you have to

invest in your people more to get them

through this period of transition. So

what does the shape of a new org look

like when we look at AI-native startups?

How are they different from these

traditional orgs? Take Cursor, the AI-native

code editor: it is past half a billion

dollars in annual recurring revenue, and

it has, at last count, a few

dozen employees. It's operating at

roughly three and a half million in

revenue per employee in a world where

the average SaaS company is generating

$600,000 per employee. Midjourney is

similar. They have the story of

generating half a billion in revenue

with a few dozen people, around a hundred

or a little more, depending on who's

counting. Lovable is well into the

multi-hundred-million-dollar range in ARR after

just a few months, and their team is

scaling, but headcount is way, way behind the

revenue growth they're

experiencing. They are also in that

multi-million-dollar revenue-per-employee

world. The top 10 AI-native

startups are averaging a bit over three

million in revenue per employee, which is

between five and six times the SaaS

average. This is happening often enough that

it is not an outlier. This is the

template for an AI-native org. So what

does that org look like? If you have 15

people generating a hundred

million a year, which we've seen in

multiple cases in 2025, what does that

look like? It does not look like a

traditional software company. It does

not have a traditional engineering team,

a traditional product team, a QA team, a

DevOps team. It looks like a small group

of people who are exceptionally good at

understanding what users need, who are

exceptional at translating that into

clear specs, and who are directing AI

systems that handle the implementation.

The org chart is flattening radically.

The layers of coordination that exist to

manage hundreds of engineers building a

product can be deleted when the

engineering is done by agents. The

middle management layer is going to

either evolve into something

fundamentally different at these big

companies or it's going to cease to

exist entirely. The only people who

remain are the ones whose judgment

cannot be automated. The ones who know

what to build for whom and why, and who

have excellent AI sense. It's sort of like

horse sense, where you have a sense of

the horse if you're a rider and you can

direct the horse where you want to go.

You'll need people who have that sense

with artificial intelligence. And yes,

it is a learned skill. The restructuring

that is going to happen as more and more

companies move toward that Cursor model

of operating, even if they never

completely get there, that restructuring

is real. It's going to happen. It's

going to be very painful for specific

people in specific roles: the middle

management layer, the junior developer

whose entry-level work is getting

automated first, the QA engineers who

just run manual test passes, the release

manager whose entire value is just

coordination. Those kinds of roles are

going to have to transform or they're

just going to disappear. And for people

in those roles, you need to find ways to

move toward developing with AI and

rewriting your entire workflow around

agents as central to your development.

That is going to look different

depending on your stack, your manager's

budget for token spend, and your

appetite to learn. But you need to lean

that way as quickly as you can for your

own career's sake. I want to leave you

with one thing that gets lost in every

conversation about AI and jobs. We have

never found a ceiling on the demand for

software and we have never found a

ceiling on the demand for intelligence.

Every time the cost of computing has

dropped from mainframes to PCs, from PCs

to cloud, from cloud to serverless, the

total amount of software the world

produced did not stay flat. It exploded.

New categories of software that were

economically impossible at the old cost

structure became viable and then

ubiquitous and then essential. The cloud

didn't just make existing software

cheaper to run. It created SaaS, mobile

apps, streaming, real-time analytics,

and a hundred other categories that

could not exist when you had to buy a

rack of servers to ship something. I

think the same dynamic applies now and

it applies at a scale that dwarfs every

previous transition. Every company in

every industry needs software. Most of

them, like a regional hospital, a

mid-market manufacturer, or a family

logistics company, can't afford to

build what they need at current labor

costs. A custom inventory system

could traditionally cost half a

million or more and take over a year. A

patient portal integration might cost a

third of a million. You get the idea.

These companies tend to make do with

spreadsheets today. But we are dropping

the cost of software production by an

order of magnitude or more. And now that

unmet need is becoming addressable, not

theoretically but right now. You can serve markets

that traditional software companies

could never afford to enter. The total

addressable market for software is

exploding. Now this can sound like a

very comfortable rebuttal to people

struggling with the pain of jobs

disappearing. It is not the same thing.

Just saying the market is getting bigger

doesn't fix it. But it is a structural

observation about what happens as

intelligence gets cheaper. The demand is

going to go up, not down. We watched

this happen with compute, with storage,

with bandwidth, with every resource

that's ever gotten dramatically cheaper.

Demand has never saturated. The

constraint has always moved to the next

bottleneck. And in this case, that bottleneck is the

judgment to know what to build and

for whom. The people who thrive in this

world are going to be the ones who were

always the hardest to replace. The ones

who understand customers deeply, who

think in systems, who can hold ambiguity

and make decisions under uncertainty,

who can articulate what needs to exist

before it exists at all. The dark

factory does not replace those people

and it won't. It amplifies them. It

turns a great product thinker with five

engineers into a great product thinker

with unlimited engineering capacity. The

constraint moves from "can we build it?" to

"should we build it?", and "should we build it?"

has always been the harder and more

interesting question. I don't have a

silver bullet to magically resolve this,

but I have to tell you that we must

confront the tension or we are being

dishonest. The dark factory is real. It

is not hype. It actually works. A small

number of teams around the world are

producing software without any humans

writing or reviewing code. They are

shipping production code that

improves with every single model

generation. The tools are building

themselves. The feedback loop is closed.

And those teams are going faster and

faster and faster and faster. And yet

most companies aren't there. They're

stuck at level two. They're getting

measurably slower with AI tools they

believe are making them faster. They're

wrong. And they're running organizational structures

designed for a world where humans do all

of the implementation work. Both of

these things are true at the same time.

The frontier is farther ahead than

almost anyone wants to admit and the

middle is farther behind than the

frontier teams like to talk about. The

distance between them isn't a technology

gap. It's a people gap. It's a culture

gap. It's an organizational gap. It's a

willingness to change gap that no tool

and no vendor can close. The enterprises

that get across this distance are not

the ones that buy the best coding tool.

They're the ones that do the very hard,

very slow, very unglamorous work of

documenting what their systems do, of

rebuilding their org charts and their

people around the skill of judgment

instead of the skill of coordination.

And they are organizations who invest in

the kind of talent that understands

systems and customers deeply enough to

direct machines to build anything that

should be built. And those orgs need to

be honest enough with themselves to

admit that this change will not happen

as fast as they want it to because

people change slowly. The dark factory

does not need more engineers, but it

desperately needs better ones. And

better means something different than it

did a few years ago. It means people who

can think clearly about what should

exist, describe it precisely enough that

machines can build it, and who can

evaluate whether what got built actually

serves the real humans it was built for.

This has always been the hard part of

software engineering. We just used to

let the implementation complexity hide

how few people were actually good at it.

The machines have now stripped away that

camouflage, and we're all about to find

out how good we are at building

software. I hope this video has helped

you make sense of the enormous gap

between the dark factories of automated

software production and the way most of

us are building software today. Best of

luck navigating that transition. I wrote

up a ton of exercises and a ton of

resources over on the Substack if you'd

like to dig in further. This tends to be

something where people want to learn

more, so I wanted to give you as much as

I could. Have fun, enjoy, and I'll see

you in the comments.
