Global Network Webinar - Introduction Module of Advancing Responsible AI - 3 June 2025

Now Playing

Global Network Webinar - Introduction Module of Advancing Responsible AI - 3 June 2025

Transcript

1378 segments

We have eight modules to promote

understanding of the need for guard

rails in managing AI

risks. And the table of contents we look

first into the diversity of AI. Then we

try to understand the AI risks and then

little bit discussion about guard rails.

What are they they and then

we are exploring the principles of

responsible AI and a little bit about

the AI regulations also and then very

short introduction to other

modules but let's first try to

understand the diversity of

AI you can all you can always uh take a

different kind of lenses and and one

lens is that that you uh study AI from

the roles of AI. For example, this

supportive AI has a very has a has a

their AI has very clear assistive role

and that's very clear nowadays for

everyone and it's a tool for tool to

enhance expert work and it acts there as

a asparing partner or knowledge

enhancer and there the expert remains

always accountable for how AI generated

information is shared and used. And

there on the opposite side we have this

autonomous AI uh full automation where

the AI performs tasks totally without

human

assistance. AI chat spots are like this

and this where the hype is now very very

very very high. this agentic AI it it

the agent it refers to systems that make

independent decisions they plan actions

without human

assistance and this also these

self-trained AI

models what we are we have been for

example at statistics Finland doing for

years

now they are trained for specific

purposes uh and they are also autonomous

although they are not foundation

models and they're there in the bit

there in between there's this

collaborative AI where the AI

does the main part of the work but the

there's still human in the loop who

decides if the output of AI was correct

or not and the expert remains

accountable

able for ensuring the correctness of AI

generated

outputs. But you can also look at

the AI from totally different

perspective because AI will arrive and

arrives in many different packages.

Uh I borrowed a picture from a slideshow

from Gardner because I thought this is

really a good example and it helps to

understand the diversity of these

packages. It enters to your office. So

on the on the right hand side there's

this green green green box and there are

these off the shelf applications such as

co-pilots, powerbi, chat GPT and so on.

So where AI is embedded into software by

vendors and then the the red box uh

bring your own AI box. There are

solutions de developed where within the

organization for specific

needs for example by different

department

uh custom GPTs in chat GPT platform are

such a

things and that also includes shadow

AI and and and that's

also an AI which is totally without

oversight. And then in the blue blue

box, we have these in-house trained

models or adapted foundation models. We

are probably fine-tuning and so on. And

in the blue box, there are also models

embedded by software develops developers

into the software.

And as we can see that there in the

middle there's this circle that says

that we should be able to coordinate and

run and secure all this that comes into

the

office.

And then we then there's a another

perspective a third

perspective while AI may operate

autonomously or collaborative the

significance and the risks of its task

must be

assessed

and not all AIdriven act actions they

they don't carry equal height or risks

for the organiza for the organization's

core

objectives.

So task

significance so how critical is the

task to the organization core operations

or goals. So low sign significance is uh

AI drafting a standard email response.

But the example of high significance is

when AI is generating statistical

outputs used in a national policym and

there's a big difference within these

two but we also have to do risk

assessment uh where we try to identify

the potential consequences when AI makes

a failure.

So what's the potential impact of AI

failure

in in in this task? You ask yourself

this question. So examples of lowrisk

task. So again automating document

formatting and the high-risk tasks. What

happens when AI editing data for

official statistics makes a failure?

It's a there's a huge difference.

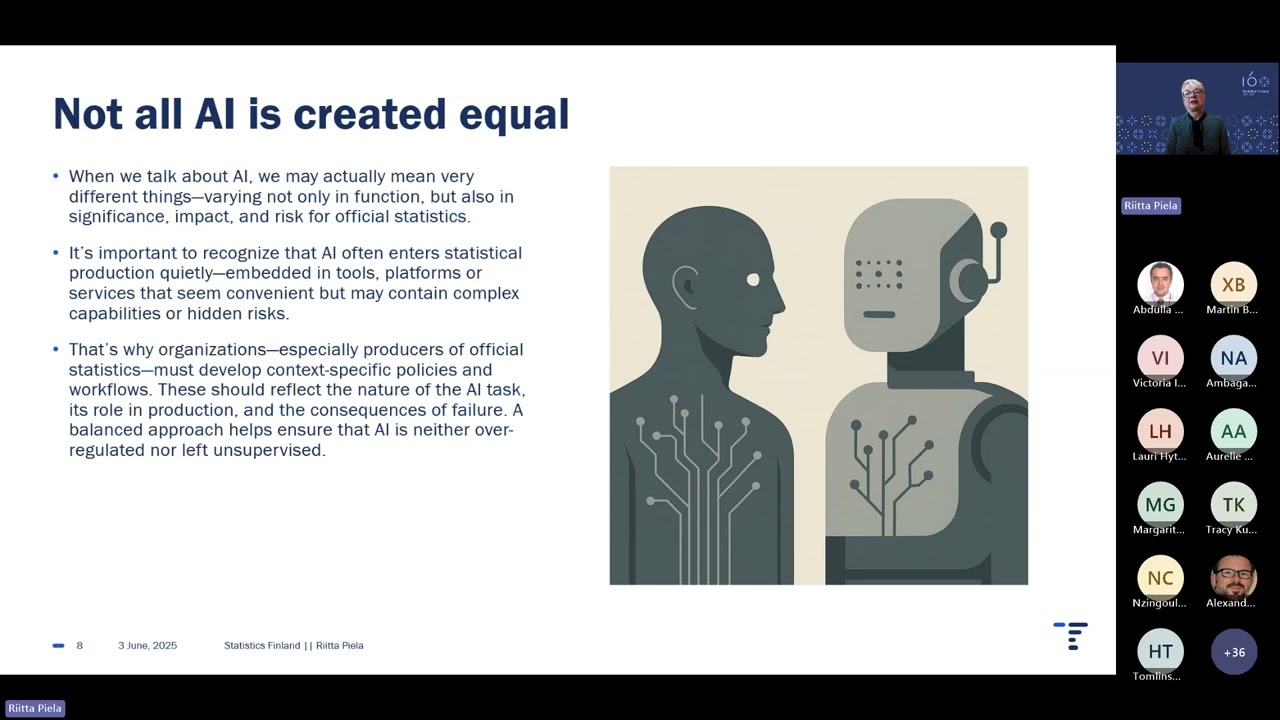

So not all AI is created

equal. So we may actually mean very

different things varying not only

function but in significance impact and

risks for official statistics.

So it's very important that we recognize

that AI also enters statistical offices

very quietly embedded in tools,

platforms and

services and we don't really know

uh about these hidden risks when we are

not aware that there is an AI. So I

think

especially producers of official

statistics we have to have context

specific policies and workflows that

they reflect to the nature of AI task

its role in production and the

consequences of

failure and

and that would somehow ensure that AI is

neither overregulated nor left

unsupervised and you have to find the

balance

here

and adopting AI it is really balancing

risks and

benefits and now particular particularly

uh generative AI at the present is it's

fundamentally transforming all

industries not just uh

statistics it's it's offering

groundbreaking thinking opportunities

and and really on the other side

significant

challenges.

So the more organizations utilicize AI

to enhance their operations, the more

risks arise. So I I I somehow thought

that it's it's like a it can be compared

to preparing puffer fish. If the

preparation of of fugu puffer fish goes

wrong, the chef uh has to commit

hariri because it will poison all the

all those people who ate that. So when

we and when producers of official

statistics when we utilize AI in our

data production, we bear a full

responsibility for the accuracy

uh and accountability of the data we

have generated. Just the same way as a

chef is responsible for the preparation

of fugu.

So but but we cannot always eliminate

all the risks but we can minimize risks

and manage the

responsibility while we are maximizing

the

benefits.

Uh unfortunately AI is a as a is a

greatest power of cyber risks at the

moment already. It's not only in the

future.

uh for example an AI powered chatbot

with uh weak security can be manipulated

through prompt injection attacks to leak

sensitive information and many other

things. So and many identified AI

risks are also cyber risks but not all

are cyber risk is especially refers to

digital threats where attackers

deliberately exploit system vulner

vulnerabilities

uh data breaches uh information leaks

and so on.

So I think always that understanding AI

before using it it is a question of

responsibility also.

So when adopting it we must understand

what we are working with. So we just

cannot take a black box such as

generative AI is now and ask it to

produce statistics for us. So, so the

the hype around generative AI has been

immense immense and as a as a result

it's not always clear

what generative AI is really useful for

and where it's simply not mature enough

yet.

uh but one way to begin to understanding

the opac parts of AI is to look at

through the lens of risk and of course

as a producers of official statistic

what would what could be more natural

than try to classify the

risks and why is AI risk categorization

so important

because

Yeah, thousands of AI related risks they

have been already

identified but it is impractical to

assess each one individually. So instead

categorizing these risks at a higher

level it helps us to understand it

better. Uh there is already such a

living database called MIT AI risk

repository. It's been generated by MIT

researchers and there are own over at

the moment over

1,600 AI risks categorized by their

cause and risk

domain and the causal classification

tells that tells you also how when and

why these risks occur. So I really

encourage the statistical society to

explore and consider utilizing this AI

risk

repository. Really really it's a really

good uh tool for identifying

risks. Uh I have picked up couple of

uh specific uh

subclasses from this MIT classification.

For example, uh this governance failure,

uh it's it it tells that there are very

weak rules and oversight that can't keep

up with AI progress and that leads to

poor risk management and then this

competitive dynamics. It's it means

that rapid AI development is is driven

by competition and and that is

increasing the risks of unsafe and

faulty

systems. But uh unfortunately I have had

not had enough time to go through so so

in

so in detail this um taxonomy of MIT

that I still used my own classification

uh in this presentation because there

are a couple of things that are lacking

there and and that perhaps I should have

somehow made a combination of these too

because for example these ethical and

societal risks and societal impact risks

which I call actually there in another

slide microlevel risks they are

something that are little bit lacking

from that other other classification and

and many other re researchers have also

attempted to classify all these uh AI

related risks

and they can be really classified from

multiple perspectives.

I go through now the

uh a main classes of the AI

classification I have made but there

there are some there's a they they at

the risk side they

use such a vocabulary that may be

sometimes a little bit strange for

example this prompt prompt injection

it's a malicious

where man malicious actor manipulates an

AI system prompts uh and that orders the

the model's behavior uh and

jailbreaking. It's it's where AI refers

to bypassing safeguards to make an AI

system generate proh prohibited and

unintented outputs and so on. And uh but

perhaps if if somebody wants to check

more about this vocabulary, this

presentation will be

shared.

Uh AI systems, they often handle

sensitive data and that makes them

really vulnerable to privacy violations,

all kind of data breaches and cyber

attacks.

So and um if there's a poor data

protection, it increases these risks

posing threats to organizations and

individuals. And I have some exo

examples here

uh

uh like like this generative

uh AI tools they have unintentionally

leaked user data and that happened also

to chat GPT

2023 where data bridge was where there

was a data bridge where some users could

see other users

uh chat histories and payment

information due to a buck. And this was

just an short

uh example what can

happen, what kind of privacy and

security risks can really

occur. And then these operational risks

they pertain challenges in in

maintaining the efficiency and cost

effectiveness and the reliability of AI

systems. Uh it's about ensuring that the

AI infrastructure remains controlled and

predictable and maintains the efficiency

and reliability.

Uh these operational failures they are

uh can be such failures where the system

starts to degradate and reliance on

third party services can then lead to

failures and generative AI systems

may be vulnerable to these denial of ser

service attacks

uh which can make them temporarily

unusable

And and there are also resource

dependency

risks. For example, high cost and

limited expertise can create

dependencies on on just few large

technology

companies. And then we have uh ethical

and societal risks.

uh and

examples. This I think these are most

familiar to all statisticians. For

example, this bias bias in in training

data. It can lead to unfair and unfair

outcomes affecting groups on race,

gender or societ soc economic status.

uh manipulation of societies is can be

something like AI algorithms on social

media platforms can amplify political

polarization and then this macrolevel

impacts soci societal impact

risks. So it's it's not

only the individual users AI can affect

it can also shape society as a whole and

these micro level risks include economic

environment or even existential

concerns. So example of economic

risks that AI and automation can replace

jobs especially low skilled ones and in

and that can cause inequal

inequality and at the same time for

example new AIdriven jobs they require

reskilling and retraining and perhaps

not everyone has access to

and environmental

risks where large AI models consume a

lot of energy and resources.

My daughter always says to me when I

answer I check that everything from

chach GPT that don't use that chachpd it

will

destroy our in whole environment and so

I think they the younger generation is

already more aware of that

uh and then these existential risks

uh some experts warn about

uh uncontrollable super super

intelligent AI that could pursue goals

that conflict with human values and and

that can cause serious long-term risks

to humanity. But these kind of risks

have not occurred yet, but this is

something that some researchers see that

might happen in the future.

And then uh explainability and

transparency risk although

other responsible AI principles

explainability and transparency they are

in in totally separate things but but in

this risk classification I've added them

into one and same risk class. So they

can make these uh lack of explanability

and

transparency can make it difficult to

stay for stakeholders to understand the

whole decision making process and and

that really undermines the trust. So if

stakeholders can't follow how AI works,

trust and accountability suffer.

So many system are like black boxes. The

logic is hidden or it's too complex to

explain. So if we can't explain AI's

role and decision making process,

uh I think people are less likely to

accept and oversee its use. So output

clarity is important and generative AI

models may produce

fabricated or culturally inappropri and

inappropriate content.

So complicating the the whole

explanability process. Uh for example is

uh hallucinations and gen AI outputs.

They require still human re review as

their origins are often opac. So that

makes trust and validation really

difficult.

So decisions made by AI can be hard to

understand and a

explain. And

then functional and technical

risks. This focuses on vulnerabilities

and exploits within AI models and

systems. And

uh yeah the risk category we had early

operational reliability risks focuses on

practical management and resource

dependencies. So

uh system exploits as a example where

attackers can bypass safety

measures using these prompt injections

and jailbreaking methods, should I call

them methods, even though they are quite

criminal methods and they they allow

malicious users to make the system

produce

really content.

uh and model integrity risks

uh are where over time the AI systems

they become less

reliable. And the example of of

course quite familiar for for those data

scientists is is model drift system

accuracy cleans and the real world

condition when real world conditions

change. And other examples I date the

poisoning and and other inconsistent

behaviors.

uh legal risk they arise from the reg

regulatory and liability challenges that

are associated with AI. Uh there are

copyright copyright problems

and and and with regulatory compliance.

AI evolves faster than many legal

systems. So that creates a confusion

about who is responsible and what rules

apply

and regulator regulatory non-compliance

uh failure to add to AI specific

regulations such as what we have now in

EU AU's AI act or GDPR which is quite

important here on the AI

uh branch. So it can lead to legal

penalties and

restrictions. Uh organizations must

actively follow legal

updates and then we come to these guard

rates in the context of artificial

intelligence. So AI guard rules they are

safeguards that ensure AI systems

operate safely and ethically and and and

operate so as they are intended to

operate. So the term now covers a broad

set of protections that help minimize

and and mitigate these risks. So they

can be technical guard rails, they can

be organizational guardrails, they can

be ethical guardrails. And why these

guard rails? Why do they matter? Because

without these

guardrails, AI can

behave unpredictably.

So guardrails ensure that AI systems

operate safely, ethically and minimizing

these potential

risks. Examples, MLOps and LLMOPS. They

are practic practical guard rail

frameworks. They include ethical uh uh

uh and

technical guard rails. So they provide

tools and processes for managing AI

through its entire life cycle. Uh

responsible AI principles. They are more

ethical guard rails. They guide AI to

align with public values like fairness,

explainability and so on. Uh and then we

have AI regulations. Um they are legal

guard rails.

And then again let's put these all

things together. So there are these many

kind of guardrails. And the goal of this

whole

whole project or whatever I should call

this advancing responsibility AI is to

build a network of guard rails to

support the safe use of AI especially of

course in official statistics.

So what does it mean in practice? So

that you put the right guard rails into

the right places. So strict oversight

there where it's needed and no

unnecessary restrictions where it's not

needed. So guardra protect core

principles of responsible

AI. And how do we turn the principles

into guard rails? So that you identify

risk is just the beginning. So these

principles, responsible

AI principles, they act as a guard raise

only when they are not just words but

goals we actively commit to. So we need

organizationwide

commitment that we can put these

principles into

practice. And once the principles are

set, the next step is to turn each one

of one into concrete

actions. For example, when we talk about

transparency, MLOps can support it. But

transparency, it requires governance

structures and clear communication to

stakeholders. And for example, when we

talk about data protection and privacy

techniques, we know like anonymization

and or sedonmization they help but they

are not enough without proper procedures

and oversight mechanisms. So in other

words, principle responsible AI

principles, they become guard rails only

when supported by practical tools,

processes and

accountability. And then we have the

principles of responsible AI. Uh the

naming and the division of responsible

AI principles, it's it's not

standardized. However, the overall set

of aspects they encompass has largely

become established standard. So I have

here

uh uh the principles I have used here is

ethics, data privacy, data security,

transparency, explanability,

inclusivity, fairness and

accountability.

uh transparency

is one of what I think it's one of the

most important principles. It's not only

a important principle in AI but in the

whole statistical production. So it

means that the processes decisions

decisions and the use of AI system is

open and visible to all stakeholders.

And it's not only about technical

transparency ML ops can provide but it's

also the organizational

transparency. It's clearly communicating

how AI is governed by whom and under

what

rules. It includes also stakeholders

access access to relevant document

documentation how the statistical office

has produc used AI in producing a data

and why this matters it enables trust

and

fairness and again I'm repeating now I

recognize now technical transparency

alone is not enough the stakeholders

they also must understand the context

and scope of AI use. So real

transparency then includes both

technical clarity and organizational

openness.

uh AI should also make fair and unbiased

decisions and fairness means that

predictions and decisions must be

equitable and free from

discrimination and fairness applies

across the whole AI life cycle from

training the model to output.

And how do we achieve this fairness? It

requires really careful attention to

first to the training data. We all know

that biased data produces biased

results. Tools for bias

detection. Fairness is isn't one time.

It requires continuous technical checks

and monitoring and also ethical

reflection. And why transparency and

explainability matter? Because fairness

loses meaning if experts and

stakeholders can understand AI

decisions. Explaining how decisions

decisions are made builds trust and

accountability.

And again generative AI brings really

big extra

challenges outputs generative AI makes

they are not always very traceable

uh and not traceable to specific

training data although they are open

access models but it's impossible to go

through all that data it has used that

has been used in training that model and

that makes bias detection and and

explanability really hard and I would

say it's uh quite often even impossible

with generative

AI. Uh

accountability means that we have clear

responsibility for decisions AI

makes and who is

responsible. We have to define

ownership. Who ensures the quality and

reliability AI assisted data at each

phase of statistical

production? Can we trace and verify?

Yes, AI models and data set must be

documented and auditable. It must be

clear how the data was formed or how the

decisions were

made and data production must follow

regulations and also align with

principles ethical principles of

official statistics.

But the final

responsibility is is a big question and

NSOS must ensure that the data is

suitable for decision making and

research and does not carry hidden risks

or

misinterpretation. So NSO is

responsible.

Now at the beginning I had these three

roles there and it's quite clear

that with with the supportive and

collaborative AI there's this human in

the loop and human oversees the result

and the impact of AI and is

responsible and the responsibility lies

with that user. But then with autonomous

AI it gets a little bit more

difficult. The person using or

triggering it. It is not re responsible.

So rather the responsibility lies

somewhere with those who have generated

the service and authorize authorized its

deployment.

So this is not that simple anymore.

uh ethical considerations with AI they

they must be core part of AI development

and use

uh and yes not an

afterthought and what ethical AI means

it means that AI must respect societal

values and avoid

harm and it should not unfairly benefit

or disadvantage manage specific

groups and how do we ensure that our AI

is

ethical. It regard requires really clear

governance who is responsible for

ethical

compliance and requires accountability

across the whole AI life cycle and

requires ethical impact assessment. Not

just that technical validation and

guiding ethical principles, doing good

and not doing harm and respecting human

agency and promoting fairness and making

things understandable.

And one of

the perhaps well-known

uh principles is data privacy and data

security and that's really protecting

personal

data and

it's for statistical offices it's quite

important

thing and and also it's essential for

maintaining public trust.

and AI systems. They must comply with

laws like GDPR, but they also must

protect sensitive data using strong

technical and organizational

safeguards. Communicating clearly how

data is handled

helps earn and keep that public trust.

Uh it

requires regulatory

compliance, technical safeguards like

usage of encryption, secure storage

access control, certainization,

synthetic data and so on and ethical

principles in practice. It's conducting

bias audits, ensuring transparency in

how the data is processed and used.

So privacy and security they are not

just legal obligations. They are

fundamental to trust for the and

responsible AI and again they are

fundamental to statistical offices for

to remain public

trust and then

explainability. Uh how do we achieve it?

We have tools and methods that help

technical and

non-technical people understand the

model's reasoning. Uh we have common

methods for explainable AI like lime and

shep. Uh but

uh deep learning and large language

models are much harder to explain

decisions in in large language models.

They rely on such a complex patterns

that are really hard to trace and

traditional tools

like lime and shop. They often fall

short and that makes

explanability and therefore trust also

harder to achieve in high stakes uses.

inclusivity it's it's

not as a principle in all the

uh responsible AI classifications but I

always want to have it as a separate one

because I think that that it ensures

somehow equal repres

representation in in the whole AI

development and helps prevent unintended

exclusion or harm and build systems that

reflect the needs of broad and diverse

population

and but uh why it's difficult and

especially in generative

AI because generative AI models often

learn from biased training data. So we

have then also quite often biased

output.

This can lead to unequal treatment or

exclusion of certain

groups. And inclusive public sector AI

is is really important because we have

to design services usable by all

citizens regardless of

technical skills.

So uh now I've got gone through go

checked all the uh

principles uh of responsible AI but then

couple of slides about implementing

responsible AI and checking a little bit

about the AI regulation side. So

implementing responsible AI is not a

theory it's a really a commitment to a

to action. It ensures the AI supported

statistics that they are transparent,

fair, accountable, secure, ethical and

so on. By actively committing to these

principles, statistical producers can

strengthen the public

trust and increase the reliability and

credibility of

outputs and ensure AI enhances rather

than compromises statistical integrity.

So without responsible AI, I think

there's a higher risk of bias, misuse

and loss of credibility and it would

will undermine evidence-based policym

and public trust in official

statistics. And then uh another piece in

these AI puzzle uh AI regulations, they

embed key responsible AI principles like

transparency, fairness and data privacy

into formal legal

requirements and when when responsible

AI are voluntary and internally defined

while the regulations bring external

enforcement. ment and industrywide

consistency. So most AI laws are

motivated by responsible AI concerns

such as avoiding bias and ensuring

explanability together. I I think they

complement each other. So responsible AI

builds culture and values and

regulations ensure compliance and and

and accountability.

And examples from practice all big

companies, tech companies like and for

example Microsoft and Google, they apply

also

responsible AI principles

uh even beyond the

laws required uh just to strengthen the

public trust and ensure uh ethical AI

development.

uh about the AI regulations that's our

module two actually uh just two slides

about this we have two broad approaches

we have regulated AI some specific AI

laws such as uh this European Union EU

act and then we have in United States

federal and state level AI legislations

and in China We have nationally

developed AI regulations and then we

have existing laws applied to AI and

most of the countries rely on general

laws uh to regulate AI and including

Australia, Canada, New Zealand and so

on. But but

but definition of AI varies a lot. We

have for example OECD definition EU's AI

act uh SNA and balance of payments

uh US census bureau and this definition

they vary a lot and it's it makes things

a little bit difficult sometimes

uh EU's AI act it's the systems are

classified into different risk

categories based on how they likely the

how likely the risk is and what the AI

systems is in intended to be used for.

So problem it I see there's a problem

related to the definition of an operator

the AI act. So the use for example

statist Finland is likely the use of AI

is is likely to fall into the low or

minimal risk category because Statistics

Finland itself does not make decisions

or take actions based on the data it

produces. The actual decision maker who

uses AI generate data from statistics

Finland as a basis for decisions may not

be using AI themselves and therefore

activities may not fall under AI acts

definitions of an AI operator user. So

this creates a a regulatory blind spot

where the influence of AI is significant

but the no single actor formally meets

the definition of AI user operator I've

forgotten the term in in English under

the regulation. So just because and just

because something is legally permitted

for statistical offices it does not mean

that it's ethically acceptable

especially yes from the perspective of

official statistics. So even though uh

these regulations don't force us to do

things or avoid doing things I think we

have to be more

uh look at this more from the ethical

perspective. So once more a picture

perhaps there should be one more picture

before the rise risks identified that at

AI better understood and then AI's risks

identified guardrails built and

responsible AI adapted that's the

process it should

go and

then short introduction to other modules

of this advanced responsible AI. So we

have total of eight modules uh with this

introduction introduction serving as the

first one. Uh the different modules they

cover various aspects of responsible AI

and explore strategies and guardrails

for more effective risk management. Uh,

additionally, advancing responsible AI

seeks to consider the role of AI

regulations in ensuring responsible

statistical production. The other models

are ethical principles. Uh, this module

will likely be feature

uh a lecturer from academia. Uh the next

one which will be quite soon is actually

AI operationalization. MLOps and Llops.

uh it's uh where ML ops provides guard

rails in various forms and then we have

the

explanability and data privacy and

security and then we have case

studies and then we have this continuous

learning and

adoption that covers

uh uh provides practical advice and tips

for some kind of ongoing develop

velopment. The dates for the other

modules will be announced as the web

femininas are finalized. So after

holiday season which in Finland is quite

long but but uh somewhere in the autumn

the third module will be

ready. Thank you. That was the last

slide.

Thank you Rita for this very great um

presentation.

So uh in the meantime while you were

speaking there were already a couple of

questions coming in in the chat.

Um maybe be while I try to uh switch on

the cameras and the microphone. Maybe Pa

you can try to do that if I don't manage

to do it. Um so maybe in as a starter

let me ask you one question first and

then maybe somebody can come in live.

There is a question from

Shahu Ibraim Sharif. Sorry if I'm

mispronounced the name. Um, can you give

a real world example how you used AI in

a statistical field in in official

statistics and what was the reason for

using

AI and was this uh feature accessible to

the public such such as journalists or

other users? Yeah. Uh yeah. uh at least

at Statistics Finland we have used uh AI

in in classification. We have

uh generated our own AI models in quite

many classification

cases and why we what was the reason for

using uh I

think uh when we use AI based

classification for example we don't need

that much human hands anymore because

going classification

do with text based classification done

uh manually. It's really hard work and

it's a lot of human hours, work hours.

Yeah. Yes. Thank you. So, exactly. So,

um AI is often

used to reduce basically the human

interaction or the human uh decision,

right? to to make

the processing of uh statistical

information more efficient. Um so I have

now enabled the microphones and the

camera. So if you have questions and you

would like to ask them live uh so maybe

raise your hand and then we can give you

the floor.

Um, and I saw that there was a question

by

Tutu again. Sorry if I mispronounced the

name. Uh, do you want to come in or

should I read out the

question?

Yes. And this is not

Can you keep

uh no so the question was do you have an

example use case for a technical or

organizational guard rail? Uh maybe we

can continue with that question. Thank

you. Yeah. Well, that's a good question

because that that's the thing I love

because we have been building uh our

infrastructure technical infrastructure

for for machine learning for some years

and and we have managed to somehow

finalize that and when we have the

infrastructure there in

cloud we we we also use such a such a

components that we uh version

everything. So everything is

reproducible and and and I think this is

this is really one of the

technical technical

uh guard rails, one of the best but of

course I always have to remind that that

when we come to generative AI we are not

that far. No one is that far that we

could say that that all the technical

guard rails are

there.

Yeah.

Um there there's another question by

Tracy Cougler. Tracy, not sure if you

want to come in yourself otherwise I can

read out the question for you.

Hi. Yeah. Um so my question is around

the kind of tension

between transparency and how hard it is

to really understand where the output is

coming from in a lot of AI systems

and thinking about how you kind of

manage that tension or draw that line

between when and how it's responsible to

use systems where you can't always fully

understand where the output is coming

from and if there are circumstances

where you should just avoid using those

systems entirely.

But do do you actually mean more now

explanability than transparency?

Uh both I think. Yeah. Yeah. Yeah.

transparency for example with the models

the self-trained models it's it's quite

quite easy actually when you have the

infrastructure and the processes but the

explanability I think it's always much

much more

difficult so

uh

yeah is

is is there actually a way to for let's

say if you use for instance a large

language model to to identify and

classify uh things right um and you

haven't trained that system yourself you

use something that you basically buy

from somewhere uh yeah you through or

which you use through an

API and you don't really know what's

going on in in that black box. Um how do

you deal with that? How do you explain

that to the users of official

statistics?

Yeah. And at the moment uh there there

are some for example some evaluation

agents. We are not using them. We are

just starting to use them. But to

evaluate the the output of large

language models that's really

challenging and I think no one at this

this earth has really solved the problem

but but to use large uh

products where large language model is

used in that way that there's still this

human oversight that's that's I that I

think it's it's still somehow acceptable

and and can be

somehow let's say there's this human

that's evaluating the result but as a

autonomous

AI I would not use large language

models and that's what the academia says

all the time and and there's this other

other hype that goes goes there in the

private private sector that you can use

large language was all over but when you

know it and understand it more you say

not yet

okay makes sense thank you

I see in hand up that's Amra

uh from UNC please come in

thank you Alex and thank you Rita for

the present

uh your presentation as a whole and the

modules uh as you presented them the

upcoming ones I they are more focused on

having a more a solid obviously

governance structure and governance

framework for the AI to mitigate it

risks and so on and this is absolutely

obviously needed uh I'm just thinking

also of the opportunities of the AI

especially for countries in south who

have very limited

And that would be mostly in the support

AI and the kind of at best collaborative

AI fields maybe. All right. And my

question do you have like a breakdown or

did you make like a breakdown of the

tasks in the production cycle of

statistics where either supportive AI or

collaborative AI could be used?

Yes. Um me and my colleagues we have

worked through the GSBBM

uh quite many times where the AI could

be used and in which role and and yeah

but it's something that really has to be

with last time when we did it that was

nearly a year ago and it's and it's it's

not it has to be updated because the

the AI is changing

so quickly and new things arriving that

it's yeah but I I think it should be

really produced such a GSBB and AI uh

map where you can use it and in in which

role.

Thank you. Um I see there's another hand

up by Zuzu.

uh please come

in. I think we cannot hear you. Maybe

your microphone is muted on your side.

Can you try

again? No, we cannot hear you. Sorry for

that.

Um yeah, let

maybe

uh write the the question into the

meeting chat. Sorry for that. Uh I will

try to enable your microphone in the

meantime. Yeah, there there

were

also some questions uh from the

registration form that we maybe can take

in in the meantime. Um so the we we

talked

already about the use of LLMs so large

language models and uh there there's a

question whether you have considered the

practical use of local large language

models so I guess large language models

that are running on your local machine

in uh and if they have particular

advantages that might have they might

have in terms of

uh energy impact on the one hand but

also in terms of data conf

confidentiality. Maybe you can elaborate

a little bit about that. Thank you.

uh I I easily start thinking about

uh deepseek and R2 when we are talking

about uh locally running

uh models large language models locally

and

and I just read an article this morning

about warnings of this. So yeah, I I'm

not a very big fan of this running these

models locally. So I still think

that common infrastructure for this is

the best one. Although it's

environmentally sometimes a little

bit not so positive thing.

Yeah. Thanks. Uh, Tuzu, you want to try

to come in once

more? Let's try once

more. No, sorry, not not working. Okay,

so that

um so there there was a question about

the use case for technical and

organizational uh guardrails and Tuzu

wanted to ask whether you have

recommendations for real time monitoring

and accountability structures that an

organization can implement for

guardrails in

practice. Um so maybe you can answer

that.

Yeah, we have been testing several

several AI monitors. Uh the problem is

that some of them are really good and

but they are also very expensive. So we

have now last week decided uh that we

start uh uh picking up uh proper

algorithms from from GitHub and and

start building the monitor by ourselves

because they quite often they start they

are six figure

numbers you have to pay for the license

for per year. So it's it's it's really

expensive.

So and with the and with the

accountability it's it's

a I' been discussing with the top

management and and we are trying to

somehow build uh some kind of uh

accountability

uh structure that that everyone would

understand where where their

responsibility lies. that we are not

ready

yet. But I think it's it's really

something that has to be done quite

quickly before there's a there here and

there running and we are not at all

aware who's responsible for the results

and and perhaps when the when we start

putting out totally biased biased

figures. Oh yeah,

thanks. There was also a question by

um Caroline Wood who was saying okay so

recently we were told that open AI

smartest AI model disobey direct

instructions to turn off and even

sabotage shutdown mechanisms in order to

keep working. So uh the the question

there was can guardrails really be

built? Is it safe?

Uh uh can guard rails be built and is it

safe? Is that the question? Yeah. Yeah.

So can can guard rails really be built?

Is this something that that works? So

that was kind of the question there.

Yeah. I I I can think about for example

data privacy and security

principles. Uh I'm I'm responsible for

that module also but of presenting that

module also and it's it's been really

hard to find anything new. I mean we we

have already this anonymized

anonymization and and and and so on and

and it's it's not that easy. I mean,

yeah.

Yeah. Some Yeah. It's the infrastructure

and the organizational uh regulations

and all these very familiar old things

that when you combine these I think then

you can build some kind of guard rails.

But I recognize that it's it's it's

difficult to al already talk with our IT

people because they don't although they

are gurus in in cloud things for example

but they don't understand the what the

the AI brings when you bring a put on a

large language model into a cloud what

what's the what's the combination then

it's it's difficult

Yeah. Um, there's also a question by

Kristoff Bon. Uh, Kristoff, you want to

come in?

Hello. Good evening. Thank you for this

very uh stimulating uh presentation. I I

I it gave me a lot of question. I have

now more question I think after this

presentation than before. Also I think

this is this is a sign that it was

really really good. Um I I have some

sort of pro provocative question. We we

always talk about explanability,

transparency and reproducibility in

black and white terms while it seems

that there are lots of gray zone already

uh in a sense that

um I mean it's difficult already to

explain and it depends once again to wh

um some models right uh we we are using

imputation we're using some machine

learning models inside the NSO um I'm

not sure we completely understand And

then we can un fully explain already

what is going on when you classify

something as you know a different uh

different classes. So um the question uh

I have

is how we decide the level of gray is

acceptable and and and who decide that

is it does should it come from the law

where somebody has the right to know why

he has been classified in as

um in a category or should it come from

a technical side and and what is the

role of NSO uh here how how to define

this level. It's it's it's a big

question I imagine. Yeah. Yeah. Yeah.

Yeah. Yeah. It's really is.

Yes. Yeah. I think we are

mainly all the time nearly at that gray

zone. I

think because I I for for me personally

explainability and all these methods

they are they are really difficult to

understand. I'm a computer science. I

say that every time when when we start

talk about those methods that who should

decide what's the right level. I think

this is something that has to do with

the AI governance as a

whole which is uh much more than just

this implementing responsible

AI and it I think it comes there we are

at the statistics fin the beginning of

that journey just trying to identify

what kind of governance structures we

need. But what what I think what I think

it's important that our stakeholders we

can tell something if they ask we we

just we cannot answer that it's a AI

that produces these figures. We don't

know how and

why. Yeah. Yeah. You should trust your

models, right? And and this is probably

the best answer you can say. You're

trusting because there's human in the

loop. Yeah.

Yeah, thanks. Um, so I wanted to give

another chance uh for people to ask

questions. So please if you want to come

in either raise your hand or type it

into the chat, that's also fine if you

cannot speak.

Um, and I see one more question here

from Carolene Wood. Carolene, you want

to come in?

I can just read my question. Yeah,

that's fine. It's a question I'm

starting to to ask myself in the past

few months because it's maybe ethical,

but if we combine the risk of AI to

disobey human orders and with its

environmental impact and we see more and

more that AI is diminishing the need for

human work. You said it yourself read

less words less hands involved in the

statistical work. So is it worth this

risk on a large scale to to to use AI at

all? Why is humanity embarking on AI? I

know it's a bit of an ethical large

question.

Uh I think we just have to at

least it's it's very clear at Statistics

Finland. we we have to produce

statistics meet with much less

people.

So there are other things that we should

be thinking also but that's so clear at

the moment. So it's it's the tool we can

reduce the the amount of people

producing

statistics. It's not it's not very it

does not sound very nice.

I guess I mean uh in addition

to well of course you you have

efficiency gains

uh through the use of AI but I guess you

would also have um maybe a better

quality of your statistical output in in

principle. Right?

In principle, yes. But but the the the

monitoring and all this

infrastructure, it has to be really good

that you more you use it more you have

to evaluate and monitor the results and

with large language models these things

are not ready. So the expectations are a

little bit too high at the moment.

So I said in some meet in some meeting

uh last year that when do I expect for

example this aentic AI is is there in in

statistical production I asset after 10

years. So

it's not there. Yeah it's

all

right. Okay. There was just a comment

from Christina goodness. I'm so glad

someone just asked that question. So

confirms this. So last chance to ask a

question now. Um

otherwise if there is none so really

last chance raise your

hand. Okay, I don't see any. Well, in

that case, I would like to ask everyone

if you can please put your camera on for

a few seconds and uh also maybe your

microphone and give Rita a round of

applause. Please join me in doing

that. Thank you, Rita. Thanks so much.

Thank you so much, Rita. Appreciate it.

Thank you, Rita.

Thank you.

Thanks so much. Thank you. Uh I just

want to say one more thing uh before you

all go. So we will have our next webinar

also related to AI. It's uh on the topic

of AI operation operationalization

MLOPS and LLM ops on the 17th of June.

Uh so please sign up to for that

and uh join us next time. I will put the

registration link in the chat. Thank

you.

Thank you. Bye. Thanks Rita. Thanks

everybody. Yeah, please. Bye.

Thank you. Thank you. Thank you so much.

Bye-bye everyone.

Interactive Summary

Ask follow-up questions or revisit key timestamps.

This presentation outlines a framework for managing artificial intelligence risks through the implementation of guardrails and responsible AI principles, specifically tailored for official statistics producers. It explores the diversity of AI roles—supportive, collaborative, and autonomous—and identifies various risk categories, including technical, ethical, and societal. The speaker emphasizes that adopting AI requires a delicate balance between maximizing benefits and minimizing risks, highlighting the necessity of transparency, accountability, and fairness to maintain public trust and comply with evolving legal frameworks like the EU AI Act.

Suggested questions

5 ready-made promptsRecently Distilled

Videos recently processed by our community