What Will Protect Our Code from Artificial Intelligence?

MUSICAL INTRO

APPLAUSE

-Dear fellow programmers,

tell me, are you afraid of artificial intelligence?

Because I am afraid of it.

I am afraid of it.

But not for the reasons you might think: not because it will get me fired,

but because it will not. Instead, it will sit next to me

and write code.

This scares me a lot.

I will tell you about my fears and explain,

how I prepare for them, what countermeasures I take.

First, a brief overview of what makes life hard for all of us programmers,

besides management, of course, and how we feel about it.

You are programmers, I understand, yes, most of you?

Who loves their profession? Raise your hand.

Wonderful. I do too, but there are things that hinder us.

For example, I have divided them into three categories,

let's quickly go through them.

The first - defects, bugs.

We write programs, make mistakes,

for example, in this small program, the error is highlighted.

You understand what this will lead to. We have defects at runtime.

Defects come to us in the backlog, we fix them,

and they certainly annoy us.

But maybe it's not entirely right to view defects

as an annoying factor.

Many years ago, in a wonderful book, Steve McConnell explained

that for every thousand lines of code,

programmers make between ten and twenty mistakes on average.

Good programmers, bad programmers, we all make mistakes.

Mistakes are an integral part of the development process,

whether we want it or not.

It makes sense to change our attitude towards them,

to approach them calmly and, moreover, with love and joy.

If there are defects, it means we are writing those thousands of lines of code.

We recently conducted a small study and looked at

how many mistakes programmers now make

per thousand lines of code in open-source projects.

Twenty years ago, it was between ten and twenty.

Now, look at modern Open Source products

in different programming languages.

The right column shows the same figure,

calculated as the same ratio:

the number of defects per thousand lines of code.

You can see that the numbers are different.

It's not from ten to twenty, but it's not zero.

And it's not a million.

We are roughly in a certain range.

Some have more, some have less.

I am surprised that the Linux kernel has the smallest number,

while VSCode has the largest.

What is your number in the project?

How many errors do you make per thousand lines of code?

I'm sure you don't know this number, and that's not good.

It's worth paying attention to such a metric.
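
As a rough illustration of the metric discussed above, defects per thousand lines of code can be computed as follows. This is a minimal sketch: the function name and the sample numbers are invented for the example and do not come from the study mentioned in the talk.

```python
def defects_per_kloc(defects: int, lines_of_code: int) -> float:
    """Return the number of registered defects per 1,000 lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects * 1000 / lines_of_code


# Hypothetical project: 340 registered defects in 85,000 lines of code.
density = defects_per_kloc(340, 85_000)
print(f"{density:.1f} defects per KLOC")  # prints "4.0 defects per KLOC"
```

Tracking this number over time matters more than its absolute value, since how defects get registered differs from team to team.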

So how many mistakes are you making?

It's great if you are making them.

Treat mistakes as fuel that drives the development team.

The more errors you find in your code

and the more of them you register,

the fewer of them the users of the product will find.

So mistakes are a good thing,

but they are still one of the annoying factors.

The second is security holes.

You, as programmers of a financial organization,

understand how important this is.

What is wrong with this code?

The lines at the bottom.

Why did I bring it up as an example of mistakes?

Louder?

Yes, concatenation.

And why is that bad? Concatenation and...

SQL injection, that's right.

In place of the variable x, someone can substitute

a fragment of an SQL expression

that splits the whole query into several queries,

and those can contain anything.

This is a security hole

that does not lead to a functional problem.

The product will work, but at some point

someone might take advantage of the error

and steal or destroy our data.
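
The slide with the vulnerable code is not part of this transcript, so here is a minimal sketch of the same idea using Python's standard `sqlite3` module. The table, the function names, and the data are invented for the example. It shows a simpler variant of the attack than the one described above: the injected fragment changes the `WHERE` condition instead of splitting the query into several.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def find_unsafe(name: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_safe(name: str) -> list:
    # Safer: the value is passed as a bound parameter and is never parsed as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "x' OR '1'='1"
print(len(find_unsafe(malicious)))  # 2: the condition is always true, every row matches
print(find_safe(malicious))         # []: no user has that literal name
```

Both functions work fine for ordinary input, which is exactly why this kind of hole goes unnoticed until someone exploits it.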

Again, in a wonderful book from 20 years ago,

the authors said that security errors in code

are an inevitable part of the development process.

We will make such mistakes.

Our future is filled with these problems.

I recommend this book to

anyone who is in one way or another connected to software

for the banking sector.

And the third thing that annoys us all

is complexity; I have grouped several problems together here.

Roughly speaking, it's dirty code.

This is what our conference is about.

Dirty code is complex code,

it's code that duplicates itself,

and code that has what is called

"Code Smells" or "Antipatterns."

You know very well what that is.

For example, the code in the bottom right.

No one wants to read or maintain it,

or understand it, at least not me.

It's something complex, something unclear,

written in violation of many programming rules.

Complexity was described beautifully by a good author,

in roughly the following way.

If you, as a programmer,

believe that complex code,

written by you, is your achievement,

if your code is hard to understand

and only you can make sense of it,

then you most likely misunderstand your profession.

Complex code is a sign of a bad programmer.

A good programmer writes simple code,

which is easily understood by them,

their colleagues, and even a junior programmer.

Let's strive to write simple code.

That's all for now, the intro to the main part.

The second is duplication.

The author of a good book,

one that has long since become a classic,

said that duplication is the main enemy

of a well-designed system.

The main one.

If you took a piece of code from one place

and did a copy-paste to another place,

then you have opened the door to the main enemy.

It will be difficult to maintain such code,

because you will change it in one place

and forget that the other place

needs to be changed too. If you spread duplication throughout the codebase, you will end up with many problems.

The second problem is duplication. And the third is technical debt or various anti-patterns.

Technical debt - again, a wonderful book, highly recommended. Technical debt is what

accumulates in a product as a result of careless programming. Somewhere you use the wrong

pattern, somewhere you don't use a design pattern, somewhere you wrote it too complicated,

somewhere you used the wrong algorithm, which is fine for now, but in the long run, it should be

fixed, and you need to treat your code like a garden that you take care of. I told you all this

to show that we all live, as programmers, in a state of constant stress in

as a result of low-quality software, due to technical debt, bugs, security leaks, and

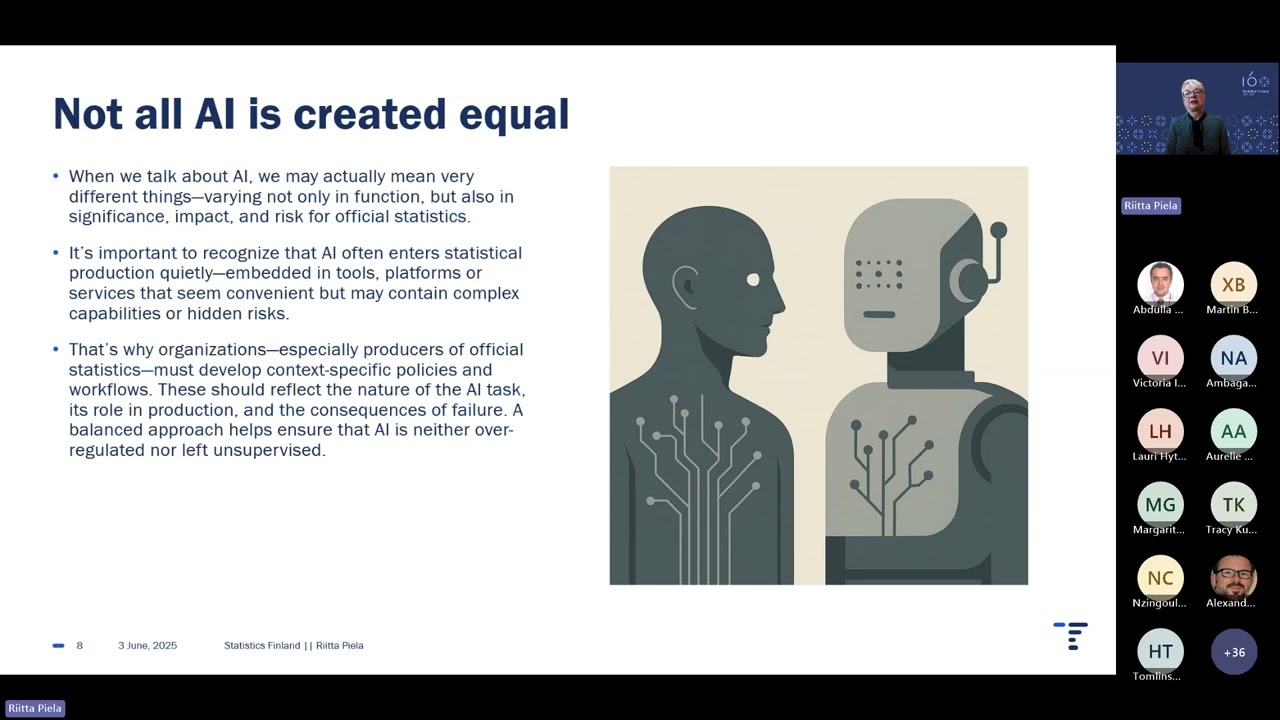

so on. With the advent of artificial intelligence, the situation is worsening. Let me explain why. Robots

are starting to program alongside us or instead of us. They write code, they review it, they complete it,

they help programmers finish code blocks directly in the IDE. Who is already using Copilot or

something similar? Some AI systems. You understand perfectly what I'm talking about. So robots

are alongside us, and the problems we've had for the last 50 years are starting to worsen.

Let me explain why. First of all, we think that robots write correct code. We trust machines. We do not expect a machine to make mistakes: a calculator cannot be wrong, and a compiler cannot be wrong. When we enter one number into a calculator and multiply it by another, we do not double-check the result. We know it calculated correctly. The same goes for the compiler. If you give it a program in Java, you know that it generated the correct bytecode. You do not check it.

And artificial intelligence works like a calculator too. At least, it looks like one. We input something, and it immediately gives us an answer, most of the time without hesitation. However, research says otherwise. An article from last year analyzed code generated by artificial intelligence and then reviewed by a human. It shows that the code produced by an LLM is of low quality, contains errors, and is prone to crashes and hangs, much more so than code a human would write. Another study, from this year, shows that a third of the code samples written by machines still have serious flaws.

But remember, we still consider it a calculator that does not make mistakes. If it were a calculator that we did not trust from the start, there would be no problem. We would know that we need to check it. But we think it is a machine that cannot make mistakes.

Imagine that you hired a junior programmer who pretends to be a senior programmer. He is grown-up, heavyset, in glasses, with a beard, and seems to know everything. But his knowledge is at the level of a sophomore, and he writes code along with you and sends it to you. And you believe him, because it's hard not to. You have a high level of trust in a senior developer. But inside, he is a junior developer. That's how an LLM works right now. Behind the guise of a senior developer hides a weak programmer. And this is a serious problem. Imagine that this coder sits next to you and the two of you contribute to the codebase together. You are trying hard, while he makes mistakes at the level of a junior programmer.

Do you understand what threats this creates? And there is a third article, also from this year. If you assign an LLM to check the code, that is, to conduct code reviews, programmers quickly stop feeling responsible, thinking that the machine checks everything thoroughly. Again, imagine that a developer is sitting next to you. You send him the code. He checks it and says "very good, very good." But it turns out he is a junior. He doesn't even really understand what you are writing. And you have relied on him for six months. After six months, you realize that you have been deceived. Your expectations were based on a wrong idea of your partner's level.

Second. It turns out that LLMs have a negative influence on us. Research from this year shows that when writing code, LLMs bring in a programming style that they like. Not yours, not your repository's, but the style they were trained on. And they were trained, as you can imagine, on every code example they could find on the internet. They processed all this mess and learned something. They have their own programming style, their own understanding. They come into your project and program the way they want. Let's return to our analogy: imagine that the junior developer sitting next to you in your team, the one pretending to be a senior developer, encourages everyone to write the way he thinks is right. He tells each programmer: I know exactly how to write, let's program in my style.

And people start to listen to him. As a result, your codebase will lose quality. You will lose the style, you will lose the principles that you have developed within the codebase, you will lose yourself. And this is inevitable, the researchers say.

The third is data leakage. Again, let's use our analogy. Imagine that this junior developer who pretends to be a senior, and who gets everyone to do things his way, also talks to a competitor at the same time, telling them what you are programming here and how.

Imagine: you open your IDE and write code in it, and a colleague from the neighboring department calls you and says that something in production is not working. To check, you paste the login and password for the production server into the code, just to run a test on your local computer and verify that the request gets there. You don't commit these passwords to the repository; you are an honest, careful programmer. But you click the Copilot button and ask it to help you write something. What does it do? It takes all your source code, along with the login and password, sends it to the OpenAI server, and receives a response from there. Let's hope that OpenAI immediately deletes your request. But that most likely does not happen. Most likely, your request remains on the server, whether it's OpenAI or GigaChat.

Look at what Gemini writes in its policy about this. They do not say "we do not save your data." I read the entire policy carefully. Nowhere do they state that they will immediately delete your data along with the login and password. They will not delete it. They simply say: don't enter confidential information. It's your problem if you did. And we will take it and use it. If they take your requests and use them to train the model, then the login and password will go into the next version of the model, and the whole world will be able to access a login and password that ended up there by chance. You didn't even click a "send" button. You just asked Copilot how to fix the algorithm on lines 15 and 16. But lines 50 and 60 had a password, and it was sent to the server.

What does GigaChat say on this matter? GigaChat is even stricter. It says it is prohibited to send confidential data to its server. Google says "do not send," while GigaChat says "we prohibit sending." But neither of them says: if you send it, we will delete it. So you understand that you are sitting next to a junior developer who constantly tells a competitor what you are working on.

And finally, the pressure from management. Management thinks that robots can write code faster and better than humans. And they put pressure on programmers. They say: why are you taking so long with this problem when I can ask ChatGPT and it will answer me in five minutes?

Why do I need a programmer sitting here for five days working on one problem? Management thinks that we are just like this impostor, that in quality and in level we are the same as a junior developer pretending to be a senior developer.

I conducted a small survey in my Telegram channel. It may be a little hard to see. The question was: programmer, what is the difference between you and a robot that can write code? I asked programmers. There were five answer options. Two of them: the first is that I write code better and make fewer mistakes; the second is that I write cleaner code. The other options are different: I care about design; I go to parties, and a robot can't do that; and the last one is that I'm not better, I'm just more expensive. So the other three options are more of a joke and do not relate to the quality of the code. What conclusion can we draw from this?

Only a third of programmers believe that they are better than robots. Two-thirds engage in self-deprecation. We are convinced that we are worse than machines. And this was a question for coders; I asked programmers. So this is what people themselves think: they believe they are worse than machines. Management doesn't even need to pressure them. Management just walks into the room, and we are already ashamed. We already believe that yes, indeed, ChatGPT will do everything better. That is the result. I was extremely surprised. I thought people would say: no, we write better. After all, research shows this; we know from the numbers, from the articles, that people still write code better. Maybe in five years the situation will change, and such a result will make sense. But right now it doesn't. So when management pressures us, we will bend, ashamed that such a knowledgeable specialist sits next to us while we program so slowly.

What should we do? We are approaching the most interesting part.

What do we do about it? How do we fight the robots that will, one way or another, be introduced into your teams? Either you will do it yourselves, adding assistants to the IDE and to the command line, or management will come and, today or tomorrow, force us to use robots that give suggestions, help, and so on. How do we protect ourselves from their negative influence? How do we ensure that they do not ruin the codebase? How do we make sure they do not introduce garbage into the code that we will then have to clean up ourselves? I see five ways. I'll go from the simple to the complex.

The first one. The team needs to learn to do code reviews in small portions. In most teams, unfortunately, this does not happen. In most teams, my experience shows, people accept large pull requests and expect each pull request to contain a large, cohesive block of work. If you do it this way, the LLM will do the same. It will send you large portions of code, and you won't be able to check what this impostor wrote there. You won't have the opportunity to do a quality review. So before the robots arrive, we should start writing code in small portions.

Google conducted a study. True, it is already quite old, from 2018, so seven years have passed, but I think not much has changed since then. Google believes that there is a direct correlation between the size of a pull request and the quality of code review. The less code there is in a pull request, the better you can review the robot that tries to insert faulty code into your repository, with no conscience, no responsibility, and no emotional attachment to your team. To combat it, do small code reviews, small pull requests.

What does "small" mean?

Another study is much older,

almost 12 years old. It is an analysis of open-source repositories

in which the authors showed what the average size of a pull request is.

44 lines of code, 25, 32, sometimes 263, which is a lot, 78.

This is the average amount.

It is clear that there are super small pull requests, fixing 2-3 lines

and immediately merging.

But on average, we should still strive to reduce the size of

the pull request.

In my understanding, more than 100 lines in a pull request

is already a risk.

If you allow programmers to make large pull requests,

LLMs will see how you do it and will

do the same.

So adopt the practice of small changes.

Small changes will give you the opportunity to protect yourself

both from clueless junior programmers and from the robots that resemble them.
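
One way to turn the 100-line rule of thumb into something a team can check automatically is to count the changed lines in a unified diff. The sketch below is only an illustration: the function names and the sample diff are invented, and the header check is a simplification (a removed line whose content itself starts with `--` would be miscounted as a header).

```python
MAX_CHANGED_LINES = 100  # the rule of thumb from the talk; tune it for your team


def changed_lines(diff_text: str) -> int:
    """Count added and removed lines in a unified diff, skipping file headers."""
    count = 0
    for line in diff_text.splitlines():
        if line.startswith(("+++", "---")):
            continue  # file header lines, not content changes
        if line.startswith(("+", "-")):
            count += 1
    return count


def is_small_enough(diff_text: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """Return True if the diff stays within the allowed number of changed lines."""
    return changed_lines(diff_text) <= limit


sample_diff = """\
--- a/app.py
+++ b/app.py
@@ -1,3 +1,4 @@
 import os
-print("hello")
+print("hello, world")
+print("bye")
"""
print(changed_lines(sample_diff))    # 3
print(is_small_enough(sample_diff))  # True
```

A check like this could run in CI and flag oversized pull requests, whether they come from a person or from an assistant.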

The second.

Test coverage control.

Well, probably everyone writes tests, right?

Although I remember when I spoke at conferences 5-7, 10 years ago,

when asked who writes unit tests, 5 people in the room raised their hands.

Who writes unit tests now?

Don't scare me.

Well, many do, right?

Good.

So, many do write them.

But now I will ask you a second question, and you will see how

few hands will be raised.

Who among you controls the build based on the percentage of test coverage

of the source code?

Who controls test coverage?

One, two, three, well, here are four.

Five.

There is such a metric.

Test coverage means the percentage of the source code covered

by tests during the execution of a full test cycle.

The higher the percentage of code covered by tests,

let's say in simple terms, the more lines

of code are involved while the tests are running,

the better.

Ideally, 100%.

Ideally, all your code should be exercised during testing.

All lines of code should be executed in one way or another.

What is this percentage?

Few people know it, and even fewer control it.

What does it mean to control?

It means not allowing programmers to go below a certain threshold.

If we have, for example, 80% coverage in the repository,

we will not allow any programmer to bring it down to 79%.

If a programmer brings it down to 79%, we will reject their

pull request and require them to add tests.
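
The rule just described can be sketched as a simple gate. The function names and the message format below are invented for the example; in practice the check usually lives in the coverage tool itself (coverage.py, for instance, has a `fail_under` setting), and this sketch only shows the decision logic.

```python
def coverage_gate(current_percent: float, threshold_percent: float) -> bool:
    """Return True if the change may be merged, False if it must be rejected."""
    return current_percent >= threshold_percent


def check_pull_request(current_percent: float, threshold_percent: float = 80.0) -> str:
    """Produce a human-readable verdict for a pull request."""
    if coverage_gate(current_percent, threshold_percent):
        return "accept"
    return (
        f"reject: coverage {current_percent:.1f}% "
        f"is below {threshold_percent:.1f}%, add tests"
    )


print(check_pull_request(80.0))  # accept
print(check_pull_request(79.0))  # reject: coverage 79.0% is below 80.0%, add tests
```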

Many consider this a good practice.

There is another opinion, too: I know programmers

who believe there is no need to control

coverage.

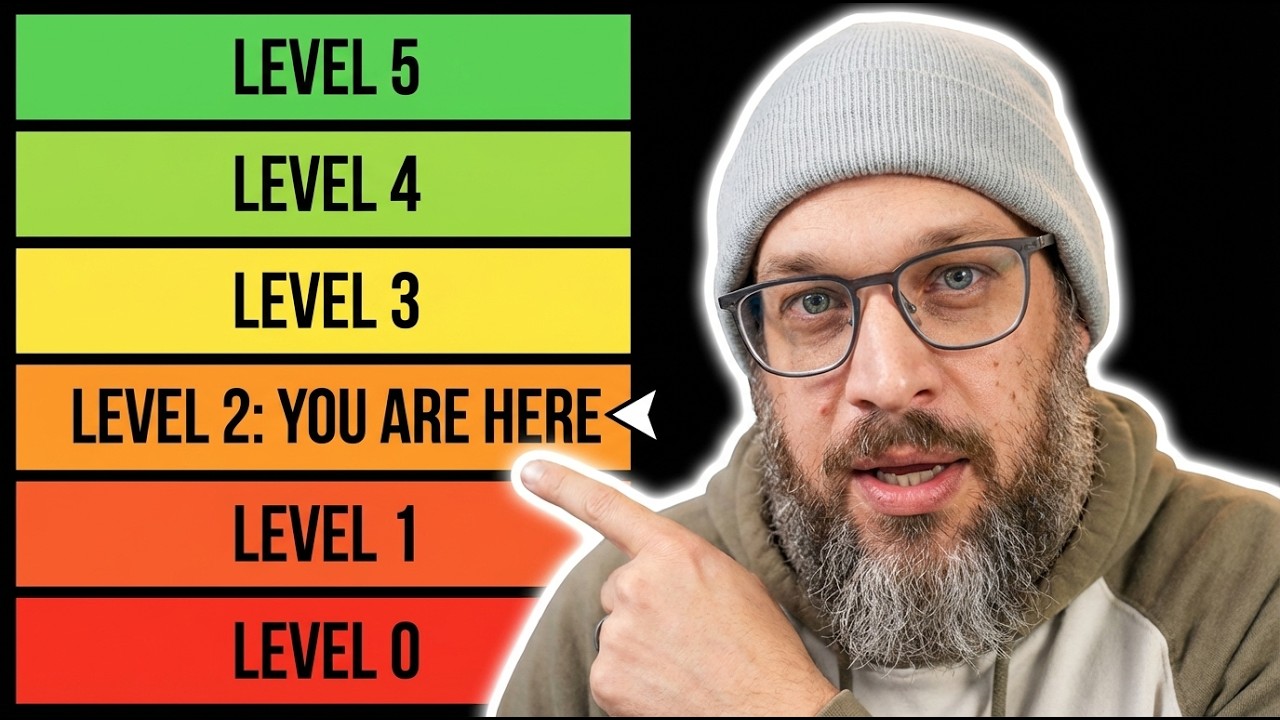

Let's listen to Google.

In an article from 2019, Google says:

we do not enforce any mandatory

coverage thresholds.

A threshold is what I just told you about.

They say they do not enforce one.

Okay, they do not enforce it.

However, right in the same article,

there is a voluntary notification system that defines

five levels of thresholds.

They do not enforce it, but there are five levels.

What are these five levels?

They are defined in the same article.

If coverage automation is completely disabled, as it is for many,

that is level 1 in your project.

If you are using coverage automation, that is, you are measuring

the number, but you do not prevent programmers in any way from

going below it, you have level 2, so to speak,

in your repository.

And if you have 90% coverage, then you have level 5.

This is how Google does it.

I recommend that you do exactly the same.

It doesn't have to be 90, but you need some number.

Set up a system for collecting information about test coverage, and

use this information to decide

whether to accept changes or not.

When the robots come to you, impostors pretending,

that they can do everything, they will send you code with weak coverage,

and you will be able to stop them because the system will already be

ready for this.

You will be ready for this threat.

And the robots will be forced to write a sufficient amount of

tests.

When they write a lot of tests, they encounter

their own mistakes and are forced to fix them.

If a robot is given the freedom to write without tests or with

low coverage, it easily handles the task, but

makes a lot of mistakes, far more than if we

were controlling it.

Third.

Coding standards.

An interesting thing.

It's called a "manifest."

I don't know if you've heard of this modern

term?

It's called "agent coding manifest."

I don't know how to translate it correctly; this is an example from

our repository, there's a link on GitHub, this is an example

of the text we use.

What is it about?

When you work with a robot, with an LLM, it edits

your code with only what it knows:

the programming principles that it is familiar with.

It doesn't understand your principles.

It doesn't know, for example, that you like to name variables

in camelCase or, conversely, that you prefer kebab-case.

It doesn't know that.

It can, of course, look at your previous code and

draw conclusions from that, but in general, it approaches

you with a blank slate, regarding your repository.

You can write a so-called coding manifest, in which

you outline all your programming standards, telling

it how you want it to program.

Imagine that a Junior Developer comes to your project, and you

are training them at the start, saying, "Sit down,

grab a coffee, and we will spend 3 hours explaining how we write

in Java."

Not how to write in Java in general, but how we write

in Java.

So you need to create such a document and place it

in the repository.

This is necessary; if you don't do it, the Junior Developer will

work with you blindly.

They do not understand your repository and your style.

Creating such a document is extremely challenging.

It will take time; you need to write it in a way that is

concise and compact, and at the same time,

it should cover everything the Junior Developer needs to know about

your programming style. There is a repository I recently

found where leaked or stolen prompts are published,

or so-called system prompts that are used by

ChatGPT, Anthropic, and so on. Open repositories

on GitHub. Check there to see how such coding manifestos are written.

Look at the style they are written in. Take the most valuable parts from there, compile your own coding manifesto, and place it in the repository. In this way, you will prepare for the war with the robots.

Many of you probably know the famous tweet, published not long ago, stating that the hottest programming language today is English. Looking at the situation with the coding manifesto, this statement holds up. You will indeed have to program in English. You will have to learn to write in English what you would otherwise explain to a programmer who came to your office with gestures or code examples. Imagine I come to your project and ask: well, how do you program here? Tell me. And you start to teach me. Well, you know, we place the files like this, we name the classes like this, and we name the methods not like that but like this. These are the patterns we use, and these we don't use; we tried them, and they didn't work for us. You would be putting all this into the programmer's head day by day, maybe month by month. For an LLM, you need to do it quickly, in the form of a manifesto. Try writing it right now. It will be difficult without it.

Next: style, programming style, style checking. When you write code, there is a certain style that you adhere to. Who here thinks that style in programming is not important, and what's important is that it works? Raise your hands. Wonderful!

There are no such people, although maybe you are just afraid to raise your hands, because we are at a conference about clean code. But there are such people, I have met them in my life. They say that style doesn't matter, everyone has their own: I wrote this class in my style, and the programmer next to me wrote that one in his; he has tabs, I have spaces; he has line breaks arranged one way, I have them another way. It doesn't matter, they say. But it does matter. And you agree with me, since no one raised their hand.

So style should be controlled not only through conversations with programmers, not just through a mutual agreement about how we program, but with tools. Not robots, the robots are the ones coming for us, but tools. There should be tools, configured by you, that punish programmers for violating the style. Here is a short list of the most popular style-checking tools. You can take the ones for your programming language, or take some others and configure them to your needs. You all probably know this, these are obvious things, but my point is that the stricter you configure the style control tools, the easier it will be for you to deal with the robots. Again, the robot comes to you not understanding your style. It needs to hit a barrier. It needs to write code and have someone slap its hands and say: this is not how you format it, this is not right. It will understand how to do it correctly, but it needs feedback, and you won't have the time, strength, and energy to manually explain every line to the robot so that it rewrites it. You should have a style control system in the repository. Pick one of these.

And look at the interesting numbers here: the number of checks, or rules, inside each style checker. A huge number. In Clang-Tidy, imagine, almost 700 rules. You won't keep 700 rules in your head, and you won't be able to explain to any robot, or to any programmer, what he did wrong while keeping 700 rules in mind.

And a style checker can, if you, of course, enable all the rules.

I recommend you use a style checker with the strictest possible configuration. When you bring it into the project and integrate it, turn the configuration up to the maximum. Let it be painful, let the programmers complain, let there be difficulties at the start. It's better to go through them now; then you will be fully armed when the robots come and start trashing your repository. And this is exactly what they will be doing.

Imagine once again a junior who comes into your project pretending to be a senior and starts writing in the style he wants. And no one stops him except you, the one doing the code review. He sends you a huge number of files, say a thousand, formatted haphazardly, but the tests pass. You do not understand what the design is, what patterns are there, how he did it. But the tests seem to pass, so you accept it, and you have what you have. And there will be no turning back, since he has no conscience and you can't punish him for this.

And here are a few exotic style checkers that might be useful for you to check out.

For example, ShellCheck. Who has ever used ShellCheck? One person, two, wonderful. This is a checker for bash scripts. And who writes scripts in bash? So you write scripts in bash, but you don't use ShellCheck. Try it. It will tell you so many interesting things about your bash scripts and show you such interesting mistakes that you make. I get a lot of pleasure from programming in bash now, after spending a whole year struggling with the ShellCheck rules. In the end, I understood what it wants from me: what it considers correct, necessary, and safe programming in bash.

The same goes for checkers for other kinds of files. For example, Dockerfiles. We all write Dockerfiles, but how many of you have used Hadolint? It is a checker for writing Dockerfiles in a more competent, higher-quality way. Not just formatting, not just where to place spaces, but which commands to use, in what order, what can be called and what cannot. Checkers will help you, protect you from...

And finally. The last thing we need, the most difficult, I don't have exact answers for you.

This is an open question that needs to be explored, as I understand it.

Description of architecture. It is necessary for the knowledge of developers and architects in the project to somehow be translated into English.

So that the LLM can understand how you program.

I will give a specific example. Look at two blocks of code, both written in C++.

In the left example, we are trying to read some content from a file.

If we fail, we return -1. If we succeed, we return 0.

Quite understandable error handling.

In the right case, in the right example, we are also trying to do something with the file, only in this case to save the file.

If we fail to save, we throw an exception.

Both files are functional. They may have been created by the same programmer.

Perhaps even within the same time frame.

But they are a vivid example of an inconsistent method or way of handling errors.

In one part of the program, you handle file system errors one way, in another part, you handle them differently.
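
The two C++ snippets from the slide are not part of this transcript. As a stand-in, here is a hypothetical sketch of the same inconsistency written in Python; the function names and the path are invented for the illustration. One function reports a failure through a return code, the other through an exception.

```python
from pathlib import Path


def read_content(path: str, out: list) -> int:
    """Style A: report failure with a return code (-1 on error, 0 on success)."""
    try:
        out.append(Path(path).read_text())
    except OSError:
        return -1
    return 0


def save_content(path: str, text: str) -> None:
    """Style B: report failure by raising an exception."""
    try:
        Path(path).write_text(text)
    except OSError as err:
        raise RuntimeError(f"cannot save {path}") from err


# The caller now has to remember which convention each function follows.
buffer = []
print(read_content("/no/such/dir/file.txt", buffer))  # -1
try:
    save_content("/no/such/dir/file.txt", "data")
except RuntimeError as err:
    print(err)  # cannot save /no/such/dir/file.txt
```

Each function is reasonable on its own, which is why neither a style checker nor a reviewer looking at a single file would object.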

No style checker will catch you.

No code reviewer will catch you. And no LLM will catch you on large volumes.

If you, of course, show the LLM these two short snippets of C++ code, the LLM will say, yes, you have inconsistency here.

But if you open a repository with a thousand files, in one place it will be one way, and in another file, far from it,

it will be different, you are defenseless. No one will help you catch such an error.

This is a defect at the architectural level. And modern science and technology are powerless against this, well, almost powerless.

I will show you something. But this is, of course, trivial compared to the threats we have.

That is, programmers write as they want. And now imagine that you invite a junior-developer robot to your project,

one that pretends to be a senior developer, and you say: write me a third snippet in a third file.

And it writes it in some third way. It makes another mistake of the same kind, again handling errors in its own way.

How will you catch it? How will you be able to counter this? Because both pieces of code will easily pass code review.

Unless, that is, you are an architect who keeps a finger on the pulse and closely monitors every change.

Then you will see that this is not acceptable: "I remember that we always return -1 in case of an error,

so the right-hand code, no, no, no, don't do it this way." But most likely you won't catch it manually.

That is, we need some tool or mechanism where we could describe, in text form,

how we handle errors, for example, so that the LLM reads it and understands that this is the only way it may be done.

Our architecture is like this. That is, it is necessary to invent some language for describing architecture,

or maybe some tools for describing architecture that could be placed in the repository

and referred to each time when working with robots. What language is this? I don't know, it seems it doesn't exist.
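Until such a language exists, one narrow rule can at least be turned into a check that runs with the tests. The sketch below assumes a hypothetical project convention, "errors are reported with return codes, never with exceptions", and flags every file whose text contains the `throw` keyword. The function names are invented for illustration, and the match is purely textual, so it will also fire on `throw` inside comments or string literals.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// True for characters that can be part of a C++ identifier.
bool is_ident_char(char c) {
    return std::isalnum(static_cast<unsigned char>(c)) || c == '_';
}

// True if `kw` occurs in `text` as a whole word, not inside a longer identifier.
bool contains_keyword(const std::string& text, const std::string& kw) {
    for (std::size_t pos = text.find(kw); pos != std::string::npos;
         pos = text.find(kw, pos + 1)) {
        const bool left_ok = pos == 0 || !is_ident_char(text[pos - 1]);
        const std::size_t end = pos + kw.size();
        const bool right_ok = end == text.size() || !is_ident_char(text[end]);
        if (left_ok && right_ok) {
            return true;
        }
    }
    return false;
}

// Hypothetical rule: no file may use `throw`.
// Input: (path, file text) pairs. Output: paths of the files that break the rule.
std::vector<std::string> files_violating_no_throw_rule(
    const std::vector<std::pair<std::string, std::string>>& files) {
    std::vector<std::string> violations;
    for (const auto& file : files) {
        if (contains_keyword(file.second, "throw")) {
            violations.push_back(file.first);
        }
    }
    return violations;
}
```

A test would load the repository's sources into the `(path, text)` list and fail when the returned list is not empty. This covers a single convention only; it is not a general way to describe architecture.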

Well, for example, there is a rather old study that says that if you have a good README file,

then your repository tends to be more popular.

Why am I bringing up this study? It seems that the README file is the place where you can describe the architecture.

That is, you have a starting readme file in the repository, which, on one hand, people will read and understand,

what is said there and how your repository works. And on the other hand, robots will also pay attention to it.

And it will be difficult for the LLM robot to go against what is stated in the readme file. How does the LLM work?

It tries to find an answer to the question you ask, minimally deviating from all the context that surrounds it.

That is, you ask something, it builds an answer so that it matches as closely as possible to what you asked.

And with what it knows beforehand, and with what you have in your repository.

It tries to give you an answer that is closest to where we are among all these coordinates.

The README file will help you. And finally, there is a tool called ArchUnit. Who has ever heard of it?

Hardly anyone. Only two people have heard of it. With me, that makes three. We are trying to use it here and there, and it can help in some way.

For example, we can say that from the Source module, you can access the Target module, but you can never access the Foo module.

This rule can be formulated as a unit test. That is, you run a unit test, written in JUnit, which scans the entire bytecode.

And check in your bytecode whether there were accesses from the Source module to the Foo module. This is a picture from their website.

If such an access occurred, the unit test will fail. Quite a good way to control.
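ArchUnit itself is a Java library that inspects bytecode, so the following is not ArchUnit. It is only a toy C++ analogue of the same idea: a rule such as "Source must never access Foo" expressed as a function that a unit test can call. It assumes, for illustration only, that modules correspond to directory prefixes in `#include` paths; the `SourceFile` and `Violation` types and the `forbidden_accesses` function are hypothetical.

```cpp
#include <sstream>
#include <string>
#include <vector>

// One source file: the module it belongs to, its path, and its full text.
struct SourceFile {
    std::string module;
    std::string path;
    std::string text;
};

// One forbidden include: the offending file and the offending line.
struct Violation {
    std::string path;
    std::string line;
};

// Returns every #include line in files of `from_module` that pulls in a header
// from `forbidden_module/`. Only lines that start with "#include" are checked.
std::vector<Violation> forbidden_accesses(const std::vector<SourceFile>& files,
                                          const std::string& from_module,
                                          const std::string& forbidden_module) {
    std::vector<Violation> found;
    const std::string quoted = "\"" + forbidden_module + "/";
    const std::string angled = "<" + forbidden_module + "/";
    for (const auto& file : files) {
        if (file.module != from_module) {
            continue;
        }
        std::istringstream lines(file.text);
        std::string line;
        while (std::getline(lines, line)) {
            const bool is_include = line.rfind("#include", 0) == 0;
            const bool hits_forbidden = line.find(quoted) != std::string::npos ||
                                        line.find(angled) != std::string::npos;
            if (is_include && hits_forbidden) {
                found.push_back({file.path, line});
            }
        }
    }
    return found;
}
```

A test for the rule from the talk would assert that `forbidden_accesses(files, "source", "foo")` returns an empty list, and would fail as soon as someone adds such an include.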

But how much can you express in ArchUnit? Not that much, it turns out. We tried to write something more or less substantial, but unfortunately it did not work out very well.

So it's an open question. Think about how to do this; we don't know. We are discussing it, and there are no serious solutions yet.

It seems that a special language is needed, one that reads like English on the one hand and is technical on the other.

And in this language, it should be possible to write, write, write, explaining how our architecture is structured.

And then, if we have described everything, set up style checkers, established coverage control,

then we can invite robots in large volumes without worrying that they will cause harm.

They will then contribute, contribute, adhering to our style and our architecture.

This is where we need to get to. Unfortunately, we are not there yet.

That's all. Subscribe to my Telegram channel. Thank you very much.

Subtitle editor A. Olzoeva Proofreader A. Kulakova

Interactive Summary

The speaker expresses fear not of AI replacing programmers, but of it working alongside them, potentially degrading code quality. He outlines three common problems for programmers: defects, security vulnerabilities (like SQL injection), and code complexity (dirty code, duplication, technical debt). The core issue, he argues, is that AI, perceived as infallible like a calculator, often produces low-quality, buggy code, introduces inconsistent programming styles, and poses a significant data leakage risk. Management's overestimation of AI also pressures human programmers, leading to self-doubt. To counter these threats, the speaker proposes five strategies: performing small code reviews, enforcing strict test coverage, creating a 'coding manifest' to define team standards for AI, implementing rigorous style checkers, and developing methods to describe architecture in a machine-readable format to ensure consistency.
