How Chinese A.I. Could Change Everything | Dr. Michael Power of Kaskazi Consulting

Transcript

When I speak to really well-informed

people of the US AI ecosystem, I'm

horrified by how little they know about

the competition. The Chinese approach,

which is open source, open weight

specifically, will likely win out.

There's not much road ahead for Nvidia

to continue to miniaturize. The

Chinese are now moving, I think, into

the next stage. Smart factories are just

spreading across China at an

extraordinary rate. Last year, China

installed more robots than the rest of

the world all together. What DeepSeek

came up with is far more radical than

anything OpenAI has ever come up with. The

amount of debt that's creeping into the

system both on and off balance sheets at

the moment should be of concern. It's

particularly of concern in related areas

like, dare I say it, Oracle. And all the

numbers that are being talked about by

the likes of OpenAI every now and again

start to almost approach the same sort

of level of absurdity. If you're on

bubble watch at the moment, you focus on

Oracle. We're heading towards some sort

of crisis point.

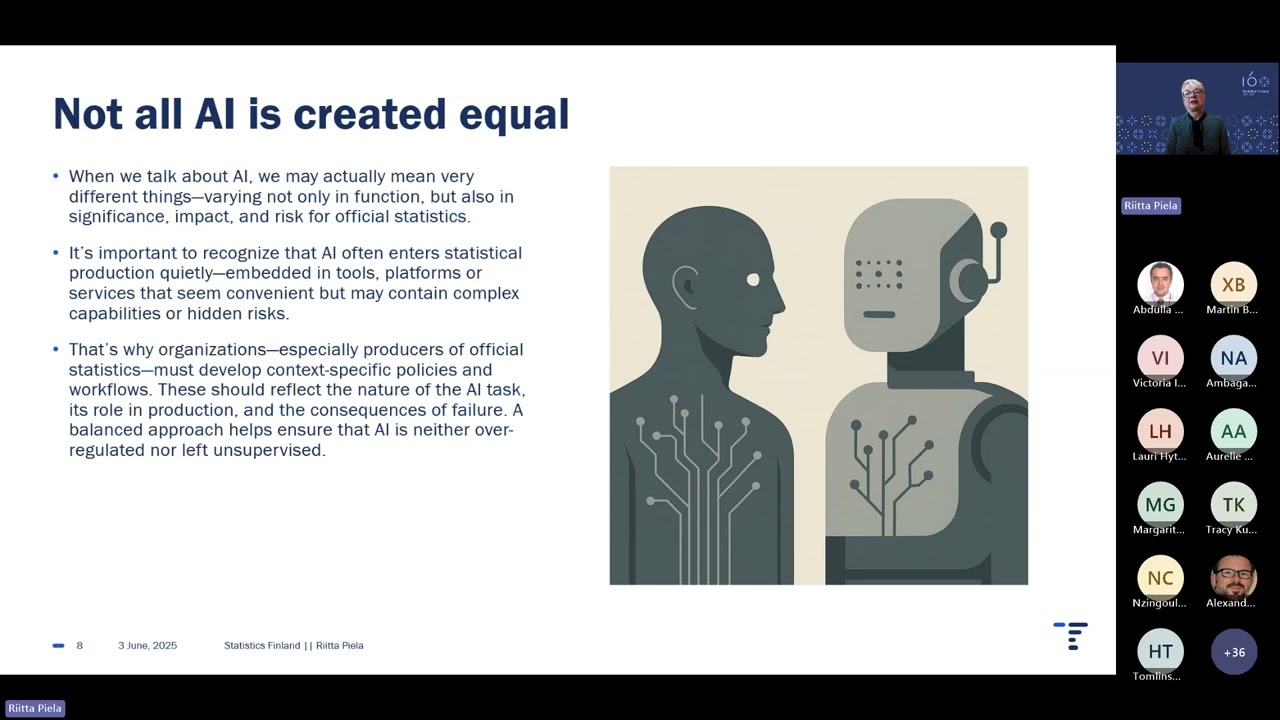

>> I'm joined by Michael Power of Kaskazi

Consulting. Michael is a veteran of

macro strategy among many topics.

Michael, it's great to see you. But you

right now have written an essay

that is kind of blowing my mind. It is

an extremely in-depth analysis of US AI

architecture and Chinese AI

architecture. You find that Chinese AI

architecture has significant, uh,

advantages over the US AI architecture

and that basically the hundreds of

billions of dollars and trillions of

dollars that are currently and going to

be invested in US AI right now may be

basically a bust. So this has

extreme geopolitical consequences for

the entire world, China and the US,

but also economic and market

consequences as well. So there are so many

strands we can grab on to, but just how

about you take us into the

journey. How did you first start

thinking about this? Tell us about the

process of you discovering this and then

we'll just get into it.

>> Well, thank you first of all, Jack, for

having me. I think that I'm now in

semi-retirement, though I've discovered

that the word retired doesn't exist in

retirement. And I'm one of these people

that was determined not to allow my

brain to atrophy. And I still continue

to make plenty of speeches um on those

more traditional subjects that you

mentioned before. But nevertheless um

I've made it my business to try and

understand probably the great uh theme

of the world today. Not least because it

has completely enraptured Wall Street.

Um and I felt that uh in order to be

able to have a meaningful

contribution to make I needed to

understand it. So what I did is that for

the first six weeks is I just immersed

myself in everything AI and in the first

instance that basically meant learning

the language of AI. Um because a lot of

it is jargon which is uh not easy to

understand. Um and I first translated it

into language which I could understand

and any reasonably intelligent person

such as yourself could understand as

well. And when I was doing this, I

started to realize coming from an

objective perspective that the narrative

that dominates much of uh Wall Street

thinking, um, was not as strong as, uh,

it was made out to be, and that the

Chinese, although I don't think the

situation is, as we speak today, one of

the Chinese leading, deep down the

structural process that they're putting

in place with regards to how they're

approaching AI, I think, uh, roll forward

three years, uh, will, um, outmaneuver that

of the United States and that their

model which is first and foremost built

on the idea of open source rather than

closed source and we can come to that if

you will um but nevertheless their model

um has a lot more runway ahead of it and

it's a lot cheaper runway uh than does

uh the US model which I think is

actually starting, uh, to run out of breath.

If you look at the US AI

ecosystem, the valuations in that I

would roughly estimate at 15 trillion.

If you look at all the publicly traded

securities and then the venture capital

funded companies, whereas the

Chinese, the market cap of the Chinese

ecosystem, like, a lot of it is private

or government-backed. But I mean, you

know, Alibaba has a market cap of

less than a half a trillion dollars,

which is certainly still a very large

company, but uh pales in comparison.

First of all, Michael, yeah, I just

want to share some of the

consequences. I mean, you said that

basically the grim reaper is coming for

the American AI bubble. So we'll get

into that in a second, but

first, what is the source of the Chinese

advantage over US technology, and how

does that disprove or challenge the

consensus, uh, within America about US AI

advantage, uh, in Silicon Valley as

well as Wall Street?

>> Well, I'm going to use a four-letter

word in a very erudite, um, discussion

like this. The essence of the Chinese AI

approach is that it's free.

And I can't say that enough. And that is

because they have a completely different

philosophy as to what AI should be as

compared to the US model.

China is building a structure at the

moment where AI will be a utility like

electricity

and the value that is going to be

derived from using the electricity is

where they're going to benefit but the

electricity itself is a pretty no value

product. Yes, you can make a little bit

of money on the way by generating

electricity but as you well know uh the

real valuation from electricity comes

from what it is purposed to do. Uh,

whereas the US model is essentially that

it's a service that can be monetized if

they can monetize it to the degree that

they need to given capital expenditure

they've undertaken but that it's a

service and these two different

philosophies profoundly different

philosophies mean that there are two

paths developing and to some extent um

the analogy is not precise but it's good

enough um essentially the Chinese

approach is, uh, the Android approach

because, as you probably know, although

Android is technically owned by Google,

Google derives no money from that. It's

actually controlled by a foundation and

that foundation uh is a nonprofit. But

compare that to Apple and their

ecosystem which is uh you know extremely

profitable. Um Android actually follows

another great example and that is of

course Linux. Um and if you look at the

top 100 supercomputers in the world

today,

Linux runs 100 out of 100. And so

essentially there is this philosophy of

open source or open weight which is

essentially the Chinese uh approach to

AI versus closed source. And my sense is

that over time, as with Linux, as with

Android, um, the Chinese

approach, which is open source, open

weight specifically, um will likely win

out, which is why I say they have a

longer runway ahead of themselves uh

than uh does the United States. I should

say in this

that the US may, like Apple is able to

do, create an ecosystem, um, that Apple is

secure in. And there are four countries

in the world where Apple is more popular

um than uh Android but everywhere else

in the world um Android is more popular

than Apple. Those four countries by the

way are the US, Canada, UK and Sweden.

And what we may be beginning to see in

a very analogous sense is the emergence

of a bifurcated world where, because in

large part the Chinese offering is free

uh it's winning big time outside of the

core uh capitalist world centered on

Wall Street. And so, and I've often had

chats to people who are essentially

Apple heads or iOS heads and they don't

really actually grasp the world of

Android. They don't actually understand

that there is another way. Um and I

think what's happening at the moment

is that, uh, there is another way, uh, in AI

and it's winning big time uh in the

world at large not specifically by only

looking at what's happening in the

United States.

>> And you note that Chinese

AI models have a very high rate of

adoption and that a a venture capitalist

I believe from A16Z

said that 80% of the startups that they

fund are using Chinese models not US

models which is certainly very

surprising. So the open source

versus closed source, I think, so

closed source, what it literally means is

that the model's weights are not

disclosed, whereas with open source they are.

But you're referring to the pricing

model. So it goes further than that.

>> Okay.

>> Uh as an open-source model, you can play

with it,

>> but with a closed source model, you

can't. And I always make the um

distinction that closed source is like

ordering beef Wellington. And a perfect

beef Wellington arrives on your plate.

open source. You order beef Wellington,

but when it arrives, you get a list of

all the ingredients and you can play

with it and mix the ingredients up and

completely reform it and conceivably

create egg and bacon ice cream out of

it. Um, but nevertheless, you have

complete freedom once you receive your

beef Wellington in the open-source world

to reconfigure it to whatever way you

like.

>> Okay. Okay. But when you say it's

free, you know, so ChatGPT is

massively subsidized and there are

free versions of Gemini, ChatGPT, all

the American models, and I'm sure there

are in China, but are you saying that

China doesn't charge its users, or that

the charging model,

the pricing model, is different?

>> I'd say less than 5% of the Chinese

models um have uh a user fee attached to

them. Um and it's only for unbelievably

specialized areas. And to be perfectly

honest, um, generally speaking, the

answer is no, they don't have a fee

attached to them.

>> Okay. But then what is the business

model? You know, a key part throughout

your piece, which is excellent and

which we'll link for our listeners to

read, but a key part of your

piece is that China has a cost

advantage, but they still have costs.

So, how are the Chinese LLMs and the

clouds and the AI in China going to be

funded if they don't make any money?

>> Well, just take Qwen, which is by far

the most powerful of all of them. That

is Alibaba's large language model. Qwen

is applied and used everywhere else

within the Alibaba community. So, Taobao,

which is their online shopping, or Ali

Logistics, or Alipay will use almost

certainly Qwen as their model. Now in

using that model

there may be, and often is, a

small fee that gets paid across from Taobao

to essentially the central resource that

is Tongyi, which is, uh, the IT

sector that controls Alibaba and that's

how Alibaba then gets funded. Now this

is not the same I must say immediately

for all Chinese LLMs. There are some now

that are not connected to a broader

commercial network but we'll come on to

that later because there is a mechanism

now arising where they can be. Um, but

the central point is that, uh, it's

like a central expense, the research and

development budget, as it were, of Alibaba

that goes towards Qwen.

Some fees do come in from, uh, other parts

of the Alibaba Empire to help fund uh

that budget.

>> So, let's say Michael, if you're right,

in five years, what does the world look

like? Uh Nvidia, all of

>> Can I just follow on something there

just because it's really it's clever

because it's only in the last week that

it's happened. As I said to you before

that Google essentially owns um Android,

but doesn't really make much money from

it. However, it has recently agreed

because virtually every Samsung phone

that I know of is run on Android. It's

done a deal with Samsung that in the

search engine of every Samsung phone

going forward will be Gemini, in other

words, Google's LLM. and Samsung is now

paying a fee to Google for the rights to

have that embedded in their phones. So

there is a way an example of how it's

being done in that context. But this is

just happening everywhere within the

Chinese community. It isn't just a you

know one-off exception. But but Android

is able to be partly monetized through

arrangements like that. And I'm just

using that as an example.

>> Thank you for explaining that Michael.

So now we have just a sense

of our terms, what we're dealing

with here. So if you're right, in 5

years what does the world look like: the

publicly traded semiconductor supply

chain of US AI, the privately backed

AI models, uh, most recently, you know, uh,

OpenAI, Anthropic, etc.? What does that

world look like? I imagine that those

companies would have severe

challenges. And how does that world

differ from the scenario envisioned

by many of the, uh,

rosy-eyed US AI optimists who

probably, you know, expect Nvidia's market

cap to be, uh, above 10 trillion and

expect OpenAI to be massively

profitable and the rest?

>> Well, first

of all we have to be careful that when

we use the term world we don't just mean

US world we mean world

But unfortunately when I'm listening to

Bloomberg and CNBC and they use that

term world, it generally speaking means

like Apple world. It's just the world uh

that is defined by uh the United States.

There are some international dimensions

to it but it's a very small part of the

whole. So if we're talking about what

will US world to start to answer your

question look like I think we're going

to see that and we're already beginning

to see, when Andreessen Horowitz is seeing,

you know, 80 to 90% of the people presenting to

it using free software from China, that

there are, and I'm borrowing a word here

from Jamie Dimon, and you'll understand

it given your background, cockroaches all

over the place at the moment, um, that

are essentially starting to be used.

We're seeing Airbnb now has essentially

moved over, uh, to Qwen. Um, but

essentially there is nothing stopping

those people who find uh this software

and I'm going to use a phrase which is

not really fair because it's actually

very good but good enough. Let's just

start with good enough. And they're

finding if they can use it for free and

it's good enough. And just take the

example of Qwen: it's fluent in

something like 120 languages, which if

you're Airbnb is rather a good plus to

have. Um, so I think what we're seeing

now is that there is leakage between the

two ecosystems that have essentially

been described by us, US world and

world. And there is leakage from US

world into world. So, projecting five

years forward, to go back to your

question. I see this leakage is

continuing. However, it goes beyond that

because there are technological issues

that are now beginning to question

firstly the making of the hardware and

secondly construction of the software.

And they feed off each other but uh they

need to be looked at separately before

we combine them. First of all, I don't

think Nvidia's hold on the chip market is

going to be anything like as strong. And

again, uh part of this is because what

Amazon's doing uh with its own

chipmaking, what Google is doing, Amazon

has the Trainium, Google has the TPU. Uh

and I think what we are seeing is that

even within the United States, a

low-level civil war is breaking out

between the major players in big AI.

um so much so that the dependency on

Nvidia chips is starting uh to be

reduced. But outside of um US AI in the

rest of the world there is no doubt in

my mind that we are seeing all sorts of

efforts to diversify

uh, the Chinese even have a word for

de-Nvidia-ization,

um but diversify away from dependence on

let's just call it expensive chips and

most of those expensive chips

historically have been Nvidia chips. So

in the hardware side there are all sorts

of options that are starting to open up.

First of all, also, and you'll see in the

opening part of my presentation, the

whole area of Moore's law is starting to

come under pressure because basically

we're getting to a point where you can't

really make chips much smaller um and

still hope to carry uh to continue uh

the compute level that comes from those

chips. There are rules of physics, rules

of what I call material chemistry, and

then rules of economics. I call them the

three assassins that are actually saying

that there's not much road ahead for

Nvidia to continue to miniaturize

its, uh, chips. What China is doing is

essentially creating a and they're not

alone in this. It's happening even in

the United States, but a whole new

ecosystem that is basically built around

not two or three nanometers but somewhere in

the 14 to 18 nm specs.

And what they're doing is that they are

building what I call cognitive

skyscrapers, cognitive towers. So they

use the chip as the base, but then they

layer other sorts of chips and uh

memories and various other things on top

of it and create a sort of megallike

world. Um and that is increasingly the

way forward. So I don't think China is

uh thinking smaller, it's thinking

smarter. Um, and this is one way where

you can continue to be very relevant in

the chip space without chasing um down

that rabbit hole um the idea of making

the chips ever more small. Um because as

I said, Moore's law is close to dying. It's

on its deathbed. It may not have passed

its last breath but it's not looking

good at the moment. So in the hardware

space,

uh, Nvidia and we're already seeing

certain, um, things that they're doing

here. They're doing it more in the

software space, but they too

are also starting quietly to do it in

the hardware space, too. But Nvidia is

recognizing that its model up until now

cannot be the only way forward for it.

It has got to actually diversify away,

actually potentially look at slightly

larger chips, actually look, and we

haven't talked about software yet, but

actually look at complementary software

with those slightly larger chips in

order for it to remain relevant. The

problem with that for Nvidia is that the

margins associated with that alternative

are much much much lower than they are

with the model that they're now pursuing

which then starts to,

moving forward, potentially undermine the

hold that Nvidia has the margins that it

has and then that will potentially play

through into profits and therefore stock

market valuations. So that's what's

happening on the hardware side. And I

truly generalized in a number of areas

there in order to be able to answer your

question, but that's essentially what's

happening. And as I said, the Chinese

are doing it. Yes. And and they've got a

vested interest to do it. But even

Amazon's doing it. Even Google's doing

it um at the moment that they want to

diversify away from these high-priced

chips that Nvidia has that increasingly

are not built for purpose.

and uh the new world um that we're

moving into for chips requires a

different combination. So

that's what's happening on the

hardware side. On the software side, um

there's breakthroughs happening

everywhere. Um and it's not that Nvidia

um hasn't got a very powerful software

hold on its chips. It does it

through something known as CUDA. um

essentially it ties in developers to the

way that uh they're able to use Nvidia's

chips. What's happening at the moment is

that, um, both in the US but especially

in China, people

are finding ways to circumvent it. And this

they are doing on both sides of chips:

the training of them and the inference

that is derived once you train them.

So you endow a chip with knowledge and

then you get that chip uh able to answer

a question that you and I might pose to

it. Um but in the training stage which

is where Nvidia has an especially good hold,

um, there are breakthroughs taking

place, the most important of which

happened in the last 10 days, um, which is

something that DeepSeek did, and it

happened on New Year's Eve. I don't

think the market has truly understood

the scale of what that particular

uh breakthrough means. But it's also

happening uh in the inference side um

which is uh where to be perfectly honest

lesser chips are used, often chips that

were previously involved on the training

side after two or three years they've

still got a little bit of useful life

left in them. So they get transferred

over to the inference side for another

couple of years where they can still be

usefully employed. But the margins on

inference chips are much lower than the

margins on training chips. But the

essential point is Nvidia here is now

facing attacks on both fronts, hardware

and software.

>> So, it sounds like the

consequences of what you're saying

are immense because all of this money in

the United States in public markets and

private markets uh is being deployed on

the premise that AI margins uh might be

slightly lower than the traditional

extremely profitable US software

business model that has 80% 90% gross

margins. slightly lower margins but

still a very profitable enterprise. You

are saying that we're likely

headed to a world where profit margins

are extremely low and it's something of

a, um, you know, communitarian co-op model,

an open-source model, and that, you know,

the consequence you're saying Michael is

that the trillions of dollars being

spent by the US is basically just cash

incineration and that a lot of investors

are going to lose money.

>> Obviously,

it'll take time to pan out and I'm not

saying it's going to happen tomorrow,

but I did my PhD thesis ultimately on

the concept of commoditization

and um I am able to recognize the traits

that indicate that a particular product

or service might be uh being subjected

to the forces of commoditization and I

can now see those forces gathering both

on the hardware side and on the software

side.

>> Yes. And you you come from South Africa

which is a you know dominant player in

commodities. You know it is no surprise

that the you know South African uh stock

market is a tiny fraction of the size of

the US stock market which you know has

commodities but also has these things.

Commodities. It is hard to make

money from commodities and they

certainly do not command 40 or 50 times

earnings multiple

>> and South Africa is an example of that.

But there are plenty more commodities in

the world than simply those that are uh

like, um, you know, wheat or

metals or any of those or even dare I

say it some of the energy commodities.

Uh there are plenty of other commodities

that have existed that have come into

being. I mean I would say that um you

know petrol-based automobiles are on the

verge of becoming commodities. Um and so

the concept of commoditization is not

exclusive as I say to the traditional

term that is described as a commodity.

>> Yes. And Michael so I've said what is in

your piece about how it could you know

end badly for US investors because of

the consequences. But can can I get you

to say it?

>> Yes. Um, essentially what is happening

is that China has realized that and part

of this has come about by the fact that

they've been subjected to certain

embargoes or controls,

particularly from the United States.

China has been forced to look for

another way, another Tao, as I sometimes

like to call it, because, uh, the Tao is

the other way in Chinese, in

Mandarin Chinese. And what's happening

is that uh necessity being the mother of

invention um because they didn't get

those Nvidia chips, they've had to find

other ways of doing it. And as I say,

they they thought not smaller, they

thought smarter both on the software and

on the hardware side. And what DeepSeek

did last year and what I predict it's

going to do this year and we've already

had a foretaste of that with their

latest paper is uh essentially challenge

um the margins that exist on the

software side of the business. Sun Tzu

would basically advise any Chinese

general: if you don't think you've got

the right number of forces to be able to

beat the enemy, change the battlefield.

And there are plenty of almost Sun Tzu

pieces of advice that are now playing

out in the world of AI. Um, as I say,

there's going to be no gunfights at the

3 nm or 2 nm corral between Chinese chip

makers and, let's just say, Nvidia, because

the Chinese aren't going to fight there.

They know that's not a gunfight they

can win. But, uh, they are now shifting

the battlefield and shifting it

dramatically.

And when you are dare I say stuck in the

US ecosystem, I don't think you can

imagine that there might actually be

another battlefield for you. But there

is and the rest of the world is catching

on. Um and it is starting to spread both

software and hardware. Although the

Chinese don't have a lot of chips to

spare to export at the moment, but come

2028, the forecast is that China will be

producing more chips than it needs. Um

and it already dominates um the world of

what's called commodity chips to go back

to where we were before. Um you may have

remember the story with NXeria which was

a Dutch company that got essentially

shut down by the Dutch authorities at

the heads of Trump administration.

NXeria produced

quote unquote commodity chips for the

particularly auto sector of Europe and

this brought the auto sector to a

standstill. The point being here is that

the low value low margin chips in the

world the Chinese already dominate

probably, uh, yeah, up to the sort of

almost two-thirds of the actual chip

supply in the world now has quote

unquote been and I don't want to say

commoditized to the point where it

actually can't create a profit but where

the margins are very very thin and you

have to be incredibly efficient if you

want to stay in that space. So what's

happening is that we're seeing this uh

essentially march uh up the value added

ladder. Um and the Chinese are now

moving I think into the next stage which

is the, um, medium-value chips, 14 nm to

18 nm,

um, that essentially have huge uses

across the world. I mean massive uses:

vehicles, your cell phone, cell

phone towers. I mean, the areas where

those sorts of chips are

prevalent, um, are almost too many

to mention in the world of let's call it

the latest Apple iPhone um yes you want

to have one of those tiny chips um but

there are many more applications for

chips in the world now I'll give you an

example which is not uh being wholly

recognized yet but chips that are being

embedded in the smart factories of China

so that these smart factories can

actually operate almost remotely. Um

they don't have to have people in them.

Uh and those chips are not necessarily

um driven by a constraint about size.

Size is not always the issue. Um but

they need to be able to perform the

function. I'll get back to my earlier

comment. They're good enough. The result

is that smart factories are just

spreading across China at an

extraordinary rate. I mean just to

understand it last year China installed

more robots than the rest of the world

put together. So we are seeing

this process take place across all sorts

of areas which are not necessarily again

wholly um acknowledged in the United

States. Now, I'm being a little cruel

here, and forgive me, but they're not

being acknowledged because there are not

many factories left in the United States

for their chips to be embedded in. And

the Chinese now, with, by 2030, 45% of

the world's manufacturing production

versus 10% in the United States, the

Chinese are essentially moving their

industrial structure over to smart

chips, smart factories. Um, and they're

not these 2 nm, 3 nm chips that you're

going to find in the world of iPhones.

So, China has a, as I say, it's a

different path. It's a different road

and it's not fully recognized partly

because particularly in the United

States where consumption is 80% of GDP,

services is 80% of GDP,

most of the AI talk that comes out of

the United States is related to

services. agentic AI that is essentially

going to allow you to buy an air

ticket on your phone. These are what get

all the talk in the United States. They

get talk in China. I'm not saying that

they don't, but there are plenty of other

conversations taking place about where

chips can be used um embedded in drones.

Um to an extraordinary degree, chips are

being used to uh run uh the electricity

system to an incredible degree across

China: the solar system, the

wind turbines. The applications

for chips in China tend to be a much

longer list than the applications for chips

in the United States.

>> Thank you, Michael. So, we're recording

January 8th, 2026,

a little less than a year ago in late

January 2025, DeepSeek, a Chinese AI

model company that was actually, I

think, started by a Chinese AI hedge

fund manager, of all, you know, places,

of all people, um, launched their

DeepSeek model, R something.

>> R1.

>> R1. And that model, and this fear

that emerged a little less than than a

year ago uh caused a mini you know one

or two day crash in the US semiconductor

supply chain stocks particularly Nvidia

if I remember was down 16 or 17% at one

time. Now, on January 1st, 2026, so the

first day of the year while everyone was

uh partying in the west and the markets

were closed you're saying that they have

released a new model or a new paper that

could have similar consequences. Tell

us about this.

>> Well, I think it's essentially um and

what DeepSeek is doing at the moment is

a sort of dance of the seven veils

before it actually drops R2, which it is

going to do, in my prediction, just ahead

of the Chinese New Year which starts on

the 17th of February. But essentially

there have been a number of releases and

this is the last release I suspect big

release before that date. uh and the

biggest by far because what they've done

is they found a mechanism for

essentially um attacking uh the whole

idea of memory in the training process

of chips. So now they have found a way

where previously a particular chip

produced let's just say 100% of memory

that same chip now if properly arranged

within the software you only need 7% of

its power to produce that 100% that

previously you were able to get. So

essentially they have um increased the

power of uh a small chip by 15 times in

the training process, and

this essentially leads again towards the

commoditization of high-value chips

because they found a way round the whole

idea that you need our chips because you need

100, and what DeepSeek has said is, well,

actually you only need seven to do that

uh because that seven will give you a

100. Uh and it's all about the new

phrase that everyone is talking about

architecture and architecture is

happening both on the side of training

chips as well as on inference. In fact,

until you know a month ago, people

didn't really talk about architecture on

the side of training. Yes, there was

some, but they did talk about

architecture on the side of inference.

the Chinese not just the Chinese uh this

big company which uh has just been

bought by Nvidia in Singapore. Manus is

very good at architecture but it's

inference architecture. What DeepSeek did

this time was come up with an

unbelievably radical way of reinventing

the architecture on the training side of

the chips, which has largely been ignored

up until now. Now why I think this paper

which is part of a whole as I say seven

veils um is significant is that it's

setting things up for the release of R2

which as I say is probably going to

happen at the start of the next Chinese

year. Um we had another indication for

instance in mid December which only the

geeks really picked up on but the

Chinese, DeepSeek,

produced something called, I'll get it

right, the V3.2 Speciale, which was

essentially a mathematical standalone

model

and it basically went to the top of the

benchmarks. Not every one of them but

nearly every one of them went straight

to the top of the benchmarks. So what

we're seeing is that once you put all

these seven veils together, and I'm not

going to bore you with all sorts of

acronyms as to what each of those veils

constitute, but you put all of those

together and then tie them up in what is

going to be coming out, I suspect in the

middle of February, um, and that is

going to be monumentally significant

because DeepSeek is going to, I

think, in most areas, go to very near,

if not at the top of every benchmark

that counts. They're going to have,

and you may not know, the name of the

game is the number of parameters.

They'll have in excess of one trillion

parameters. Um they will have this

what's called uh mixture of experts

structure and they will have MLA which

is the

paper that we've just been talking about, which

dropped on New Year's Eve. And

they'll put all of these together to

create an unbelievably powerful model

that is powerful both on the training

side and on the inference side, and I

think potentially having an even

greater effect than R1 when it was

released a year ago.

>> So there's

two things, training and inference. Like,

let's say, the old school creating a

computer that can beat human beings in

in chess, which, you know, has existed

for close to 30 years now but training

is the process of getting the computer

to learn chess and develop its

strategies. Inference is, okay, you're

playing Garry Kasparov now, you have to

actually run. Western US models of

training have been extremely capital

intensive and the Chinese models appeared

to be far less capital intensive, and

that's why Nvidia, you know, crashed 17%

when this DeepSeek news came out, you

know in late January 2025 because oh my

god they don't need to spend that much

on on Nvidia chips I know there was some

doubt about that Michael is it really

true that they only spent you know, a

tiny fraction of of uh Nvidia chips.

>> I saw a paper yesterday that said that

DeepSeek actually spent 1.5 billion on that

first R1.

And even if it did,

OpenAI

alone, its own equity

contribution, not co-contributions from

other players, towards Stargate is 19

billion.

And yet what DeepSeek came up with is

far more radical than anything OpenAI

has ever come up with. I mean, on a scale

of

20 to 30 times, and it did so for, let's

take it to the worst possible extent, 1.5

billion. I don't think it was anything

near 1.5 billion, but I'll accept

that forecast.

>> A lot of money but a tiny fraction of

what the US companies are spending. A

tiny fraction

>> tiny fraction. So the point being is

that um once R1 dropped last year, they

gave the model to Nature magazine in

London, which is one of the most

prestigious magazines in the world. And

over eight months, Nature tested that

model and in their September cover issue

last year came out and said every claim

that DeepSeek made as to what R1

could do was verified.

Now since then as I say they've dropped

a number of other upgrades and you've

had to be really buried in the whole

process to see each of these incremental

upgrades. I mentioned the one that

happened in December regarding the

mathematical capabilities. Um but I

think what's happening now is that there

is a cumulative effect of all these

upgrades that is going to be rolled into R2,

and I don't know how much it's going to

have cost them to get to that spec.

I really don't. I'm somewhat

skeptical of the claim of 1.5 billion

because I'm not sure that the hedge fund

that owns DeepSeek had that sort of

money to spend unless someone was

handing them some money slightly, you

know, through the back pocket. But in a

way, it's irrelevant

because none of the claims as to what

these models are capable of doing are being

disputed.

They're all showing up in the benchmarks

and they're all being subjected to

unbelievable peer review

and anyone who's looked at that paper on

the 31st December last year has come

back and said, "Yep, their claim is

absolutely spot on. They've done

it." They found this way around this

whole problem that we've all been facing

for a long period of time, which is

what's called catastrophic

forgetfulness, where you're training a

model, you get up to a certain level,

um, and then you add more data into it

and it just forgets everything that it's

already learned. And they've essentially

created a very stable way for that model

to accumulate and to sort out

information and keep it properly

organized so that they continue to add

what we call scale data into that model.

And the result is they now have a very

very clever stable way to grow the

database of a model. And the result is,

I think, pretty radical, to be

perfectly honest and there are a number

of geeks out there and I'm not a geek.

I'm not a techie but I've read the

papers that I can understand and

virtually all of them have pretty much

confirmed what DeepSeek is claiming.

>> Later on I've got some potential pushback

on your thesis, but I want to

get into the nitty-gritty uh where you

know you're not a tech geek but you've

kind of become a little bit of a tech

geek. You say the three assassins of

the US AI architecture, the US...

>> Oh, let's just call it chips generally.

>> Chips. Chips generally. Yeah. And

potentially the the bubble in AI in in

US uh private and public markets of US

AI. The three assassins you say are

physics, material science, and

economics. Let's begin with physics.

What what why is that an assassin of

chips?

>> Well, you get down to a certain

level where essentially the process by

which the chip operates in

physics becomes unstable.

You have basically switches that are

either on or off.

But the electrons that control those

switches are able to slip through

because they are so small.

they can slip through and essentially

turn that chip into not an on or an off

but a maybe

and that basically starts to question

um the robustness of the model.

So what it does is when you're getting

down to these incredibly small

things, the the actual, you know, it may

look like a piece of steel or hard

silicon to you, but it's actually got

little gaps in it and these electrons

are finding a way through it. They use the

expression like ghosts through a wall

and they move through and go to the

other side and then essentially turn

that particular switch into a maybe,

which really starts to question at

what point can you continue to

miniaturize everything

and still have the security of

knowing that when I want that switch to

say off it says off. It doesn't say

maybe.

>> You're saying that chips are

coming up to a limit, a theoretical limit.

Chip chip sizes are getting so small

that basically um you know electrons are

going crazy in there and it gets so hot

that you you know need a lot of the

chips to be devoted to cabling

to, you know, control the thermic

output so so it doesn't overheat and and

ruin the chip. And and I will note

Michael, not you, but there have been

haters of Moore's law like over the past

20 years who have said Moore's law is

dead. Moore's law is, you know,

this doubling every two years:

the number of transistors that we could

put in a semiconductor roughly

doubles, which has, you know, held true

since the 1960s. That's no longer going

to happen. And I want to say Moore's law

refers to the number of transistors in a

chip. It does not refer to compute

power, actual compute power. Actual

compute power has way more than doubled

um over the past 15 years because of

Nvidia and because of parallel computing

and the fact that all the electrons are

are you know going at the same time. The

wires are going at the same time you

know and Nvidia invented that. So you

know, Jensen Huang, CEO of Nvidia, has said

that Moore's law is dead in the other way,

that like computing power has way way

way more than doubled every two years

because of that thing. But you're

saying, your critique is saying,

finally, like, Moore's law is going to be

dead and that you can't double

the number of transistors every two

years. It's simply getting too small. It

went from 30 nanometers to 15 nanometers to

7 nanometers and now the latest Nvidia

Blackwell is 3 nanometers.

Obviously it can't be 0 nanometers or

negative nanometers. So

there's not a ton more juice to be

squeezed out of that lemon.
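
The doubling rule described in this exchange can be sketched numerically. This is a minimal illustration only, assuming an idealized, constant two-year doubling period; the function name `moores_law_factor` and its parameters are hypothetical and do not come from the conversation.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth multiple after `years` if a count doubles every `doubling_period_years`.

    Assumption: a perfectly regular doubling cadence, which real chip
    generations only approximate.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Print the idealized multiple in transistor count over a few time spans.
    for span in (2, 10, 20):
        print(f"{span:>2} years -> {moores_law_factor(span):,.0f}x the transistor count")
```

Under this idealized rule, twenty years of doubling every two years is about a thousandfold increase, which is why each further halving of feature size carries so much weight in the argument.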

>> We talk of diseconomies of scale. There's

essentially diseconomies of physics and

diseconomies of material science. And at

some point, and you can't divorce this

entirely from the cost of being able to

achieve this, at some point it becomes

prohibitively expensive

without that much uptick in what you

mentioned compute to move from 3nm to

2nm

and that there are complications that

start arising in physics, in material

science. and let's leave but one cannot

leave on one side the whole issue of

economics because ultimately that comes

in and that's probably where I come from

and spoils everything. So what we're

doing is we're seeing the diseconomies

of physics. We're seeing the

diseconomies of chemistry and yes there

are potential workarounds to use that

wonderful phrase but they are

unbelievably expensive and you ask for

instance and one critical player to ask

in this whole process is ASML in the

Netherlands. Can you carry on making

your EUV machines, so much so that

you can actually start producing 2nm

chips? and they will say we can

but it's complicated and it's not just

complicated it's almost prohibitively

complicated to do so people have talked

about changing things from silicon to

something else you know there are all

sorts of areas and one of the most

interesting areas potentially is

photonics although I should hasten to

add here that China probably leads in

the whole area of photonics which is the

whole idea of embedding data in light

itself uh which is a completely

different way of thinking about chips. I

mean it's it's it's just moving into a

whole different space. Um and and and

it's still five, six, seven years away

from having anything that's remotely um

practical. But in terms of of research,

China probably leads photonics at the

moment. It's not an undisputed claim,

but it's probably a claim. And so

that is essentially saying, forget

silicon, we're going to move to a

post-silicon world. But while we still

live in that silicon world we are seeing

these diseconomies of physics,

diseconomies of material science and

diseconomies

of economics and to some extent these

three assassins are working together not

consciously obviously but but there is a

sort of strange cooperation that's

happening between all three.

And I'm not going to say

that Moore is dead or Moore's law is dead,

but he's on his deathbed and these three

guys are standing around that deathbed

sort of rubbing their hands saying, you

know, your time is up, mates. And what

the Chinese are saying is, well, listen,

we're not going to have a gunfight at

the 3nm Corral. It's just becoming

unbelievably expensive to play at that

game. And we're not going to win because

we just don't have the EUV machines that

come from ASML to be able to play in

that game. Let's move the battlefield.

let's fight this war in another space

and using other sorts of

mediums, and this is why this so-called SiP,

system in package,

these cognitive towers, is now becoming

something which pretty much everyone

including Nvidia is now pursuing.

>> So,

Michael, the game at which US chip

makers, primarily Nvidia, have excelled

and dominated and crushed the

opposition. That game is making chips

smaller and smaller and more

efficient. You're saying that that game

is something where China is saying we're

not playing anymore. And that the

advances in computing have mostly come

from making chips smaller and smaller

and smaller. Parallel computing, of

course. You're saying that in

the future the gains are going to be

made from connecting the architecture

connecting the chips themselves um

something called advanced packaging so

allowing uh the chip to be 3D and that

whole system so the chips can talk to

each other rather than just the most

efficient chip because the most powerful

chip is no longer you're saying going to

be what is the driver of effective

usable compute which is what it's all

about. And you noted in your piece

that the, you know, former

Google executive Eric Schmidt said that

the constraint of AI is not chips, it is

power and electricity.

>> And that's true. I mean, when you

see the amount of power that's going to

be required to run the likes of Stargate

and you've seen the pictures or these

maps of where all the data centers are

being put up over the United States. And

you don't live in Northern Virginia, but

if you did, you would be facing some fairly

severe power shortages in the

next five years because of all the data

centers, many of them military related

um that have been and are continuing to

be erected in Virginia,

Northern Virginia. But there are other

areas. There are five or six what I call

hotspots all over the United States

where power issues are going to be very

profound. But it's not just about power,

though I completely agree with what Eric

Schmidt had to say. I think that is the

the Achilles heel, the the black swan,

whatever phrase you want to use that

potentially threatens the US AI

model. And remember that China does not

face this constraint simply because they

have invested absolutely massively in

particularly renewable, but not only

renewable energy. um I mean massively um

and this is allowing them to not think

of um energy as a constraining factor at

all. Um they are able to do whatever

they want to do and they don't have to

think about energy. In fact the price of

energy has actually been falling

for the Chinese. So their particular

model which is what we call distributed

intelligence model as distinct from

concentrated intelligence model

personified by the likes of Stargate the

Chinese one is far less power hungry

anyway and to the extent that they need

that power they have it if you go to a

Chinese conference and it doesn't have

to be an AI conference and speak to all

the the geeks that are there the last

thing they're going to tell you about is

oh we're worried about our power

supplies the last thing. go to a US

equivalent conference and almost the

first thing they're going to talk to you

about is power.

>> What about the second assassin, material

science?

>> It's somewhat related, and I always think

that there's a fairly thin line between

the physics and the chemistry but

essentially it's about degradation

of the materials

that at these incredibly small levels

with all the heat that you were

mentioning rightly um you're seeing the

materials starting to break down.

They're starting to um corrode if that's

the right word. It probably isn't in the

context, but it's something you and I

can

>> depreciate. How's that? How about that?

>> Well, depreciate. Yes, but we're

getting into the language of Michael

Burry there, but yes. All right, let's

call it depreciate in the sense that

they're no longer useful. If that's what

you mean by depreciate, yes, I

completely accept that they're no longer

useful. And Michael Burry will say that a

high-end chip has three years of useful

life. Um, Amazon will claim it's five.

I don't know. Accountants

are going to be forced probably to

follow the absent line, but

essentially depreciation,

corrosion, happens at these

very small levels and that creates all

sorts of secondary issues like as you

mentioned heat um and the result is the

chip becomes less than useful. it starts

to break down. What we call yield, which

is the number of transistors that are

operating within the chip at full

strength, starts to fall fairly

dramatically. Um, and so it's all about

essentially the corrosion of the

metallic properties that exist in the

chip, particularly dare I say, silicon.

There are ways again of buying time. You

could, I think it's called hafnium

or something like that, that gets coated

on the chips.

>> Hafnium is one of those

periodic table metals that you and I

never got down to. But

nevertheless it can

buy a little bit of extra time. But the

point being is that um we really are

fiddling. It's like, you know, as I

said, it's life

extension drugs, if you want to think

about it in that context. Um, but it

ain't going to last for long.

>> And this is where we get to that third

assassin. Michael,

>> can I just add there? I went to my essay

and I'm going to read one sentence, or two,

but it basically says everything that

I've just said, but very very

technically.

>> Transistors now require, and we're

talking really here about materials

science issues. Transistors now require

ghost-proofing such as hafnium oxide

layers. But by 2nm even these are just a

few atoms thick. One missing oxygen atom

causes a short circuit and uneven

deposition creates gaps that

invite electron tunneling. So

you're seeing, what I'm talking about

here is, we're reaching limits of

science, both physics and chemistry,

that are starting to make

making things smaller incredibly difficult.

>> and this is where the third assassin

economics really comes in. You

referenced Michael Burry, who has, you know,

reemerged and has made the following

critique: that the companies that are

spending massively on these chips, they

put the capital up front and, you know,

that is not recorded as a loss

at all. It's, you know, net neutral. The

cost comes and is depreciated over

the weighted life. So if

it had a weighted life of 100 years,

every year in its annual report that

cost would only be 1%. If it had a

weighted life of two years it would take

a 50% hit in the first year and a 50%

hit in the second year. The weighted

average life of chips and certain

data center investments, in my

understanding, from like 2019 to 2021

had been three years. It was extended to

five years or maybe six years for some

companies. And it's my understanding

that a lot of that was for old CPUs, and that

actually was completely legit, like

three years was too short, and so

it had been wrong and it was the

correct thing to make it longer. The

critique is that for these newer GPUs

because the transformation and the

innovation is so rapid, you know, five

or six years is not that relevant, and

the idea that in five years these

chips are still going to have, you

know, serious value is something of

a joke. I will also point out that, you

know, Michael Burry, very very smart

investor, but technically, you know,

someone who had been saying that a lot

earlier than Michael Burry is Jim Chanos,

the short seller noted for shorting

Enron and being early there. Just a

plug: I did interview him in December

of last year about this very issue.

So we can link to that and people

definitely should check this out.

And then also this advanced packaging

thing. I interviewed

a researcher known as Citrini about it

and basically it's

everything you're saying, that

these scaling laws and the improvements

are going to be coming not from the

power of the chip itself but from the

interconnection and the architecture,

basically so that, you know,

you maximize the effective

compute.
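
The depreciation arithmetic described earlier in this turn (1% a year over a 100-year life, 50% a year over a two-year life) can be sketched as follows. This is a minimal illustration assuming simple straight-line depreciation with no salvage value; the function name `straight_line_schedule` and the cost figure of 100 are hypothetical and are not taken from any company's accounts.

```python
def straight_line_schedule(cost: float, useful_life_years: int) -> list[float]:
    """Annual depreciation expense for each year of the asset's life.

    Assumption: straight-line method, zero salvage value.
    """
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    annual_expense = cost / useful_life_years
    return [annual_expense] * useful_life_years


if __name__ == "__main__":
    capex = 100.0  # hypothetical up-front spend on chips
    for life in (2, 3, 5, 100):
        yearly = straight_line_schedule(capex, life)[0]
        print(f"{life:>3}-year life: {yearly / capex:.1%} of the cost expensed each year")
```

The same up-front spend produces a much smaller annual charge when the assumed life is stretched from three years to five or six, which is the accounting lever the critique is aimed at.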

>> There's nothing I can say to dispute. I

agree 100%.

>> I think, you know, Michael, this

issue has been raised to Jensen Huang,

Nvidia CEO, and he has said that with

every new Nvidia chip it does get far

more efficient and that the, you know,

amount of energy it takes goes way

down per chip. To what degree is that a,

you know, fair pushback and a

justification of Nvidia's model,

or do you find issues

with that?

>> Look, I think that he is

speaking correctly when it

comes to capability,

but as you probably know, the cost of

each of those chips and the next

generation chip is rising as a

percentage faster

than the useful compute that those new

chips are producing. Now, as an

economist thinking about that, that

basically says we're heading towards

some sort of crisis point where you

can't just, regardless of cost, continue

to improve the chip if the thing

that you really want it for,

the usable compute, is not rising at a

commensurate rate with the technology.

And this is where the economics really

does come in.

The diseconomies of scale, derived in

part from the physics and material

science that you and I have talked about,

are now starting to weigh very, very

heavily, and I think that's where Michael

Burry is in part coming from. I haven't

seen, and I'll look it up, the Jim Chanos

interview, but there's a lot of other

people that have said this as well that

we're moving into a world where that

monolithic chip that Nvidia has been so

famous for is unlikely to continue to

rule the roost for much longer.

>> And the

replacement of the Nvidia chip, the

dominant Nvidia chip, is not so much the

new dominant player AMD, a competitor to

Nvidia, but rather a custom ASIC chip.

So are you saying that

basically the companies that are

building the data centers and

creating the models are going to be

making their own chips and probably

hiring a company like Broadcom or

MediaTek in order to make their

own chip rather than just buying a chip

from Nvidia or AMD.

>> I think that's absolutely right. I think

what Amazon is doing with Trainium, what

Google is doing with its TPUs are

particularly interesting, but you can

buy them in from third parties. Yes. But

they're actually doing it in-house. And

the chips that they're building,

designing are not

side by side as powerful as the ones

that Nvidia produces. But they're built

for purpose.

They work for what Amazon needs them to

do. They work for what Google needs them

to do. So, it's a bit like buying um I

don't know, a truly magnificent

Mercedes-Benz that's got off-road

capability, but the reality is that

you're basically going to drive around town

with it. It's just not needed to have

that off-road capability, but it adds

huge amounts to the cost. And what

Nvidia has come up, sorry, Amazon and

Google, and I'm oversimplifying here, have done is

come up with a chip that works for

the specific needs that they have for

that chip. Now a lot of these chips are

being used not just on the training side

but on the inference side and this is

something which by its behavior

Nvidia has started to recognize, and it is

moving over to chips that are more

geared towards achieving success in

inference but also the software that's

required to get the best out of those

chips, which is why they bought Groq

with a Q.

>> That was precisely a recognition that

Nvidia is now moving from being simply

a supplier to other big

chip-based tech companies to actually

becoming a player. It's

building its own stack from hardware

through to software. And so essentially

um it's starting to shoot itself I think

in its own revenue foot because it's

starting to compete with its best

customers. Um, and that is something

which can only go on for a certain

period of time. I mean, if I was Amazon

at the moment, if I was Google at the

moment, I would just say to my chip

development department, full speed

ahead, guys. We can no longer rely on

Nvidia because they're actually trying

to become a competitor to us. Um, and so

I think there's a very interesting

low-level civil war breaking out in the

United States at the moment between the

big players in the world today.

>> What do

you think is going to happen to the US

model providers? So not talking about

Nvidia, but I'm talking about OpenAI,

I'm talking about Gemini of Google, I'm

talking about Anthropic, uh, as well as

the other, let's call them lesser

players. Where are they going to be in

three to five years in your view on the

spectrum from they have a product, it's

you know, modestly profitable, somewhat

of a success, but not the lights out

versus this company is not going to

exist anymore?

>> Well, please don't

think I'm trying to be a stock promoter

here, but the model I like most at the

moment is Google's because they

have, and I think they're doing

something which is again happening at

the very early stages but they appear to

be essentially um courting Apple at the

moment and bringing Apple in as an

ally. The great thing about that is that

it can't be seen as a competitor from

an antitrust perspective. It's just to be

seen as an ally. So, I like what

Google's doing. They have a very

powerful model. Gemini is a very

powerful model. They have an

unbelievable distribution capability.

They actually still technically own

Android. And now they are quietly cozying

up to the other great phone based

software company, Apple.

So, I like that they've got most of the

pieces of the jigsaw puzzle in place

already. They still need to work hard on

all of them, but nevertheless, they seem

to be putting it all together almost

better than anyone else at the moment.

Of course, Nvidia, as I said, has broken

ranks with its old model and is now

trying to do all of these things as

well. But the orphans, and I would think

of Anthropic as an orphan,

I think they're going to have a tough

time of it staying independent. I

think unless

OpenAI has the likes of Microsoft

behind it, I wouldn't be...

and of course I suppose the big

Japanese companies that are

supporting OpenAI, but I'm not

a huge fan of OpenAI. I don't think

it's going to be a winner in this setup.

I think they're essentially taking on

more capital cost than they will

be able to generate sufficient

revenues from. So I think OpenAI has got

its work cut out for it to an

extraordinary degree. Amazon is an

interesting player at the moment. It is

doing what Google is doing, the Trainium

chip for instance, but they don't have

the consumer reach that Google has.

It doesn't have a browser like Google.

>> Michael, what is Amazon's model?

>> They are starting to make their own

chips.

>> Their own chips, but they don't have a

model. I think they they own a little

>> They don't have a model. Absolutely. No,

but Amazon is, as I said,

an interesting one, and I think probably,

I'm making a prediction here, but

you know, maybe Amazon will buy

Anthropic and then suddenly it will

jump-start its model position.

The point is Amazon is essentially now

offering um a data service um data

centers but its data centers are

increasingly being offered to third

parties um and it's doing that with its

own chips but all I will say is that you

know not nothing compared to Google

which I think is really doing a great

job at the moment um but I think that uh

Amazon has got an interesting one and

they would be for me a potential um

acquirer of one of the models the orphan

models as I like to think of them that

we're talking about. I mean for instance

another one out there is Meta. I mean,

Meta's had a disastrous year in my

humble opinion. I mean the whole Llama

story, which was, and I'm using the

appropriate euphemism here, put out to

grass in August last year. Llama is

an orphan now. It's a good orphan,

ironically it's an open-source orphan, but

nevertheless

uh it hasn't been improved. not that we

know of uh in any material way since

August last year and Mark Zuckerberg

seems to be going down a completely

different path now and I'm not

exactly sure of what that path is but

Meta would be another company that for

me would on the basis of current

behavior be struggling in five years

time.

>> So Amazon does not currently, I

believe have their own model or any

model that is you know serious they are

a huge cloud provider. they were you

know the first really uh large cloud

provider. Microsoft is now

>> and increasingly Google as well. You

know, cloud computing is a profitable

business and, in

particular, is growing rapidly now, but

how much of that is because the

customers are OpenAI and all of

these other unprofitable AI startups? So

everyone says the demand for

compute is so high, the demand for

compute is so high. And

that is demand from people becoming

customers of data centers, but how much

of it is, you know, real and sustainable?

>> You ask a very profound question which

actually leads us even back to Nvidia.

Nvidia might be immensely profitable and

indeed it is immensely profitable at the

moment but are its customers profitable?

And so to

>> Michael, sorry, an amazing question. I

want to say technically a ton of its

customers are immensely profitable like

Microsoft

>> Yeah, but the customers of its

customers are not profitable.

And to the extent that its

customers are profitable, they're not,

generally speaking, always very

profitable from their artificial

intelligence activities. I mean, to the

extent that its customers might

be doing well, Meta is

able to subsidize its activities in

AI because of the advertising that it

gets from Facebook. But if you actually

look at if you can compartmentalize it

when I ask the question again,

how profitable are Nvidia's customers

from their AI activities? It's a much

more complex question to answer. they've

got associated areas which can subsidize

for now um those areas but one of the

interesting things is is that we've seen

a lot of the companies, Meta being one of

them move out of the fact that they

could finance their AI activities from

free cash flow to now having to borrow

again a slight warning sign echoes of

1999 2000 I don't want to make too great

a parallel, I'm not Michael Burry,

nevertheless

a warning sign. The amount of debt

that's creeping into the system both on

and off balance sheet at the moment um

should be of concern. It's particularly

of concern in related areas like, dare I

say it, Oracle and CoreWeave.

>> Yeah. The point being is it's part of

the ecosystem. So one has to look to

some extent at the health of the

ecosystem as a whole. Though one can

recognize that there are parts of that

ecosystem that ostensibly are very

healthy at the moment.

>> Yes. And Michael, it has been said by

others, and I have said the

following statement as well: that the

money being spent on AI, the people

who are building the data centers and

buying the chips are among the most

profitable and, you know, largest

companies that have ever existed. I

stand by that claim in its technicality.

I want to add the caveat that the

customers of those immensely profitable

companies, namely Microsoft, Amazon, and

Google, those customers are often

VC-backed companies that are losing a

gajillion dollars a year. That's

a technical term. So, so the customers

of Nvidia are making money. The

customers of the customers of Nvidia are

not making money.

>> I'm happy to go with your

qualification.

>> Yes. And this example you gave of

Meta buying a ton of Nvidia chips in

order to make its own process better and

you know, to serve ads with AI,

that is a somewhat rare scenario. I

think a lot of it is cloud computing

that is profitable but the customers of

that cloud computing are losing

a ton.

>> I'm happy to accept your

qualification. No, I'm not going to

speak.

>> And so you make a lot of

military analogies and you basically

compare the US architecture and

Nvidia to the German tanks

during World War II, which were

extremely effective tanks. It's just

that the German economy and

industrial powerhouse was

unable to make enough of them compared

to the Soviet tanks and the American

tanks that the tanks were maybe not as

powerful but they were able to produce

them at scale and you know ultimately

led to defeating the Germans,

thankfully. Tell us about that

analogy.

>> There's a very famous, infamous,

apocryphal story of a rather put out

German tank commander who said, "One of

our Tigers is worth four Shermans. The

problem is the Americans always bring

five." Now that saying has been

subjected to scrutiny and it doesn't

hold precise water, but on the concept

everyone agrees: in the end, if you

can mobilize enough materiel,

you can overwhelm people who have

one-on-one better pieces of equipment than

you do and this is something which the

Chinese are essentially doing now when

it comes to chips, and even David Sacks in

the White House had admitted as much,

that China doesn't need our

chips because what they do is they

essentially amass so many chips from

Huawei that they can outshoot in terms

of usable compute, your earlier term,

an Nvidia cluster, which is really what

we're talking about. So the Huawei

supercluster versus the Nvidia cluster

um there are just so many more Huawei

chips in that cluster and the net effect

is that it outshoots the Nvidia cluster

and that's what has started to happen

in China. Now, it's not fully

operational at the moment. And this

whole will-they-won't-they story that's

coming out of China at the moment with

regard to whether they will allow the H200

or the H100 to be imported from Nvidia

uh is part and parcel. It's caught in

the crossbar mix of my met, but not

entirely um in this whole process at the

moment because the Chinese feel they're

close. My own estimate is that come

2028, they will have met with parity, or

be able to match,

perhaps on scale if not on quality,

anything that can be thrown up by the

likes of... The amount of effort,

of money, of resources that are being

mobilized for the producing of just huge

numbers of chips in China

is such that while it's touch and go and

the comparison today, and David Sacks may

be right or he may be wrong, or may be

technically right but not practically

right, nevertheless in two years' time

he absolutely will be right my own view

is that what China's setting itself up

to do is to tide itself over it

basically needs to buy time probably two

years and it may take a dollop of H200s

H100s from Nvidia for 2026 and 27 but by

2028 it won't need those chips any

longer. And it's not that they will be

able to produce better

chips. It's just that they're going to be

able to produce massively more.

>> You have several addenda in your piece.

The first is a story which I love. It takes

me back 20 years, when I read the

story, you know, as a child, of the

Indian tale about the king that, you

know said I will grant you any favor and

the guy said give me a grain of rice on

day one on day two double it and then

double it on day three. And basically by

the end of the month or by the end of

two months it was a million trillions

of grains of rice. So, you know, the possible

number, I believe that is a quadrillion.

I love that tale. It's the story of

compound interest. How does it apply to

what we're talking about right now?
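A minimal Python sketch of the doubling arithmetic in the tale, assuming the usual chessboard version: one grain on the first square, doubled on each of the 64 squares. The function names `grains_on_square` and `total_grains` are hypothetical and chosen only for illustration.

```python
def grains_on_square(n: int) -> int:
    """Grains on square n when square 1 holds one grain and each square doubles."""
    if n < 1:
        raise ValueError("squares are numbered from 1")
    return 2 ** (n - 1)


def total_grains(squares: int) -> int:
    """Sum over squares 1..squares: 1 + 2 + 4 + ... = 2**squares - 1."""
    return 2 ** squares - 1


if __name__ == "__main__":
    for n in (1, 2, 3, 32, 64):
        print(f"square {n:>2}: {grains_on_square(n):,} grains")
    total = total_grains(64)
    print(f"whole board: {total:,} grains ({len(str(total))} digits)")
```

Under these assumptions the 64th square alone holds 9,223,372,036,854,775,808 grains and the whole board 18,446,744,073,709,551,615, a 20-digit number on the order of 10^19.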

>> Well, look, I just wanted to...

essentially one of the problems that

happens often in the whole area of um

talking about AI is that we get drowned

in big numbers. So, I essentially wanted

to go to one of the biggest numbers I'd

ever seen, which is the number of grains

of rice that will be on the 64-square

chessboard. Um, and essentially use that

um to explain a story. Um, and in fact

it's 1 2 3 4 5 6 7 8 9 10 11 12 13

14 15 16 17... 20 digits on that last

64th square. Um and the point that I

was making here is that to some extent

uh the numbers that are being talked

about by the likes of OpenAI every now

and again start to uh almost approach

the same sort of level of absurdity.

Eventually you run out. You can't

mobilize the amount of rice that's

required to cover that 64th square. Um I

came across a statistic and I'm just

going to quietly bring it up uh

yesterday

when talking about we've mentioned it

earlier in the context of what Eric

Schmidt had to say, but of energy, and

on the current level of energy needs

that OpenAI has, they need to increase

their energy capacity over the next

eight years by I kid you not 125 times.
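To put the 125-times figure in compounding terms, here is a rough Python sketch that takes the multiple and the eight-year horizon exactly as quoted and assumes a constant year-on-year growth rate. The helper names `implied_annual_factor` and `doublings` are hypothetical.

```python
import math


def implied_annual_factor(multiple: float, years: float) -> float:
    """Constant yearly growth factor g such that g**years == multiple."""
    return multiple ** (1.0 / years)


def doublings(multiple: float) -> float:
    """How many successive doublings it takes to reach the given multiple."""
    return math.log2(multiple)


if __name__ == "__main__":
    factor = implied_annual_factor(125, 8)
    print(f"implied annual growth factor: {factor:.3f}")
    print(f"doublings needed: {doublings(125):.2f}")
```

On those assumptions, a 125-fold increase over eight years works out to capacity growing by roughly 83% every year, or close to seven doublings in eight years.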

Now, these are the sorts of compounding

numbers that eventually

cause me to say enough's enough. This

isn't possible. You can't carry on like

that. Um, and

the whole process starts to come off

the rails. I'm sure that that Indian

prince or king eventually, when things

were starting to get to the 24th square, was

saying, "Oh my god, I'm going to

bankrupt this nation." you know, the

number of grains of rice that's on the

24th square is just more than three

years worth of production. And I think