Sergey Brin: Lessons from Google Glass + Why Every Computer Scientist Should be Working on AI

Transcript

It's a race. It's a race to develop

these systems. Is that why you came back

to Google?

Um I mean I think as a computer

scientist uh it's a very unique time in

history. Like uh honestly anybody who's

a computer scientist uh should not be

retiring right now should be working on

AI. That's what I would just say. I mean

there's just never

been a greater sort of problem and

opportunity a greater cusp uh of

technology. Um so I don't I wouldn't say

it's because of the race. Uh, although we

fully intend that Gemini will be the

very first AGI clarify that

uh but uh to be immersed in this uh

incredible technological revolution I

mean it's unlike you know I went through

sort of the web 1.0 thing. It was very

exciting and whatever. We had mobile, we

had this, we had that. But uh I think

this is scientifically

uh far more exciting and I think uh I

think ultimately the impact on the world

is going to be even greater in as much

as you know the web and mobile phones

have had a lot of impact. Um I think AI

is going to be vastly more

transformative.

So what do you do day-to-day?

I think I torture people like uh Demis

um who's amazing by the way. He

tolerated me crashing this uh fireside.

Um I'm in the you know I'm across the

street uh you know pretty much every

day. Um and they're just uh uh people

who are working on the key Gemini text

models on the pre-training on the

post-training mostly those I

periodically delve into some of the

multimodal work. Uh, Veo 3, as, uh, you've all

seen.

Um but I tend to be uh pretty deep in

the technical details. Um and that's a

luxury I really enjoy fortunately

because guys like Demis are you know

minding the shop.

Um and uh yeah that's just where you

know my scientific interest is. It's

deep in the algorithms and how they can

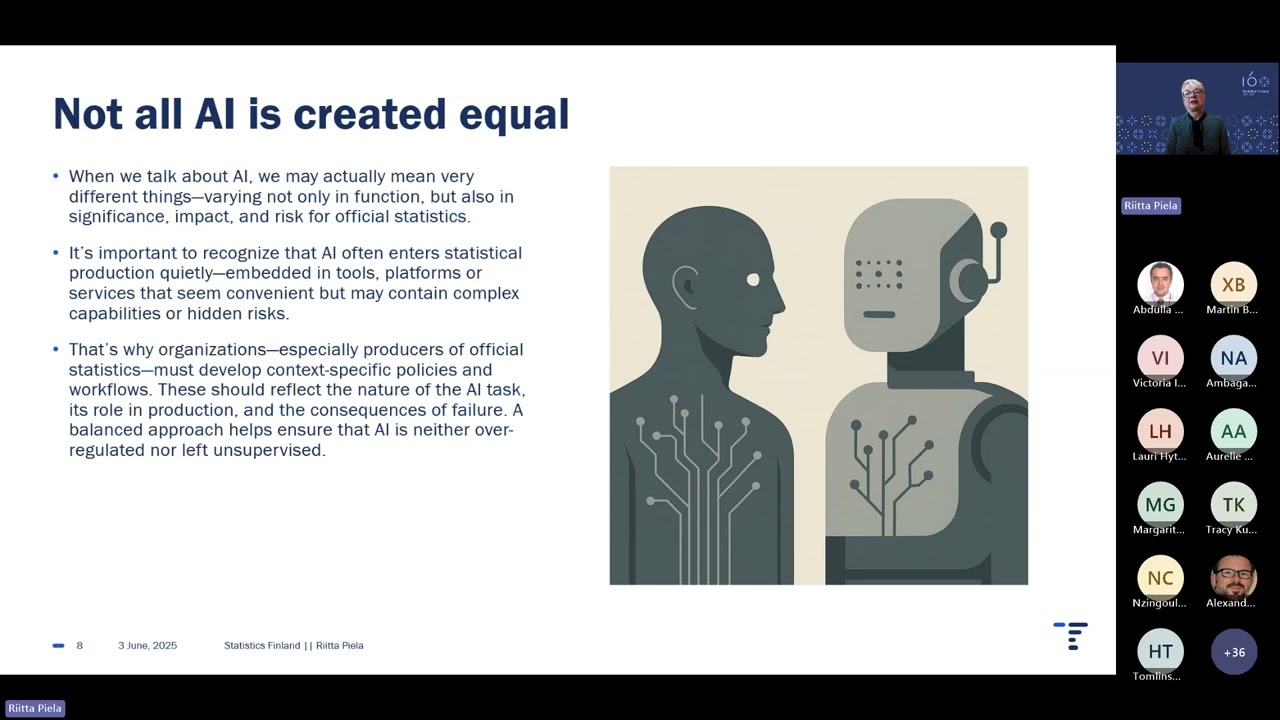

evolve. Okay let's talk about the

products a little bit, some that were

introduced recently. Um, I just want to

ask you a broad question about agents,

Demis, because when I look at other tech

companies building agents, what we see

in the demos is usually something that's

contextually aware, has a disembodied

voice, is often interacted uh with you

often interact with it on a screen. When

I see DeepMind and Google demos, often

times it's through the camera. It's very

visual. We there was an announcement

about smart glasses today. So talk a

little bit about if that's the right

read, why why Google is so interested in

having an assistant or companion that is

something that sees the world as you see

it. Well, it's for several reasons,

several threads come together. So as we

talked earlier, we've always been

interested in agents. That's actually

the the the heritage of DeepMind

actually. We started with agent-based

systems in games. We are trying to build

AGI, which is a full general

intelligence. Clearly that would have to

understand the physical environment,

physical world around you. And two of

the massive use cases for that in my

opinion are a truly useful assistant

that can come around with you in your

daily life, not just stuck on your

computer or one device. It needs to we

want it to be useful in your everyday

life for everything. And so it needs to

come around you and understand your

physical context. Um, and then the other

big thing is I've always felt for

robotics to work, you sort of want what

you saw with Astra on a robot. And I've

always felt that the the bottleneck in

robotics isn't so much the the hardware,

although obviously there's many many

companies and and working on fantastic

hardware and we partner with a lot of

them, but it's actually the software

intelligence that I think is always

what's held um robotics back. But I

think we're in a really exciting moment

now where finally with um these latest

versions especially 2.5 Gemini and more

things that we're going to bring in this

kind of Veo technology and other things I

think we're going to have really

exciting uh algorithms to make robotics

finally work in in in its and you know

sort of realize its potential which

could be enormous. So I think this and

and then in the end AGI needs to be able

to do all of those things. So for us and

that's why you can see we always had

this in mind that's why Gemini was built

from the beginning even the earliest

versions to be multimodal and that made

it harder at the start because it's

harder to make things multimodal than

just text only. But in the end I think

we're reaping the benefits of those

decisions now and I see many of the

Gemini team here in the front row of the

correct decisions we made. They were the

harder decisions but we made the right

decisions and now you can see the fruits

of that with all of what you've seen

today. Actually, Sergey, I've been

thinking about whether to ask you a

Google Glass question. Oh, fire away.

What did you learn from Glass that

Google might be able to uh apply today

now that it seems like smart glasses

have made a reappearance? Wow. Yeah. Uh

great question. Um I learned a lot. I

mean, that was um I definitely feel like

I made a lot of mistakes with Google

Glass. I'll be honest.

Um I am still um a big believer in the

form factor. So I'm glad that we have it

now. Uh and now it's like looks like

normal glasses doesn't have the thing in

front. Uh I think there was a technology

gap honestly. Now in the AI world, the

things that these glasses can do to help

you out without constantly distracting

you, that capability is much higher. Uh

there's also just um I just didn't know

anything about consumer electronics

supply chains really and how hard it

would be to build that and have it be at

a reasonable price point um managing all

the manufacturing so forth. Um this time

we have great partners that are

helping us build this.

Um so that's another step

forward. Uh what else can I say? I do

have to say I miss the the um airship

with the wingsuiting skydivers for

the demo.

Honestly, it would have uh been even

cooler here at Shoreline Amphitheater

than it was up in Moscone back in the

day. But maybe we'll have to we should

probably polish the product first this

time.

Ready and available and then we'll do a

really cool demo. So that's probably a

smart move. Yeah. What I will say is I

mean look we've got obviously an

incredible history of glass devices and

smart devices so we can bring all those

learnings to today and very excited

about our new glasses as you saw but

what I' what I've always always talking

to our team and Shahram and the team about

is that I mean I don't know if Sergey

would agree but I feel like the the

universal assistant is the killer app

for smart glasses and I think that's

what's going to make it work apart from

the fact that it's all the tech the

hardware technology has also moved on and

improved a lot, is this. I think I

feel like this is the actual killer app,

the natural killer app for it. Okay.

Briefly on video generation, I sat uh in

the audience in the keynote today and

was like fairly blown away by the level

of uh improvement we've seen from these

models and I I mean you had filmmakers

talking about it in the

presentation. I want to ask you Demis um

specifically about model quality. If the

internet fills with video that's been

made with artificial intelligence, does

that then go back into the training and

lead to a lower quality model than if

you were training just from human

generated content? Yeah. Well, look, we

we you know, there's a lot of worries

about this so-called like model

collapse. I mean, video is just one

thing, but in any modality, text as

well. There's a few things to say about

that. First of all, we're very rigorous

with our data quality management and

curation. We also, at least for all of

our generative models, we we attach

SynthID to them. So there's this

invisible AI actually made watermark

that um is pretty very robust has held

up now for you know a year 18 months

since we released it. And all of our

images and

videos are embedded with this watermark

that we can detect and and we're

releasing tools to allow anyone to

detect uh uh these watermarks and know

that that was an AI generated um uh

image or video. And of course that's

important to combat deep fakes and

misinformation, but it's also of course

you could use that to filter out if you

wanted to whatever was in your training

data. So I don't actually see that as a

big problem. Um, eventually we may have

video models that are so good you could

put them back into the loop as a source

of additional data, synthetic data it's

called. And there you just got to be

very careful that you're you're actually

creating from the same distribution that

you're going to model. Um, you're not

distorting that distribution somehow.

Uh, the quality is high enough. We have

some experience of this in a completely

different domain with with things like

AlphaFold where there wasn't actually

enough real experimental data to build

the final AlphaFold. So we had to build

an earlier version that then predicted

about a million protein structures and

then we selected it had a confidence

level on that. We selected the top 300,000 to

400,000 and put them back in the

training data. So there's lots of it's

very cutting edge research to like mix

synthetic data with real data. So there

are also ways of doing that. But on the

terms of the video sort of generator

stuff, you can just exclude it if you

want to. At least with our own work and

hopefully other um gen media companies

follow suit and um put robust watermarks

in also obviously first and foremost to

combat uh deep fakes and misinformation.

Okay, we have four minutes. I got four

questions left. We now move to the

miscellaneous part of my question. So

let's see how many we can get through

and as fast as we can get through them.

Um let's go uh to Sergey with this one.

What does the web look like in 10 years?

What does the web look like in 10 years?

I mean, go one minute. Boy, I think 10

years because of the rate of progress in

AI is so far beyond anything we can see,

not just the web. I mean, I don't know.

I don't think we really know what the

world looks like in 10 years. Okay,

Demis.

Well, I think I think that's a good

answer. I do think the web I think in

nearer term, the web is going to change

quite a lot. If you think about an agent

first web, like does it really need to,

you know, it doesn't necessarily need to

see renders and things like we do as as

humans using the web. So, I think things

will be pretty different in a few years.

Okay. Uh, this is kind of an under over

question. Uh, AGI before 2030 or after

2030?

Uh, 2030. Boy, you really kind of uh put

it on that fine line. I'm going to I'm

going to say before. Before. Yeah.

Demis? I'm just after. Just after.

Yeah. Okay. Um, no pressure, Demis.

Exactly. But I have to go back and get

working harder. Is that I can ask for

it. He needs to deliver it. So, Exactly.

Stop sandbagging.

We need it next week. That's true.

Interactive Summary

This video discusses the current state and future of Artificial Intelligence, focusing on Google's advancements with Gemini and other AI initiatives. Key topics include the rapid development in AI, the potential for AGI (Artificial General Intelligence), the evolution of user interfaces through AI-powered agents and smart glasses, and the implications of AI-generated content. The speakers emphasize the transformative impact of AI, comparing it to previous technological revolutions like the web and mobile. They also touch upon the challenges and learnings from past projects like Google Glass, and address concerns about model collapse due to AI-generated training data.
