SQM, HSE, 2024-2025, highlights from lectures 9-16

Transcript

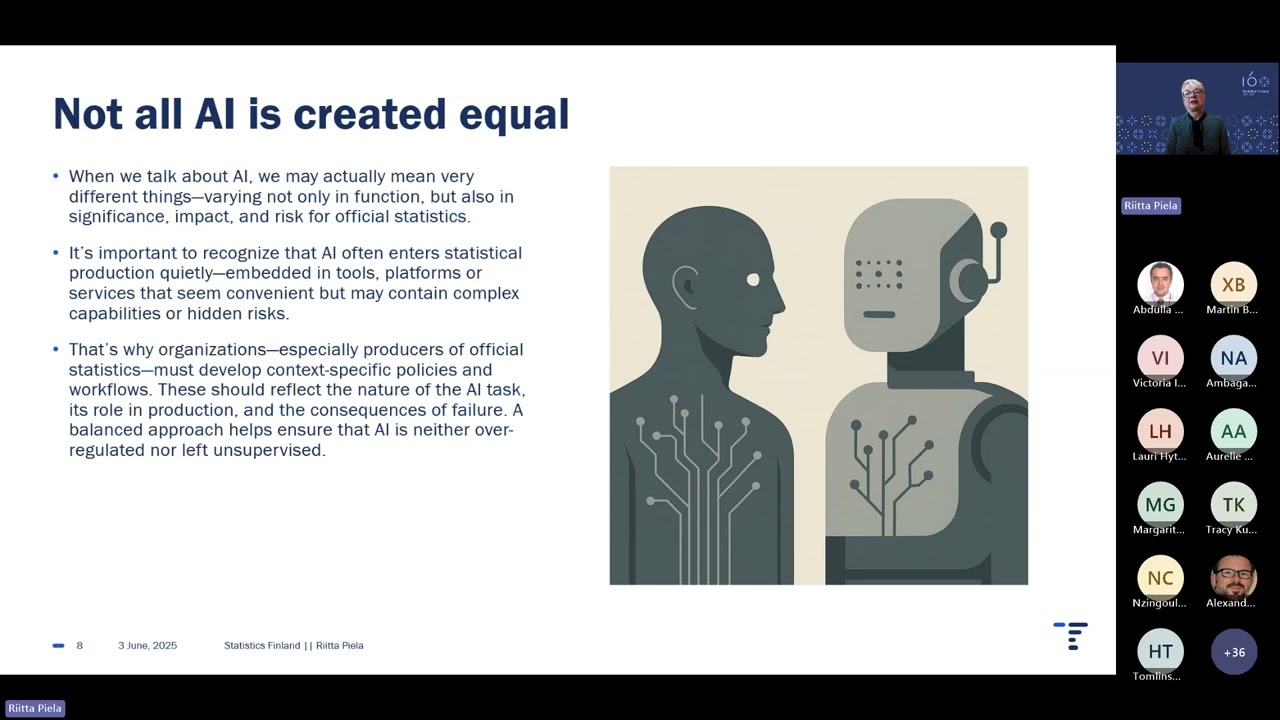

We continue with the same course, SQM: Software Quality Metrics.

Our task is to use metrics to assess the quality of software code and its various other characteristics: not only quality, but also quantity, as well as its depth, breadth, and so on.

With the help of all these metrics, we want to gain control over what we create and over the programmers who create it.

Programmers create software code. If we gain control over all of this, we will be able to create higher-quality code, which means we will have more users, more successful projects, more money, more happiness. There will be more of everything.

At one time, every company, every garage producing software products created its own development regulations. Everyone invented their own, and everyone was proud of it. Wherever you went, people would say: this is how we are organized. Moreover, as soon as there were more than five programmers in a garage, software was immediately created for these regulations: a product that helped manage all the procedures, so that they were registered in a system rather than recorded on paper. Among others, there was a company called Rational Software.

Rational Software also proposed such a process, yet another one; I emphasize that such processes existed in every team, or at least in every second one. But Rational Software turned out to be the one that caught the attention of IBM, and IBM acquired Rational Software.

Why didn't they buy me, my company, or a dozen other companies? Apparently, Rational turned out to be more developed: its process was described in more detail. A multitude of factors played a role. Perhaps it was simply one of the best.

Well, this is all history now. What we got as the outcome is the Rational Unified Process, a unified development process described by this person and the team that worked with him. It turned out to be quite suitable for many projects of that time, and, in my opinion, it remains suitable for projects today.

We want to make the programmer work and to exclude the lazy programmer from the team. This is largely what this framework is about: to make the programmer who doesn't want to use UML use UML, and to make the programmer who doesn't want to write a project plan specify strict milestones, with dates, numbers, and deadlines. Otherwise, we will exclude them from the project, or somehow punish them or force them, however you want to put it.

But we will do it in such a way that everyone follows the rules. Everyone will march in formation, so to speak. Many people may not like this. I don't particularly like marching in formation either, but when you think about the project as a whole, find yourself at the top of this pyramid, and you are the person who pays for all of this, you will understand that without everyone marching in formation you won't be able to complete the project.

It won't work. All these teal organizations are a path to failure, in most cases. Sometimes, in exceptional cases, a cohesive team with good synergy and strong engagement from each member can succeed: in one case out of 100. But in 99 cases out of 100 these teams will fail. They will fall apart, there will be chaos and disorder, and employees will keep moving from one team to another. It will be the usual, classic development market.

Yes, in one percent of cases people do succeed, and then everyone tells the story that they succeeded because they had a teal organization. What is this called? Survivorship bias. Yes, you succeeded, but not because you had a teal organization; you simply got lucky.

What is the Rational Unified Process? Let's take a look. It suggests organizing all development by dividing it vertically and horizontally. What goes vertically? The main processes we engage in, the main activities. There are only 6 of them here; there could be 16 or 20, but the Rational Unified Process suggests 6 main ones. Imagine that these are 6 different people, each of a different color, for example, and each one does something of their own.

One records requirements. That is, they listen to the client and record what the client needs in a specific, strictly defined format for registering requirements. They do not keep the requirements in their head and do not become the "champion of the client", as they say now. They write them down in a document, understanding that they may not be on the project tomorrow, but the document must exist: a document with the requirements.

The second person is responsible for analysis and design. That is, they create the system architecture: they draw those boxes and connect them with arrows, producing a UML document or a set of documents. You can read the details in the book; it explains in great detail which specific documents need to be created.

One person is responsible for implementation. This is us, the programmers: the programmer writes code. The documents provide the requirements; the programmer understands how they should be programmed and implements the assigned, specified tasks. There is a person responsible for testing, who tries to break what the programmer has written, finds errors in it, and registers them in a bug tracker.

And there is a deployment person who, like DevOps as they say now, assembles everything and deploys it to production. Something like that. But the Rational Unified Process didn't just say "for example"; it firmly stated that you must have such people, and for each role it provided templates of the documents they should create.

Of course, in a small group you won't do everything exactly according to these documents; you would just spend a lot of effort on bureaucracy. But if you have, say, 40 programmers on a project, then the Rational Unified Process, or RUP, as it is called, will certainly suit you very well.

Horizontally, we have the so-called iterations. RUP doesn't have sprints, as Scrum proposes, but iterations. Each iteration is similar to a sprint and takes a certain amount of time: a week, a week and a half, or two. The iterations follow one another horizontally over time, which results in iterative development. In each iteration, we engage in these activities for varying amounts of time.

Okay, let's go back to programming now, back to interfaces. Let's take a look at the metrics that evaluate classes in terms of their external interface.

The metrics in the previous lecture were about what happens inside methods. We looked at how methods use variables and attributes within the class, and from the relationships between them we drew conclusions about the cohesion of the class: how cohesive it is, how interdependent its parts are, how necessary all its elements are to each other. The metrics we will discuss today take a different approach. They suggest looking at interfaces, examining the class from the outside.

We have a Shape, that is, a certain form. This shape has nothing inside; it has only one method, area, so we can calculate the area of this figure.

Next, Circle implements this shape. You see, there is an arrow; here I am using the long-forgotten UML. An arrow with a hollow, empty arrowhead is the visual element showing that Circle inherits the properties of Shape.

That is, whatever can be done with a shape can also be done with a Circle: Circle also has an area, and we can call area and calculate it. When a shape comes to us in some main method, we do not know exactly what has arrived: a Circle, or a Rectangle, which can also inherit the properties of Shape, or something else, say a Polygon, which also inherits the properties of Shape. And the thick arrow shows that main uses Shape.

Main does not know what it is working with. This is called polymorphism.

Polymorphism is when an object comes to you and it can take many forms. You do not know exactly what it is at the moment: a Circle, a Polygon, or a Rectangle. Something has come to you, and you are not even interested in knowing what it is. You simply call the area function, and it sorts itself out: the object that came to you will figure it out itself. If it is a Circle, the area will be calculated one way; if it is a Rectangle, another. Polymorphism is the foundation of object-oriented programming, and not only of it. But what we are interested in is decoupling, which we learned about in lecture 6, I believe.

There we said it would be good to make sure that main never knows about Polygon, Circle, or Rectangle. The connections I just drew are harmful. It is preferable that they do not exist, so that the main function never learns about these three creatures and only knows about one: Shape. Therefore, we remove these connections.

How do we achieve decoupling between main and Circle? This connection is removed through an interface. It is very good to do it this way.

The rule is very simple. If you write a function that accepts a specific class as its argument, for example Circle, that is bad design. Memorize this simple rule, and everything will be fine: you should always accept an interface as an argument.
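The rule can be sketched in Python, with an abstract base class playing the role of the Shape interface. This is a minimal illustration under stated assumptions: the lecture's example is language-neutral UML, and the function name `total_area` is hypothetical, standing in for the `main` on the slide. The function depends only on `Shape`, so adding a `Polygon` later would not require touching it.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """The interface: the only type the client is allowed to know about."""

    @abstractmethod
    def area(self) -> float: ...


class Circle(Shape):
    def __init__(self, radius: float) -> None:
        self.radius = radius

    def area(self) -> float:
        return math.pi * self.radius ** 2


class Rectangle(Shape):
    def __init__(self, width: float, height: float) -> None:
        self.width = width
        self.height = height

    def area(self) -> float:
        return self.width * self.height


def total_area(shapes: list[Shape]) -> float:
    # Accepts the Shape interface, never Circle or Rectangle directly.
    # Each object computes its own area (polymorphism), so this function
    # does not change when a new Shape such as a Polygon is added.
    return sum(shape.area() for shape in shapes)


if __name__ == "__main__":
    print(total_area([Circle(1.0), Rectangle(2.0, 3.0)]))
```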

The following metric was proposed: CAMC. It was proposed a long time ago, as you can see. It shows the relatedness of the methods in an interface, using the parameter lists of those methods.

That is, you have an interface with methods defined in it: for example, area, as we just saw, though there are usually more. We look at which parameters are passed to these methods, and based on how related those parameters are to each other, we determine how well the methods belong to the same interface.

Let's look at an example to see what we're talking about. Let's imagine this is C++; I'll practice a little on C++. Imagine you have a class with the methods shown on the left: 6 methods... no, 7. Yes, 7 methods in total, including the constructor and destructor. Now let's arrange horizontally the types that are used in the signatures of these methods.

Look, the first method is the constructor, and here are its types: one, two, three, four.

And here we put ones. The alert type is used, byte is used, bitmap is used, and "star text". That last one is not marked in the table at all, for some reason. I think there's an error here, just a typo: it should be not a star but char star, char*. In any case, there are three ones in this row, for whatever reason. Which types do the other methods use?

I took this picture from an article, so I'm trying to align what we see on the left with what we see on the right. Next, in the table, the destructor has no types at all. The next method also has no types. This one has char*. This one has char* and va_list. Here it's also empty, and here it's char* and int. So this is the matrix, the dependency matrix.

Next, we take k, the number of methods, and l, the number of types. Sorry, let me get this right: k is 7 and l is 6. Is that clear? This is not difficult math. And then we calculate the actual metric.

This is called CAMC, and it is calculated as follows. We sum everything we can sum in this table, namely all the ones: 3, 4, 5, 6, 7, 8, as I count it here. This sum is the sum of all the ones. Well, the entries may not always be ones, you understand, right? For example, if some method had char* twice among its parameters, there would be a two in this matrix.

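We get 8. Then we divide one by 7 times 6 and multiply by 8, which gives 8/42:

$$\mathrm{CAMC} = \frac{1}{k \cdot l} \sum_{i=1}^{k} \sum_{j=1}^{l} o_{ij} = \frac{8}{7 \cdot 6} = \frac{8}{42} \approx 0.19,$$

where $o_{ij}$ is the matrix entry for method $i$ and type $j$.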
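The same calculation as a small Python sketch. The matrix is transcribed from the slide as described in the lecture (the constructor row keeps its three ones); the remaining method rows are left unnamed because their names are not given here. `Fraction` keeps the result exact, so 8/42 is printed in lowest terms as 4/21.

```python
from fractions import Fraction

# Parameter types from the slide (columns), l = 6.
TYPES = ["alert", "byte", "bitmap", "char*", "va_list", "int"]

# One row per method (k = 7); 1 marks a type used in that method's parameters.
MATRIX = [
    [1, 1, 1, 0, 0, 0],  # constructor (three ones, as on the slide)
    [0, 0, 0, 0, 0, 0],  # destructor
    [0, 0, 0, 0, 0, 0],  # method 3: no parameter types
    [0, 0, 0, 1, 0, 0],  # method 4: char*
    [0, 0, 0, 1, 1, 0],  # method 5: char*, va_list
    [0, 0, 0, 0, 0, 0],  # method 6: no parameter types
    [0, 0, 0, 1, 0, 1],  # method 7: char*, int
]


def camc(matrix: list[list[int]]) -> Fraction:
    """Sum of all matrix entries divided by k * l."""
    n_methods = len(matrix)    # k
    n_types = len(matrix[0])   # l
    total = sum(sum(row) for row in matrix)
    return Fraction(total, n_methods * n_types)


print(camc(MATRIX))  # 4/21, i.e. 8/42
```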

Something like that. Ideally, the higher this coefficient, the better.

And here is a quote from our favorite author, from one of his books. By the way, he was a big proponent of Agile, at least back then; I don't know how it is now, but it seems to me that he is not such a big proponent of Agile anymore. This is one of his central books. He is one of the authors of the Agile Manifesto: Robert Martin.

He says that classes with "thick" or "fat" interfaces, meaning interfaces that have a lot of methods, are classes whose interfaces are not cohesive. The more methods an interface has, the less cohesive a class implementing it will be. More precisely, he says this means that the interfaces of such classes can be broken up into groups of methods.
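A hypothetical illustration of such a split in Python, with abstract base classes standing in for interfaces. The printer and scanner names are invented for this sketch and do not come from the lecture; the point is only that a client depending on `Printer` no longer depends on scanning or faxing at all.

```python
from abc import ABC, abstractmethod


# A "fat" interface: any client of it is coupled to all three capabilities.
class OfficeDevice(ABC):
    @abstractmethod
    def print_document(self, text: str) -> str: ...

    @abstractmethod
    def scan_document(self) -> str: ...

    @abstractmethod
    def fax_document(self, text: str, number: str) -> str: ...


# The same capabilities split into small, cohesive interfaces.
class Printer(ABC):
    @abstractmethod
    def print_document(self, text: str) -> str: ...


class Scanner(ABC):
    @abstractmethod
    def scan_document(self) -> str: ...


class BasicPrinter(Printer):
    def print_document(self, text: str) -> str:
        return f"printed: {text}"


class MultiFunctionPrinter(Printer, Scanner):
    def print_document(self, text: str) -> str:
        return f"printed: {text}"

    def scan_document(self) -> str:
        return "scanned page"


def print_report(printer: Printer, report: str) -> str:
    # This client needs printing only, so it depends on Printer alone.
    return printer.print_document(report)


print(print_report(BasicPrinter(), "Q3"))  # printed: Q3
```

A printer-only device forced to implement the fat `OfficeDevice` would have to stub out scanning and faxing; with the split interfaces it simply implements `Printer`.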

Since there are so many methods, they practically ask to be separated.

A few years ago, together with several students from the Higher School of Economics, we conducted a study.

This was in 2021. The students have since moved on, but the research remains: the article has been published, and several professors from the Higher School of Economics participated. We helped a little on our side, and the students did as well. They compared several metrics that we have studied together; we studied all of them except PCC, but that's not a big deal. They took 7 metrics and a set of Java classes, and on these classes they calculated cohesion using the different metrics. Then they looked at what these metrics indicated.

Some metrics say that a class is very cohesive, while others say it is not. And an interesting irregularity was discovered, an interesting pattern of correlations. Where the number is higher, the correlation is strong. Where the number is lower, below zero, the correlation is negative, meaning the metrics move in opposite directions.

One metric says that the class is cohesive, while another says it is not. For example, take TCC and LCOM: if you look at these two metrics, you will see that they hold diametrically opposed views of the same Java class. And if you look at LCOM5 and LCOM2, they naturally agree with each other. The others, too. For example, NHD, which we looked at today, and LCOM2: you see, it's 0.5. There is a correlation, but it is weak. And this one is actually a negative correlation, -0.75.

So who should you believe? For the same class, they will say completely different things: one will say the class is cohesive, while the other will say no. And this was done on a large volume of classes; you can open the article, which states how many classes were analyzed. The analysis is based not on one class but on hundreds, tens of thousands, maybe even hundreds of thousands of classes. So make your own decision.

One of the first articles that is quite popular and widely relied upon presents a set of metrics called MOOD, Metrics for Object Oriented Design.

It proposes about six different metrics. There is no point in looking at all of them; we will consider four, or even five, as they are somewhat interesting. I don't think you will actively use them, but you should understand that since these metrics exist, people look at your objects from this perspective.

Let's look at a metric called the method hiding factor (MHF). We take the total number of methods, count how many of them have the private modifier, and divide that by the total number of methods. Here we have one private method and two methods in total, so the MHF of this class is one half, 0.5.
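As a per-class sketch in Python: the `Method` record and the method names are hypothetical, and visibility is stored as a Java-style string because Python has no `private` keyword. The original MOOD definition aggregates over all classes of a system; this follows the simplified per-class reading used in the lecture.

```python
from dataclasses import dataclass


@dataclass
class Method:
    name: str
    visibility: str  # Java-style modifier: "public", "protected", "private", "package"


def method_hiding_factor(methods: list[Method]) -> float:
    """Private methods divided by all methods of one class."""
    if not methods:
        return 0.0  # assumed convention for a class without methods
    hidden = sum(1 for m in methods if m.visibility == "private")
    return hidden / len(methods)


# Hypothetical class mirroring the slide: one private method out of two.
methods = [Method("render", "public"), Method("formatLine", "private")]
print(method_hiding_factor(methods))  # 0.5
```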

I was about to say that the higher the MHF, the better, but that would be strange, as we need public methods; I don't think there is a clear conclusion here. In my world, in my understanding of OOP, the lower the MHF, the better; ideally it should be zero, because I believe private methods are an example of poor design. If an object has private methods, it means there is some functionality inside the object that only this object needs: you do not expose it outside, but you use it inside your object; after all, that's why you created a private method.

Most often, private methods are created like this. You had some large public method and noticed some functionality in it that would be good to extract, because it is standalone functionality quite worthy of being reused, even if just once. You extract this functionality into a separate private method and call it from the main public method. This results in some reuse.

It's good if you call it from two public methods; then you have true reuse. Even if it's called from just one method, it's still a form of reuse to some extent, maybe without the prefix "re", just use. But in this case you prohibit reuse from the outside. You have isolated some functionality, but you didn't finish the job: you could have made a public class out of it, turned it into full-fledged functionality, a complete object, maybe even several objects. You didn't do that; you left it inside this class.

What will this lead to? First of all, and most often, it leads directly to the duplication of such functionality in other classes. Something similar will be needed somewhere else, and since your code sits in a private method that you don't expose, the people in the neighboring class won't use it and won't bother to turn your private method into something public. Making it public means violating encapsulation and wondering why you made it private in the first place, what the logic was. Very few people will deal with this; they do not want to touch your class. That is, you have a chance for reuse, but you do not use it.

Let's move on. The next metric is called the attribute hiding factor.

It shows how many of your attributes are hidden in private state. Here everything is clear: the closer the AHF is to one, the better. Ideally, all attributes should be private, so the AHF should be exactly one.

You can take a repository and create some kind of checker, a simple tool that goes through all the classes, calculates the AHF of each class, and outputs an average number for the entire repository. If you do not get one, you have some problems, and you can quite reasonably tell the programmer that they need to fix this.
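A minimal sketch of such a checker in Python, assuming the class and attribute information has already been extracted (for example by a Java parser, which is out of scope here). `ClassInfo`, `repository_ahf`, and `offenders` are hypothetical names, and treating a class with no attributes as fully hidden is an assumed convention.

```python
from dataclasses import dataclass, field


@dataclass
class ClassInfo:
    name: str
    # attribute name -> Java-style visibility: "private", "protected", "public", "package"
    attributes: dict[str, str] = field(default_factory=dict)


def attribute_hiding_factor(cls: ClassInfo) -> float:
    """Share of the class's attributes that are private."""
    if not cls.attributes:
        return 1.0  # nothing exposed (assumed convention)
    hidden = sum(1 for vis in cls.attributes.values() if vis == "private")
    return hidden / len(cls.attributes)


def repository_ahf(classes: list[ClassInfo]) -> float:
    """Average AHF over every class in the repository."""
    if not classes:
        return 1.0
    return sum(attribute_hiding_factor(c) for c in classes) / len(classes)


def offenders(classes: list[ClassInfo]) -> list[str]:
    """Classes that expose at least one attribute, i.e. AHF below one."""
    return [c.name for c in classes if attribute_hiding_factor(c) < 1.0]


repo = [
    ClassInfo("Book", {"title": "private", "content": "private"}),
    ClassInfo("User", {"name": "public", "id": "private"}),
]
print(repository_ahf(repo), offenders(repo))  # 0.75 ['User']
```

The list returned by `offenders` is what you would hand back to the programmer to fix.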

Here it is probably obvious; this metric is quite clear. I think there is no need to even explain why we want all attributes to be private.

The next metric is called the method inheritance factor (MIF). It was proposed back in 1994, 30 years ago, you should understand. The method inheritance factor is the ratio of inherited methods to the total number of methods. In this case, look, we have the method content, which is inherited, because it has the @Override annotation. This means that it came from an interface or, in this case, from a class called Material. The total number of methods is two, and 1 to 2 equals 0.5. Is this good or bad? It's hard to say here.
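A small Python sketch of this per-class reading, using introspection on two classes modeled after the slide (`Material` and `Book`; the method bodies are placeholders). Python has no `@Override` annotation, so here a method counts as "coming from the parent" when some ancestor also defines it. This follows the lecture's reading; the original MOOD formula is system-wide and may treat overridden methods differently, so its numbers can differ.

```python
import inspect


class Material:
    def content(self) -> str:
        return "generic material"


class Book(Material):
    def content(self) -> str:  # in Java this method would carry @Override
        return "book text"

    def title(self) -> str:  # a new method, declared only in Book
        return "book title"


def method_inheritance_factor(cls: type) -> float:
    """Share of the methods declared in cls that an ancestor also declares."""
    own = [
        name
        for name, member in vars(cls).items()
        if inspect.isfunction(member) and not name.startswith("__")
    ]
    if not own:
        return 0.0  # assumed convention for classes without methods
    from_parents = [
        name for name in own
        if any(name in vars(base) for base in cls.__mro__[1:])
    ]
    return len(from_parents) / len(own)


print(method_inheritance_factor(Book))      # 0.5
print(method_inheritance_factor(Material))  # 0.0
```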

I am generally against inheritance, but here it may not be inheritance at all: there could be, for example, the word implements here. If it says implements, then we are dealing not with implementation inheritance but with subtyping: Book is an instance of the type Material. Everything is fine with that; it's not inheritance.

And then, to express my opinion, I would say that this metric should strive for one. I think good design is when every method has this annotation: all methods have @Override. That means your classes implement a design defined in some type hierarchy. There is one type, it has subtypes, those have further subtypes, and your classes fit into this hierarchy. This way you ensure decoupling of the client from your implementation. The client always relies on information about types and on this hierarchy, and draws the knowledge it needs from it. How exactly you implement a type won't matter much to the client, thanks to polymorphism: it sees the type Material and knows that it can be a book, a journal, or a paper. It doesn't matter, because it knows how Material works; it knows its interface. This is the ideal object-oriented design, where every method has an @Override annotation.

They all come from interfaces, and this can be measured with the MIF metric.

The next metric (we have five in total, as I promised, and this is the fourth) is called the attribute inheritance factor (AIF). AIF is defined as the ratio of inherited attributes to all attributes. In this case, we have a Material class, and we extend it. Thanks to this extension and the protected keyword, the Book class will have two attributes, title and content.
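A Python sketch of AIF for the Material/Book example, with attribute visibility recorded as Java-style strings in a hypothetical `ClassInfo` record. It assumes, for illustration, that `Book` declares no attributes of its own, and it treats every non-private ancestor attribute as inherited. The second `Book` variant uses composition, the alternative recommended later in the lecture, and its AIF drops to zero.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ClassInfo:
    name: str
    # attribute name -> Java-style visibility: "private", "protected", "public"
    attributes: dict[str, str] = field(default_factory=dict)
    parent: Optional["ClassInfo"] = None


def inherited_attributes(cls: ClassInfo) -> set[str]:
    """Non-private attributes of all ancestors that cls does not redeclare."""
    found: set[str] = set()
    ancestor = cls.parent
    while ancestor is not None:
        found |= {n for n, vis in ancestor.attributes.items() if vis != "private"}
        ancestor = ancestor.parent
    return found - set(cls.attributes)


def attribute_inheritance_factor(cls: ClassInfo) -> float:
    """Inherited attributes divided by all attributes available in cls."""
    inherited = inherited_attributes(cls)
    total = len(cls.attributes) + len(inherited)
    return len(inherited) / total if total else 0.0


material = ClassInfo("Material", {"title": "protected", "content": "protected"})

# Inheritance: Book extends Material and (by assumption) declares nothing itself.
book_inherits = ClassInfo("Book", parent=material)

# Composition: Book holds a private reference to a Material instead.
book_composes = ClassInfo("Book", {"material": "private"})

print(attribute_inheritance_factor(book_inherits))  # 1.0
print(attribute_inheritance_factor(book_composes))  # 0.0
```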

Those who know Java understand how this works: we inherited the attributes from the parent class. In my world of OOP, I believe this metric should equal zero, meaning we should not receive or inherit any attributes from parent classes. In general, the word protected is a great evil, in my understanding. Why? Because it creates additional coupling, an additional connection between the child class and the parent class. A connection already exists because of inheritance, which is bad in itself, but a protected attribute means that my child class contains functionality that I cannot see, do not control, and did not write; it sits somewhere above.

Now imagine we have a parent class with three child classes, and all three rely on this functionality through protected attributes and protected methods. We change something in the parent class, and this changes all three child classes in an unpredictable manner. The programmers of these child classes suddenly find that the functionality has changed. This is an extremely dangerous situation. It can seem convenient to the programmer, as if we can code once and use it everywhere: we coded it in one place, and they all use it simultaneously.

Unfortunately, such a design can often be seen in Apache repositories, everywhere. In old Java code, if you open the repositories of something like Apache Hadoop or Apache Maven, you will see this everywhere. For some reason, programmers of the 90s and other early years thought this inheritance was really great and used it everywhere. Wherever functionality needed to be reused, they said: okay, if you need functionality from here, just inherit from me and you gain access to everything that is here; and when we change it here, it will change everywhere for you. This is a completely flawed practice; we need to use composition instead. You can read about this on the internet under "composition over inheritance". This is the right approach to designing code reuse.

The last metric from this article is called the polymorphism factor (PF). What is the polymorphism factor? It is the number of methods that override inherited methods, relative to the number of methods that could be overridden. What happens? We have a parent class again, and we inherit from it; it has a method content. Content comes down to us and is also present in the Book class, since we inherit it. These are the very wheels we take from someone else's car, adding our own: we added our own title, and content came to us from above. As a result, we have two methods in Book. Now let's calculate PF: how many methods could we have overridden in this case? One, the content method, but we did not override it; we simply added title. So we overrode none, though we could have overridden one.
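The per-class polymorphism factor as described here fits in a few lines of Python. This is a sketch of the lecture's reading (overridden methods over overridable ones), not the system-wide formula from the MOOD paper. The no-inheritance case 0/0 is mathematically undefined; mapping it to 1 follows the suggestion made in the lecture and is an explicit assumption of this sketch.

```python
from fractions import Fraction


def polymorphism_factor(overridden: int, overridable: int) -> Fraction:
    """Overridden inherited methods divided by inherited methods that could be overridden."""
    if overridden < 0 or overridden > overridable:
        raise ValueError("need 0 <= overridden <= overridable")
    if overridable == 0:
        # No inheritance: 0/0 is undefined; treated as 1 by assumption.
        return Fraction(1)
    return Fraction(overridden, overridable)


# Book: one overridable method (content), none overridden.
print(polymorphism_factor(0, 1))   # 0
# A deep hierarchy offering 15 methods, one of them overridden.
print(polymorphism_factor(1, 15))  # 1/15
```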

As a result, we get zero. I think that if you do not use inheritance at all, you will have zero in the denominator, and then the numerator no longer matters. So your PF will be, I don't know, undefined, because you will have zero divided by zero. Of course, you will say that division by zero is not allowed, but when zero is divided by zero, I have a feeling that it should simplify and result in one, at least in the world of metrics. Let's think further: suppose you could override five methods.

Okay, fifteen. So you use inheritance and have an inheritance tree: you have a parent class, it has its own parent, and that one has yet another parent. In total, this whole tree brings you 15 methods from the parent classes, and you could override all 15, but you override only one. In this case, I would say the design is probably better than if you had overridden all 15, I think. Although, in this world of dirty programming, I don't even know what conclusion to draw here.

And so, this article was published in 1994. In 1998, four years later, different authors published another scientific article, which I decided to present to you. They made the following calculation. Remember what the metrics were called? Yes, MOOD metrics, from the previous article. What did they do? They listed vertically the metrics we saw together. Not all of them: I think we didn't consider this one today, or this one; we looked at this one, this one, this one, and this one, four in total. I've already forgotten exactly which ones we looked at, but it is not crucial. So they listed the metrics written by the authors of the MOOD set against nine different projects, nine commercial projects, and showed the percentages they found in those projects in 1998.

For example, take the polymorphism factor we just looked at together, where we asked which is better, this way or that way. Let's see what turned out in real projects: the polymorphism factor is very low. Apparently, this is how people work: they take whatever hodgepodge has come down to them and don't touch it, changing it only a little. Here you have 6 percent out of the possible: they could have overridden 100 percent of the methods, but they overrode only 6 percent. And so on; there are more numbers, and you can see for yourself, this is 1998. Let's look at another metric, the attribute inheritance factor (AIF), where we said that one is bad. Here it is: 32, 36, 19, 26, 46, and so on. In how many of these projects were programmers reprimanded for exceeding some limits of these metrics? I think zero. What would happen if programmers were reprimanded? This is an open question for you to explore separately.

In all the lectures, I try to use certain metrics as a support for discussions about what quality code is. Don't take this course, or this particular lecture, as an attempt to impose on you or force you to think in terms of one specific metric, or simply to present information to you. My goal is not to teach you some metrics; that is not what this course is about at all, although it may seem so, since we go lecture by lecture and in each lecture we analyze a specific metric or a set of them. But that's not the main point, not the knowledge I would like to convey to you. Moreover, I am not an expert in this knowledge; I learned many of these metrics while preparing this course. Ten or five years ago, I had never heard of some of them, so I am discovering their existence along with you. However, relying on these metrics, I can tell you about software development and overall quality in general, using the knowledge I have accumulated over the last 30 years of software development. In no way is this a course on metrics; that would be completely boring, and I wouldn't be telling you this.

We want to avoid code duplication. In my understanding, code duplication is one of the biggest sins of a programmer. There are different types of code duplicates; we will look at them further today. In the simplest case, it is really just copy-paste: one piece of code inserted into another place, and it works there and it works here. This primitive cloning, as will be shown later, is the first type of cloning; there are four types in total. Every time we do this, we essentially sign our own unfitness for the profession, as I understand it. We are laying a time bomb for future development, for the future programmers who will come after us to this codebase. Any kind of duplication in the code is a clear sign of an unprofessional: duplication of logic, of meaning, of formatting, of artifacts, of tests, any duplication. If you are doing the same thing in two places and, as a programmer, you didn't notice it, didn't realize that the same thing is repeated here, this is a sign of your unprofessionalism. And this comes with time. When I call these programmers unprofessional, I don't mean it in a negative sense; I just want to show that they simply haven't had enough time yet to develop this skill.

It's really just a matter of time.

Extreme programming. You probably know this term; there was a whole book on this topic, followed by a whole series of books, publications, conferences, and a lot of noise around it.

It's really great. I highly recommend reading this book by Kent Beck, who is on the screen, and paying attention to extreme programming. He is a highly respected and accomplished figure in the industry.

I think we didn't look at his work in the previous lectures, so it's worth paying attention to.

In 2000, in his book on extreme programming, he also talks about the importance of eliminating duplicate logic in the system, and he explains what this leads to: if you do it, you end up with many small objects.

Anyone who understands, or is trying to understand, object-oriented programming should know this dilemma: how should a system be designed? Should it consist of a large number of small objects, or of a few large ones?

You often hear criticism of pure OOP whose main message is this: with your pure and beautiful OOP, you lead the codebase into a situation where there are too many objects, and they are very small. One object has two methods, another has one method, a third has two attributes and one method; it becomes hard to understand what is what, hard to maintain, and so on.

My opinion is the opposite. I believe it is good when a system has lots of little objects, because each of them helps us eliminate duplicates.

Suppose you have one large object, for example one that reads content from a file, filters the data by removing spaces, and transfers it to a database. You have a strong, powerful, unified class that can do everything: read from a file, filter, and save to a database, all in one class.

In this case it will be difficult to remove duplication, because next to it there is another class that reads from the console, filters out spaces and something else, and then saves the result to, for example, a file.

This is different functionality with a different implementation, and it is also a single cohesive file, so you end up with two fairly large files.

If you start removing duplicates, you will end up with many more files than two. First, five classes: one that reads from a file, one that writes to a file, one that filters, one that reads from the console, and one that writes to a database. Then you will also need wrappers, that is, classes that combine three of these and perform the entire pipeline, one for each of the two original scenarios.

So instead of two classes you now have seven. If you want to eliminate duplication further, you will have even more. In my opinion, this produces a system that is more understandable, because it consists of small fragments, each with its own isolated functionality. It is also more testable: instead of two tests for two large classes, you will have seven tests written for seven separate classes. All of this makes the whole system more convenient to maintain. Of course, this assumes that you have thought the decomposition through and had the insight to do it correctly.
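A minimal Python sketch of the decomposition described above, assuming in-memory stand-ins for the console and the database so it can run anywhere. All class names (`FileReader`, `SpaceFilter`, `DatabaseWriter`, `FileToDatabase`, and so on) are hypothetical, not taken from the lecture. Five small classes each do one thing, and two wrapper classes compose them into the two original scenarios, giving the seven classes mentioned.

```python
from typing import Iterable


class FileReader:
    """Reads lines from a text file."""

    def __init__(self, path: str) -> None:
        self.path = path

    def read(self) -> list[str]:
        with open(self.path, encoding="utf-8") as f:
            return f.read().splitlines()


class ConsoleReader:
    """Stand-in for console input: serves pre-supplied lines (an assumption, for testability)."""

    def __init__(self, lines: Iterable[str]) -> None:
        self.lines = list(lines)

    def read(self) -> list[str]:
        return list(self.lines)


class SpaceFilter:
    """Removes spaces: the logic both original big classes duplicated."""

    def apply(self, lines: Iterable[str]) -> list[str]:
        return [line.replace(" ", "") for line in lines]


class FileWriter:
    """Writes lines to a text file."""

    def __init__(self, path: str) -> None:
        self.path = path

    def write(self, lines: Iterable[str]) -> None:
        with open(self.path, "w", encoding="utf-8") as f:
            f.write("\n".join(lines))


class DatabaseWriter:
    """Hypothetical stand-in for a database: keeps rows in memory."""

    def __init__(self) -> None:
        self.rows: list[str] = []

    def write(self, lines: Iterable[str]) -> None:
        self.rows.extend(lines)


class FileToDatabase:
    """Wrapper for the first original scenario: file -> filter -> database."""

    def __init__(self, path: str, db: DatabaseWriter) -> None:
        self.reader, self.filter, self.writer = FileReader(path), SpaceFilter(), db

    def run(self) -> None:
        self.writer.write(self.filter.apply(self.reader.read()))


class ConsoleToFile:
    """Wrapper for the second original scenario: console -> filter -> file."""

    def __init__(self, lines: Iterable[str], path: str) -> None:
        self.reader, self.filter, self.writer = ConsoleReader(lines), SpaceFilter(), FileWriter(path)

    def run(self) -> None:
        self.writer.write(self.filter.apply(self.reader.read()))
```

Each of the seven classes can be tested on its own. Going one step further, a single generic pipeline class taking any source, filter, and sink could replace the two wrappers, which is the kind of continued decomposition the lecture describes.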

Here Maxim asks who will pay for the refactoring, since the business doesn't care about the state of the codebase or whether patterns are applied during development. Well, it depends on the business; for some businesses it matters. But even if the business doesn't care, as an architect you shouldn't tell the business that you are doing refactoring. You simply add tickets to your backlog: three tickets for your three programmers, Sasha, Petya, and Masha. Who in your management will dig into which tickets you created? Usually no one; nobody at the company level looks at the details of development. They look at the end result: you closed 120 tickets in a month and wrote 50,000 lines of code. Good, and the details of how you got there are not particularly interesting to them. As an architect, you just need to be confident that you are moving in the right direction, and eliminating these duplicates, these clones, is definitely a step in the right direction. In this way you help the team.

But if you, as an architect, do not do this and just sit back and watch people close their blocks of code, their tasks, their modules, then in the end it all seems to work, but duplication grows: duplicates naturally appear here and there. This is an inevitable process. A programmer sees a certain module and needs to use it somehow, so they write their own algorithm for using that module. Another programmer also wants to use the same module and also implements their own algorithm for using it. The result is two fairly similar implementations. It's fine if they are short, just a couple of lines, but they can also be long.

So, in the first mentions of the term "dead code", the authors tell us that dead code is code that was used in the past, that is, it was executed at some point, but is currently never executed. The word "never" is ambiguous, as you understand. What does "never" mean? How can we prove that something is "never" executed?

Can we, in general, look at a program and prove that some of its components are never executed? You probably know that such things cannot be proven theoretically in the general case: we cannot say how an arbitrary program will behave. In specific cases, however, we can show it for some elements, for example in the case above, where we compared one number with another number.
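Whether code is ever executed is undecidable in general, but the trivial case mentioned above, a condition that compares two number literals, can be detected statically. Below is a minimal Python sketch using the standard `ast` module; the names `find_dead_branches` and `_literal_compare` are hypothetical, and the sketch deliberately handles only comparisons between numeric literals, so it misses almost all real-world dead code.

```python
import ast
import operator
from typing import Optional

# Comparison operators this sketch knows how to evaluate on literals.
_OPS = {
    ast.Lt: operator.lt,
    ast.LtE: operator.le,
    ast.Gt: operator.gt,
    ast.GtE: operator.ge,
    ast.Eq: operator.eq,
    ast.NotEq: operator.ne,
}


def _literal_compare(test: ast.expr) -> Optional[bool]:
    """Return True/False if `test` compares numeric literals only, else None."""
    if not isinstance(test, ast.Compare):
        return None
    operands = [test.left, *test.comparators]
    if not all(isinstance(o, ast.Constant) and isinstance(o.value, (int, float)) for o in operands):
        return None
    values = [o.value for o in operands]
    # A chained comparison like 3 < 2 < 10 is false as soon as one link is false.
    for op, left, right in zip(test.ops, values, values[1:]):
        fn = _OPS.get(type(op))
        if fn is None:
            return None
        if not fn(left, right):
            return False
    return True


def find_dead_branches(source: str) -> list[int]:
    """Line numbers of `if` statements whose body can never run."""
    return sorted(
        node.lineno
        for node in ast.walk(ast.parse(source))
        if isinstance(node, ast.If) and _literal_compare(node.test) is False
    )
```

A real tool would also need constant propagation (to catch `x = 5` followed by `if x > 10:`) and, beyond that, runtime data, which is why "never" cannot be proven in the general case.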

Their conclusion is that dead code hinders code comprehension, that is, it makes it harder to understand what the programmer has written. We have discussed many times that the understandability of code, its maintainability and readability, is the main quality criterion before the code reaches the client.

Once the code has reached the client, the quality criterion is, of course, the number of bugs at runtime that the end user has the opportunity, or the pleasure, of seeing. But everything that happens before that, roughly 90 percent, I'd guess, is determined precisely by the readability and maintainability of the code. If the code is easy to read, understand, and maintain, you have achieved success. So they tell us that dead code is harmful, and we agree with that.

An even more recent article.

For them it is a whole investigation; look, a multi-study investigation into dead code. So people are interested in this, and research is being conducted in this direction, although there wasn't any before.

Here is what they say. Dead code is a common phenomenon, and it is known to be harmful; it is known to be a problem. Nevertheless, this problem seems to have received very little attention from the community, especially from the research community. There have been few studies in this area. At the time the article was written, they say, we did not have broad, fundamental knowledge of why such code emerges, how long it stays in the codebase, and what negative effect, in quantitative terms, it has on development.

There were no such studies. We will look at this a bit later: they have appeared literally in the last few years, but before that there really weren't any, and the topic is very interesting. Perhaps there were not many studies like this, at such scale and so fundamental, because there was less code back then than there is now. Maybe the code was not as accessible: there was no GitHub, no convenient platforms where one could browse open-source libraries so widely, freely, and conveniently.

This is my guess, just an intuition as to why this happened. Apparently, humanity has accumulated millions or even billions of lines of code, and it is finally time to look at them and decide what should be adopted and what constitutes a normal product.

You write that dead code can serve as documentation or as a test? It might, but I would recommend writing documentation or a test instead of relying on dead code that accidentally ended up in the repository. As we already understand, it got there dead right from the start: the programmer made this mistake at the very beginning, introduced this noise immediately, and committed it to the repository. In most cases, this is exactly how it happens.

A practical piece of advice for you: try to get rid of dead code. Do less and think more at the start. Program and design the system according to principles, and keep asking yourself: Can I remove something from what I have designed? Is everything I wrote necessary? Can I make what I wrote shorter? Can I cut out anything unnecessary?

Try not to do anything in advance: do not add things to the repository because they "may be useful in the future". Don't do that; write only what is needed right now. I would not recommend writing anything for future development. Such minimalism, healthy minimalism, should ideally do you a good service and help you get rid of dead code.

A few years ago we published an article. There is one author on it: someone helped me a bit with the work, but it was mostly my idea. I proposed a metric called the volatility of source code. I'll tell you in a few words how it works; maybe you will find it interesting.

Imagine that in this picture we have placed the classes, that is, the files in the repository, on the horizontal axis, and on the vertical axis the number of changes made to each file over the entire history of the repository. For example, say we have 20 files in the repository in total; these vertical bars are those 20 files.

We changed this file a hundred times, this one seven times, this one three times, this one fifteen times, and so on. Then we arrange the bars so that the picture looks roughly like this bell-shaped distribution. After that we calculate the arithmetic mean μ of these values; here it is, the red bar. Then we calculate the variance, the mean squared deviation: for each file we take the difference between its number of changes and μ, square it, sum it all up, and divide by the total number of files.

This variance is proposed as a metric of the cohesiveness of the repository. If all files in the repository are changed with approximately the same frequency, the variance will be close to zero; if you have, say, 100 files in the repository and you changed each of them exactly once, the formula gives exactly zero. The higher this number, the greater the gap between the frequently changed files and the rarely changed files in the repository.

And in my understanding, that is bad. I believe this variance, the volatility of the repository, should be low; we should try to bring it down toward zero.
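A minimal Python sketch of the volatility metric as described: count how many times each file was changed, then take the population variance, V = (1/n) Σ (c_i − μ)², dividing by n as in the lecture. Collecting the counts from the output of `git log --name-only --pretty=format:` is an assumption about how to gather the data, not necessarily how the article did it, and the function names are hypothetical.

```python
from collections import Counter
from statistics import pvariance
from typing import Iterable


def change_counts(git_log_output: str) -> Counter:
    """Count how many commits touched each file.

    Expects the text printed by `git log --name-only --pretty=format:`,
    i.e. one file path per line, with blank lines between commits.
    """
    return Counter(line.strip() for line in git_log_output.splitlines() if line.strip())


def volatility(counts: Iterable[int]) -> float:
    """Population variance of per-file change counts (divide by n, not n - 1)."""
    return pvariance(list(counts))
```

Typical use would be `volatility(change_counts(log).values())`, where `log` is the captured output of that git command. Note that files deleted long ago still appear in the history, so one may want to keep only the paths that currently exist in the repository.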

Let me show you some statistics from this article, which we collected on several repositories. On the horizontal axis is the number of files in the repository, on a logarithmic scale, so the value 4 means 10,000 files. The vertical axis, also logarithmic, shows the variance itself.

There is a fairly obvious, visually obvious relationship between the size of the repository and the variance: the fewer files your repository has, the lower the variance, that is, the closer the files are to each other in how often they change.

And in my understanding, that is good. Let's move on to the second topic of today's session: monolithic repositories.

You probably understand the difference between a monolithic repository, or monorepo, and many separate repositories. Let's not confuse this with monolithic architectures versus microservices.

That is something different. A monolithic architecture is one in which the product is a single unit that provides all functions at once: it accesses the database, renders the user interface, includes, I don't know, a large language model, measures the weather, and manages a bank account. Everything in one. When the architecture consists of microservices, one service has its own web interface and stores data, a second service calculates the weather, a third runs a language model, and so on, and they communicate with each other through HTTP requests, a data bus, or something similar.

That is different, and we are not talking about it right now. I suggest you think about how the repository is organized, say, on GitHub.

You can store your product as a monolithic repository, that is, a repository where everything is together: the user interface, the back end and the front end, the functionality, auxiliary libraries, and documentation, all in one large repository. That is a monolithic repository.

The second approach is to have many repositories. For example, imagine that GitHub is where you store everything, and you have many micro-repositories there: documentation in one, the front end in another, the back end in a third, the database and its environment in a fourth, and so on.

Which is better? How should source code storage be organized: in one monolithic repository or in a large number of separate repositories?

I believe in separate repositories; I am convinced of this. But companies like Google and others think the opposite. I will now show you what they say and why, and we will see who is right.

First of all, engineers at Google say, and this was published by Google in 2018, that they strongly prefer a monolithic repository, because visibility is higher and dependency management is simpler.

The arguments are clear. The first is that everything is in front of us, as if on the palm of our hand: we open one repository, we can search through it, find all the files, see the structure of all directories. The second is dependency management. If your back end depends on the front end, and the front end is at version 12 while the back end is at version 10, you will have mismatches, and they may conflict with each other. If the two components have different life cycles, are stored in different repositories and released separately, have different versioning, with one increasing its version quickly and the other slowly, and even different teams handling the versioning, then integrating them becomes a real task.

That's fine if you have two components, but if you have 200, as is likely in a company like Google, it is much harder. Google has many products, so instead imagine a large company with a single product, such as Uber or Yandex Taxi (although Yandex is also very multi-product). Take a taxi-ordering service like Uber: everything they do is taxis, they have one front end and one back end, but inside that back end there are hundreds of services, hundreds of components that need to integrate with each other. All of them have different versions, and bringing them together will be difficult if they live in different repositories. That is Google's argument.

I don't agree with that argument. It seems to me that this complexity is necessary and right; it should be there. It should not be easy to connect components with each other, because this integration, and the difficulty that arises during it, leads to quality in the end. Since it will be hard for programmers to coordinate components with each other, they will have to write more integration tests and build more continuous integration, continuous delivery, and deployment systems. They will end up with a more complex, and therefore more thought-out and deeper, system for bringing everything together than if everything lived in one large namespace, one large deployment space, where everything is deployed with one button: they just run some small scripts that gather everything together and deploy it as one large package.

I wouldn't do it that way. On the contrary, I would try to extract as many independent libraries and components from the repository as possible. In the last lecture, I told you to look for opportunities, for moments when you can extract something from your library or application and make it a standalone open-source component with its own release cycle, its own tests, its own documentation, its own support system, its own backlog, its own pull requests, its own quality assessment system: everything separate. If you can do this, so that your central system remains small and depends on hundreds of small components, each with its own repository and its own support, ideally all open source, then you will have a much more elegant and well-built system than Google, which strongly prefers a monorepo.

Here is my summary. I have an article on my blog that I also wrote quite a long time ago, back in 2018, roughly in parallel with Google's publication. I have already mentioned some of my arguments, so let me list seven points.

The first is encapsulation. If you do it my way, with many repos rather than a monorepo, that is, many small repositories, then you hide the details, just as in object-oriented programming: one repository knows nothing about another. Hiding implementation details is good. If I don't know how something is implemented inside your component, I interact with its interface, which you control. But if I have direct access to your source files, you don't even know what I will take from them and how I will call it. The result is the tight coupling we discussed: we start to depend heavily on each other because we are close together, and my programmers are naturally tempted to use what your programmers happened to leave in random places.

The second point is fast builds. With a small repository, you can build the entire library in just a few seconds. With a huge repository, you will be waiting hours for the build to finish. How do you develop in that case? It's inconvenient; you want to move fast. You make a change and build it, make a change and build it, push it to CI, and CI builds it on 12 different operating systems. You check the result, two or three minutes have passed, and a new version is ready. The speed of development is much higher.

Third, the metrics are more accurate. You can determine the size of the repository, its coupling, its cohesion, its OOP parameters, its class parameters. All the metrics we have already covered in this course, and will continue to cover, will be accurate in your case, because they pertain exclusively to your repository and not to some abstract jumble of two million files that are not yours. You can measure, and everything is very convenient. Of course, you could say that in a huge repository there is my directory, I work on that directory, and I apply all the metrics there. But there will still be some overlap with what is nearby, one way or another.

Fourth, homogeneous task tracking, which I also think is very important: you have a backlog tied to your codebase, each ticket is linked to a pull request, and only your tickets are in your backlog. If, for example, I create a huge repository on GitHub with dozens of different modules, the backlog will also be very noisy. I will have to search for the tickets that concern me, which takes a long time, and I will need to use labels and some kind of sorting. The backlog will contain, say, 10,000 tickets instead of the two hundred of a small repository, and I will constantly have to search through those ten thousand. Next to me there will be noise: other discussions, other people, other tickets, all concerning other technologies. I program in Java, they program in Python, and here we are discussing completely different things in the same bug tracker. That seems inconvenient to me.

Fifth, a unified coding standard. We talked about this: it is easier to enforce a standard in a small repository than in a huge codebase, where your coding standards simply won't be accepted because other teams have different ones. There will be a conflict, and in the end you will settle for the minimally acceptable, that is, the worst, coding standard. You will be left with three basic rules: do not use tab characters, do not make file names longer than 100 characters, and do not put three empty lines at the end of a file, only two at most. Those will be all the rules you can enforce in a huge codebase, because everything else you try will run into conflicts with other teams, and those conflicts are irreconcilable.

Sixth, short names, which are also very important. With a huge project scope, you end up with long names: long file names, long directory names, long class names, because the namespace is very large and your file needs to stand out against this background so that it differs from all the others. You can't just name it user.java, because there are already 400 user.java files, and searching will be very inconvenient for you. You will have to name it superdetailedmyserverinmicroservicexuser.java, a name of 40 characters, because otherwise a search for user.java will return 20 or 200 other files, and you will get confused.

And lastly, seventh, tests become simpler: you have fewer dependencies, so testing becomes much more compact, manageable, and convenient.

You write that many-repo is a kind of object-oriented approach. Yes, in many ways it is very similar to object-oriented programming; you are doing the same thing, but for packages. Think of each of your repositories as a kind of object: it has its own life, its own life cycle, its own tests, its own build, its own purpose, its own responsibility, a reason it was created, a function it performs. Moreover, many of the repositories we use in a project are created, debugged, released, and then forgotten. They have a stable version, and we use that stable version for many years in a row. In such a repository the volatility, the metric I mentioned to you, is low, because all the files, let's say there are 20 of them, were changed when the repository was created: they were modified, configured, debugged, covered with tests, and then forgotten, and the version was stabilized. It just stands there, and we use it in the main repository, where all the active development takes place. Its life cycle no longer concerns us, and that is right. That is how it should be.

In 1998, the code churn metric was proposed. That is its name, and, interestingly, we will discuss why it is called that. But first let's talk about what it is. The authors of this metric suggest that we can measure the increase or decrease in system complexity with a metric called code delta. Let's start with that.

Code delta is how much the code has changed from version to version, or over a time interval. Yesterday we had 50 lines of code, today we have 20, so the delta of our version relative to the previous one is -30. A fairly understandable metric, called code delta.

They also suggest measuring the total amount of change the system has undergone between builds as code churn: the total change, with positive and negative changes combined into one metric. If the first version had 50 lines of code and the next version has 20, the delta is -30. But we need to look at what happened in between. For example, I started with 50 lines, added 100 lines, and deleted 130. The result is indeed 20 lines, but I added 100 and deleted 130. So my delta is -30, and my total change, my churn, is 100 + 130 = 230. Churn is never negative: it is the sum of these two numbers without their signs. The idea, I think, is clear: churn is the total of everything that has happened to the codebase, where each event is a change to a single line, whether an addition, a deletion, or a modification. Whatever happens, we count how many lines were involved and sum it all up. We may find that our delta went negative while our churn is quite large. That is the metric these researchers proposed.

Where does the word "churn" come from? I don't think you know it; I didn't, at least, before preparing these lectures. It turns out that "churn" is a noun for a device for making butter, in Russian «маслобойня». It is an old, medieval device: a wooden barrel into which milk is poured from the top, and then the drum is spun at high speed. After some time, 40 to 50 minutes as they write, there will be butter inside the drum: the milk gets churned together. That's what they write; I don't know, we'd need to try it, and I'm not sure it would work on the first try. But after prolonged and intense churning you will end up with butter that gathers into clumps, and you can collect it from the walls of the drum and spread it on bread. That is a churn, a butter churn.

But there is also the term "churn rate". Wikipedia says that churn rate is the quantity or proportion of customers who leave the customer base over time, that is, a certain volume of losses in the course of running a business. To simplify it completely, take the programmers on our team over a year: we had 100 people, and by the end of the year we have, say, 80, but during that time 40 left and 20 came in. The churn here is 40 people, the ones who left, even though it might look as if we went down by only 20. The movement was much greater: many people left.

Then some number of new people came, but the churn shows the full loss we experienced over the year: 40 people out of 100, a churn rate of 40 percent. Apparently, the researchers based their term on churn rate and brought it into programming, saying that we have code churn.

Let's look at a motivational example. I think I have already told you about it in detail, but let's nevertheless read it from left to right. Imagine that on the left you have your first commit: a class Book with one attribute and one constructor. Everything is fine; at this point churn is zero, because we are just starting.

In commit number two, we add four lines and remove the word "final": look, the word "final" is gone from here, which means we modified this line, and we added these four. So our churn is plus five: five lines added or modified, and no deletions.

Finally, commit number three. We add the word "final" back, so we modified this line again; we also modified this other line, and we removed the two lines below. So we have two lines modified and two lines removed: plus two and minus two.

What is our delta from the first commit to the third? If you compare the code on the left with the code on the right, you will see that one line at the top changed and two lines were added at the bottom, so we have +3 and nothing removed: our delta is +3. Our churn, however, sums all the pluses and all the minuses without signs: +7 and -2 give 7 + 2 = 9. So churn is 9 and delta is 3. Churn is usually greater than the delta, sometimes equal, but it can never be less than the absolute value of the delta, because every line that contributes to the delta is also counted in the churn.
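To make the difference between delta and churn concrete, here is a minimal Python sketch that computes both from a sequence of file snapshots using the standard `difflib` module. The `Book` snapshots are hypothetical stand-ins, not the exact code from the slide, and the function names are illustrative. One caveat: `difflib` counts a modified line as one deletion plus one addition, whereas the lecture's example counted a modification as a single change, so the numbers here are not meant to reproduce 9 and 3.

```python
import difflib


def added_deleted(old: list[str], new: list[str]) -> tuple[int, int]:
    """Count lines added and deleted between two snapshots of a file.

    difflib.ndiff marks added lines with '+ ', deleted lines with '- ',
    and emits '? ' hint lines, which we ignore.
    """
    added = deleted = 0
    for line in difflib.ndiff(old, new):
        if line.startswith("+ "):
            added += 1
        elif line.startswith("- "):
            deleted += 1
    return added, deleted


def delta_and_churn(versions: list[list[str]]) -> tuple[int, int]:
    """Code delta: net change in line count from first to last version.
    Code churn: added + deleted lines summed over every consecutive pair."""
    churn = 0
    for old, new in zip(versions, versions[1:]):
        added, deleted = added_deleted(old, new)
        churn += added + deleted
    delta = len(versions[-1]) - len(versions[0])
    return delta, churn


v1 = ["class Book {", "  final String title;", "}"]
v2 = ["class Book {", "  String title;", "  int year;", "}"]
v3 = ["class Book {", "  final String title;", "}"]
print(delta_and_churn([v1, v2, v3]))  # (0, 6)
```

Here the delta is 0, because the third snapshot has as many lines as the first, while the churn is 6: the work done in between is invisible in the delta and visible in the churn.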

Look at the table they showed in their article. The first column is the build number: they describe the system's changes as builds rather than versions, but you can easily imagine them as versions in a version control system. Then comes r7; I don't remember what that is, and it's not essential. We are interested in the delta and the churn. As you can see, the delta is sometimes negative, and for some reason it is fractional rather than whole; that doesn't matter, it might be in thousands, for example 5,000 lines of code, or a million lines of code.

The churn, as you can see, is always greater than the delta and always positive, even when the delta is negative, that is, when lines were deleted in that build. Churn only grows: it accumulates, and the longer we work with the code, the more of it we have. The number of lines of code, as we discussed in the first lectures, can go down or up, even down to very small numbers, but churn keeps growing, inexorably moving toward infinity the more we work with the codebase.

And they say that the problem with code delta is precisely that it gives no indication of how much change the system has undergone. When we look at deltas or at lines of code, we can almost never say accurately what changes the system has undergone or how much has changed; we only see the final result. We can take a system with a thousand lines of code as input, a team of programmers works on it for a month, and at the output there are two thousand lines. That doesn't necessarily mean the team just wrote another thousand lines. Most likely they wrote perhaps 20 thousand lines, writing and deleting, writing and deleting, and ended up with some new two thousand. It is important to try to see what changes the system has undergone. Now look at what researchers said in 2015.

Based on their analysis, they conclude that committing files with large cumulative code changes, that is, files with a longer change history, is more likely to result in a negative maintainability change. The important word here is "cumulative". It presumably refers to the changes that accumulate in a file while you are editing it locally. When you send a commit, or rather a pull request, each file carries the set of changes that accumulated while you were working on your branch, and the total number of changes you made to that single file is cumulative across all the commits that touched it. GitHub will simply show you the overall changes for the file. In other words, the cumulative aspect is that GitHub gathers all the changes to a file and shows them together.
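
One possible reading of "cumulative code changes" per file, sketched under the assumption that we already have per-commit (added, removed) counts for each file on a branch, for example from `git log --numstat`. The commit data and the `cumulative_changes` helper are hypothetical. Note that the per-file numbers GitHub shows in a pull request come from the combined diff against the base branch, so they correspond to the net change (the delta); summing per-commit counts, as here, gives churn instead.

```python
from collections import defaultdict

# Hypothetical branch history: one dict per commit, {file path: (lines added, lines removed)}.
COMMITS = [
    {"sort.py": (40, 5), "README.md": (3, 0)},
    {"sort.py": (12, 30)},
    {"sort.py": (8, 8), "tests/test_sort.py": (25, 0)},
]

def cumulative_changes(commits):
    """Sum added and removed lines per file across all commits on the branch."""
    totals = defaultdict(lambda: [0, 0])
    for commit in commits:
        for path, (added, removed) in commit.items():
            totals[path][0] += added
            totals[path][1] += removed
    return {path: (added, removed) for path, (added, removed) in totals.items()}

# Largest cumulative change first: per the 2015 finding, these files are the most
# likely to hurt maintainability, so they deserve the closest review.
report = sorted(cumulative_changes(COMMITS).items(), key=lambda item: -sum(item[1]))
for path, (added, removed) in report:
    print(f"{path}: +{added} -{removed}")
```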

So the takeaway is exactly what we just mentioned: the more you change within a single pull request, the harder it is for people to understand what you did, and overall you are more likely to harm the repository than help it.

Now let's look at a couple of quotes from the technical literature, not scientific literature yet, just popular technical literature. The authors say that technical debt is harmful and causes problems. Let's take the classic book by Martin Fowler, our favorite, called "Refactoring". For those who haven't read it, I strongly recommend it. This book popularized the term "refactoring", and you probably know what refactoring is: modifying code without changing its semantics. We take the same sorting algorithm, it still sorts exactly as it did before, but we make small changes to it so that the code is better structured, looks better, or has less technical debt, meaning less of what we don't like and what we would not have written the first time we wrote it if we could have avoided it.
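
A small illustration of "same algorithm, same results, better code", assuming a bubble sort as the example (the function names are hypothetical). The refactored version changes naming and structure but not behavior, and `same_behaviour` checks that on random inputs. Random testing like this builds confidence that semantics were preserved; it is not a proof.

```python
import random

def bubble_sort_before(a):
    # Original version: correct, but terse names and a manual three-step swap.
    b = list(a)
    for i in range(len(b)):
        for j in range(len(b) - 1 - i):
            if b[j] > b[j + 1]:
                t = b[j]
                b[j] = b[j + 1]
                b[j + 1] = t
    return b

def bubble_sort_after(items):
    # Refactored version: the same bubble sort, with clearer names and a tuple swap.
    result = list(items)
    for end in range(len(result) - 1, 0, -1):
        for i in range(end):
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
    return result

def same_behaviour(old, new, trials=500, seed=0):
    """Check on random inputs that a refactoring did not change the observable result."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        if old(data) != new(data):
            return False
    return True

print(same_behaviour(bubble_sort_before, bubble_sort_after))  # True
```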

In the book, which I am quoting here, he even talks about interest payments, borrowing banking terminology quite aptly. He says you can tolerate some interest, but if the payments become too large, as Artem correctly pointed out, you go bankrupt: you are overloaded with these payments and need far too much refactoring. He goes on to say that it is important to manage this debt, that is, to keep doing a bit of refactoring and continuously improve your code, even where it might not seem necessary; then the overall pressure of this debt on you and your repository will decrease.

The first interesting article is quite recent, as they all are: in 2014, literally ten years ago, it proposed the term self-admitted technical debt. This is technical debt that a programmer creates consciously and, moreover, acknowledges, often in writing. It refers to the familiar TODO and FIXME markers you already mentioned today. When I do something wrong, I'm not making the mistake by accident; I understand that it is a mistake, consciously put it into the repository, and often write a comment on top of it saying that this should be done differently, but there is no time, so someone will come in later and fix it. This is called self-admitted technical debt.
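
Because self-admitted technical debt is written down as comments, it can be found mechanically. Below is a minimal sketch of such a scan, assuming the marker comes right after a `#` or `//` comment start and is one of TODO, FIXME, HACK, or XXX; the marker list and the `find_satd` name are assumptions. A real scanner would need to parse comments properly, since this regex would also match a `#` inside a string literal.

```python
import re

# Assumed marker set; real projects may use other words for the same thing.
SATD_PATTERN = re.compile(r"(?:#|//)\s*(TODO|FIXME|HACK|XXX)\b[:\s]*(.*)", re.IGNORECASE)

def find_satd(source: str):
    """Return (line number, marker, text) for every self-admitted debt comment."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        match = SATD_PATTERN.search(line)
        if match:
            hits.append((lineno, match.group(1).upper(), match.group(2).strip()))
    return hits

SAMPLE = """def sort_numbers(xs):
    # TODO: replace bubble sort with something faster
    for i in range(len(xs)):
        pass  # FIXME handle empty input
    return xs
"""

for hit in find_satd(SAMPLE):
    print(hit)
```

Counting such hits per file or per author is the kind of data the statistical observations below are based on.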

The authors made some interesting statistical observations. Programmers with more experience create more of this technical debt and acknowledge it. You would think it should be the opposite, that the more skilled the specialist, the less of it they should produce, but no, and I understand the logic; it really is the case. This is probably because higher-level specialists feel more comfortable in the codebase: they understand that a mistake they make today can be fixed some time later, it's within their reach, and they know how to fix it. Junior programmers, on the other hand, are wary of such mistakes because they do not understand what consequences they may lead to, and this holds them back; they would rather do it correctly than risk a mistake. That is the first point.

Secondly, I think this is also largely because senior developers most often review junior developers, not the other way around. When a junior developer submits self-admitted technical debt, it is simply rejected: such changes are not accepted, and he is asked to revise them, because his time is cheaper. He is an inexpensive specialist, so he can be asked to finish the job and redo it. The more expensive senior developer, on the other hand, writes a bubble sort, has no time to write another one, is lazy and expensive, and says: for now let it be this way, I take responsibility for it and am ready to take all the consequences upon myself. I understand that it is acceptable here as it is; this sorting doesn't play any significant role, and its speed is good enough. In other words, people who are able to bear more responsibility tend to take it on, and that is probably why this happens.

The second interesting conclusion is that time pressure and code complexity do not correlate with the amount of self-admitted technical debt. Complexity does not correlate, meaning that this technical debt, these TODO markers, can appear in any part of the code, regardless of whether the code is complex or simple.

Another, more recent article, from literally a couple of years ago, analyzed the opinions of different programmers in a focus group and found that the presence of technical debt in the codebase reduces morale, which I would translate as the fighting spirit of programmers: their motivation and their desire to engage in work. Technical debt complicates, slows down, or otherwise hinders the completion of their tasks, the achievement of their goals, and their progress.

Look at how puzzle-driven development works; here is the whole story in one picture, read from left to right.

Commit number one. I am a programmer, and I am sending a Fibonacci function for review. The function should calculate a Fibonacci number: it takes a number as input, and if that number is less than or equal to two, it returns one. After that, I don't know how to implement the Fibonacci function. I don't have time to figure it out, I'm lazy, I'm going on vacation, whatever the reasons may be. I wrote half of the functionality and even wrote tests for it, and they pass. I even wrote another test that fails, because I don't know how to calculate the Fibonacci number for 9; I don't understand this, maybe someone else will figure it out. I'm leaving, the workday is over, take at least this from me.

This is my technical debt, and I describe it using a so-called puzzle. You could call it a TODO or a FIXME; we use the word puzzle, as in the jigsaw puzzles people assemble on a table, where each piece fills in its own part of the picture. The picture exists, we have drawn it, but one piece in the middle is missing and we need to put it there. We describe it in English with the todo marker: first what needs to be done, then that I don't know how to do it, and so on, and then we leave.
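
A sketch of what commit number one might look like, written in Python for illustration (the lecture's actual code and language are not shown here). The `fibonacci` name and the `@todo` comment format are assumptions. Since the build must stay green, the sketch assumes the failing test for 9 is marked as skipped rather than left failing.

```python
import unittest

def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, counting fibonacci(1) == fibonacci(2) == 1."""
    if n <= 2:
        return 1
    # @todo Implement fibonacci() for n > 2. I don't know how to compute it,
    #  e.g. for n = 9; test_nine below stays disabled until this is solved.
    raise NotImplementedError("fibonacci(n) is not implemented for n > 2 yet")

class FibonacciTest(unittest.TestCase):
    def test_small_numbers(self):
        self.assertEqual(fibonacci(1), 1)
        self.assertEqual(fibonacci(2), 1)

    # Assumption: the failing test is skipped so that the build stays green.
    @unittest.skip("puzzle: fibonacci(9) is not implemented yet")
    def test_nine(self):
        self.assertEqual(fibonacci(9), 34)
```

Run through unittest, this reports one passing test and one skipped test: the code is incomplete, but nothing in it is broken.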

The commit goes into the master branch. All tests pass (the failing test for 9 is presumably disabled for now, otherwise the build would not pass), the build passes, and the code is written correctly and cleanly; it just doesn't work completely. It is incomplete: I have technical debt. Now, commit number two.

Another programmer comes in, the same one or a different one, it doesn't matter, and opens this code. But why does this programmer come in? Just a second, let's take a step back. We put this commit into the codebase, and as soon as it lands there, the text in the repository is scanned by a special tool; I'll show you what it is in a moment. The tool scans the codebase, finds this block of text, and creates a ticket from it, say ticket number 250, saying: we have a new task, there is technical debt in the code, we know its location, and we know its identifier. Ticket 250 is assigned to a programmer, and the programmer starts working on it.
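
The scanning step can be sketched as follows. This is not the tool from the lecture: the one-line `@todo` marker, the `Ticket` fields, and the starting number 250 (borrowed from the example above) are all assumptions for illustration. The sketch finds each puzzle, records where it is, and opens one ticket per puzzle.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical, simplified puzzle marker: "@todo" followed by the task text on one line.
PUZZLE = re.compile(r"@todo\s+(.+)")

@dataclass
class Ticket:
    number: int   # ticket id in a hypothetical issue tracker
    path: str     # file where the puzzle was found
    line: int     # 1-based line of the puzzle marker
    text: str     # what needs to be done

def scan_puzzles(files: dict[str, str], first_ticket: int = 250) -> list[Ticket]:
    """Scan every file for puzzles and open one ticket per puzzle."""
    tickets = []
    number = first_ticket
    for path in sorted(files):  # sorted for a stable, reproducible order
        for lineno, line in enumerate(files[path].splitlines(), start=1):
            match = PUZZLE.search(line)
            if match:
                tickets.append(Ticket(number, path, lineno, match.group(1).strip()))
                number += 1
    return tickets

REPO = {
    "fibonacci.py": "def fibonacci(n):\n"
                    "    if n <= 2:\n"
                    "        return 1\n"
                    "    # @todo Implement fibonacci() for n > 2\n"
                    "    raise NotImplementedError\n",
}
for ticket in scan_puzzles(REPO):
    print(ticket)
```

A real tool would also have to recognize puzzles it has already seen on later scans, so that it does not open duplicate tickets; this sketch does not attempt that.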

He opens the code and sees, look how interesting, that it is supposed to work for the number 9. He writes a new test for 9, adds it, and makes a pointless implementation: if the input number is 9, return 34. It works, but he also doesn't know what to do next. He knows how to make this test pass, but not the others, so he adds another puzzle.
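
Continuing the same hypothetical Python sketch, commit number two might look like this. The test for 9 is now enabled and passes, but only because of the hardcoded special case, and a new puzzle records what is still missing. The names and the `@todo` format remain assumptions.

```python
import unittest

def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number, counting fibonacci(1) == fibonacci(2) == 1."""
    if n <= 2:
        return 1
    if n == 9:
        # Deliberately hardcoded: makes test_nine pass, but is not a real implementation.
        return 34
    # @todo Implement fibonacci() for every n > 2 instead of the special case for 9.
    #  I know how to make test_nine pass, but not how to compute the general case.
    raise NotImplementedError("fibonacci(n) is only implemented for n <= 2 and n == 9")

class FibonacciTest(unittest.TestCase):
    def test_small_numbers(self):
        self.assertEqual(fibonacci(1), 1)
        self.assertEqual(fibonacci(2), 1)

    def test_nine(self):
        self.assertEqual(fibonacci(9), 34)
```

Every test passes, and yet `fibonacci(5)` still raises: the defect is real, but it is explicitly documented by the puzzle.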

The code in the second commit is already a bit flawed. The code from the first commit was still okay: part of the implementation was there and working, and the other part was simply missing. In the second commit, however, the programmer made a clear mistake: he hardcoded return 34, and you understand that this is defective functionality. And yet we accept this commit and put it back into the master branch. Why? We forgive the programmer for this defect because he is taking a small step forward; he is pushing development further rather than slowing it down. Yes, he didn't succeed; yes, he doesn't understand how it should work; we forgive him, as long as...