Vibe Coding With MiniMax M2.5


Transcript


In this video I'm going to be vibe coding with the newly released

MiniMax M2.5.

This was a model that released yesterday from MiniMax.

You can see here created February 12th, 2026.

The context is 204,000 tokens,

the input is 30 cents per million tokens, and the output is $1.20 per

million.
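
As a rough back-of-the-envelope sketch, here is what that pricing works out to for a single request. The two prices are the ones quoted above; the function name and the example token counts are made up for illustration.

```python
# Prices quoted in the video, in USD per million tokens.
INPUT_PRICE_PER_M = 0.30
OUTPUT_PRICE_PER_M = 1.20


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the quoted per-million prices."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Hypothetical request: 50,000 tokens of context in, 5,000 tokens out.
print(f"${request_cost(50_000, 5_000):.4f}")
```

Output tokens cost four times as much as input tokens here, so long generations dominate the bill even when the prompt is large.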

So an incredible model.

We're going to be doing a deep dive today on the

benchmarks.

We're going to be putting it through my real-world vibe

coding workflow on production tasks for BridgeMind.

And I'm going to be showing you guys how it performs on the

BridgeBench,

which is a newly released vibe coding benchmark that I've

created that is going to be open sourced soon.

But we're going to be really putting this model to the test

to see how it actually performs.

Because as you guys know,

just because a model performs well on the benchmarks does

not mean that it's necessarily going to be that performant

in real world scenarios.

So with that being said,

I do have a like goal of 200 likes on this video.

If you guys have not already joined the BridgeMind Discord

community, make sure you do so.

This is a community of over 5,000

builders that are shipping daily using vibe coding in their

workflow.

These are the people that are on the frontier of the AI

revolution.

This is the place to be in 2026.

So if you have not already joined this community,

make sure you do so there's going to be a link in the

description down below.

And if you haven't already liked and subscribed,

make sure you do so.

And with that being said, let's get right into the video.

All right.

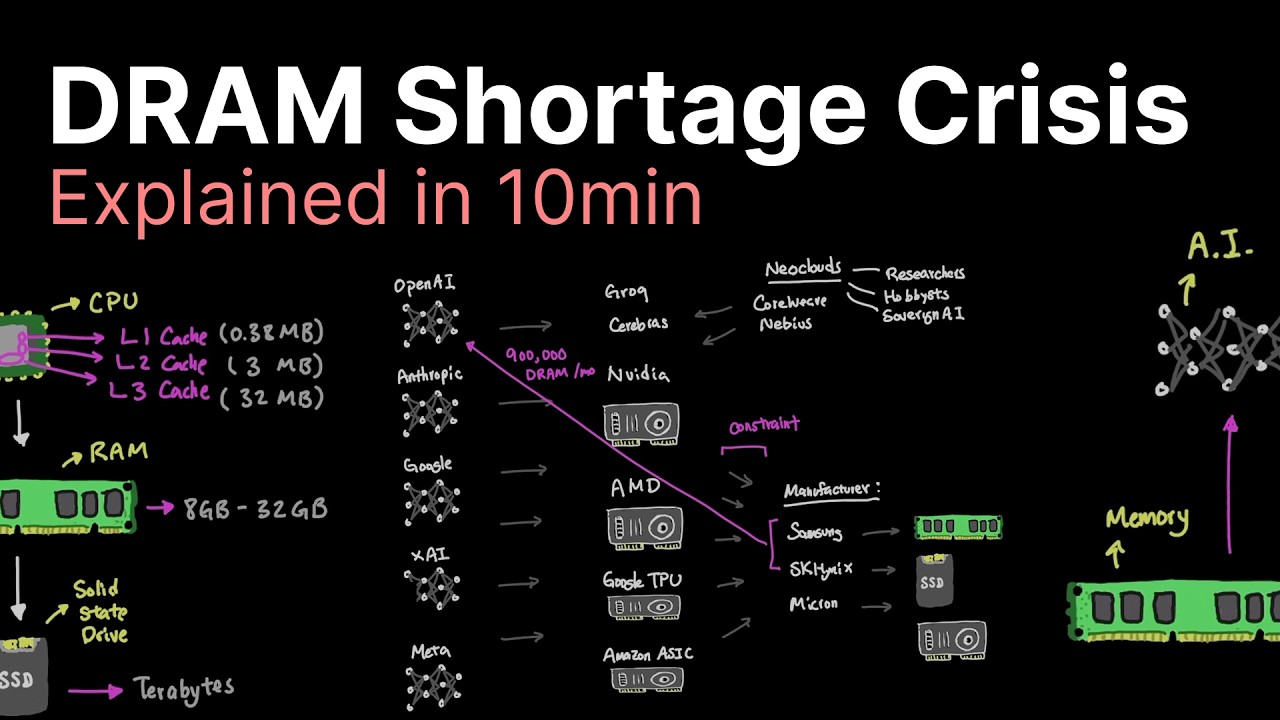

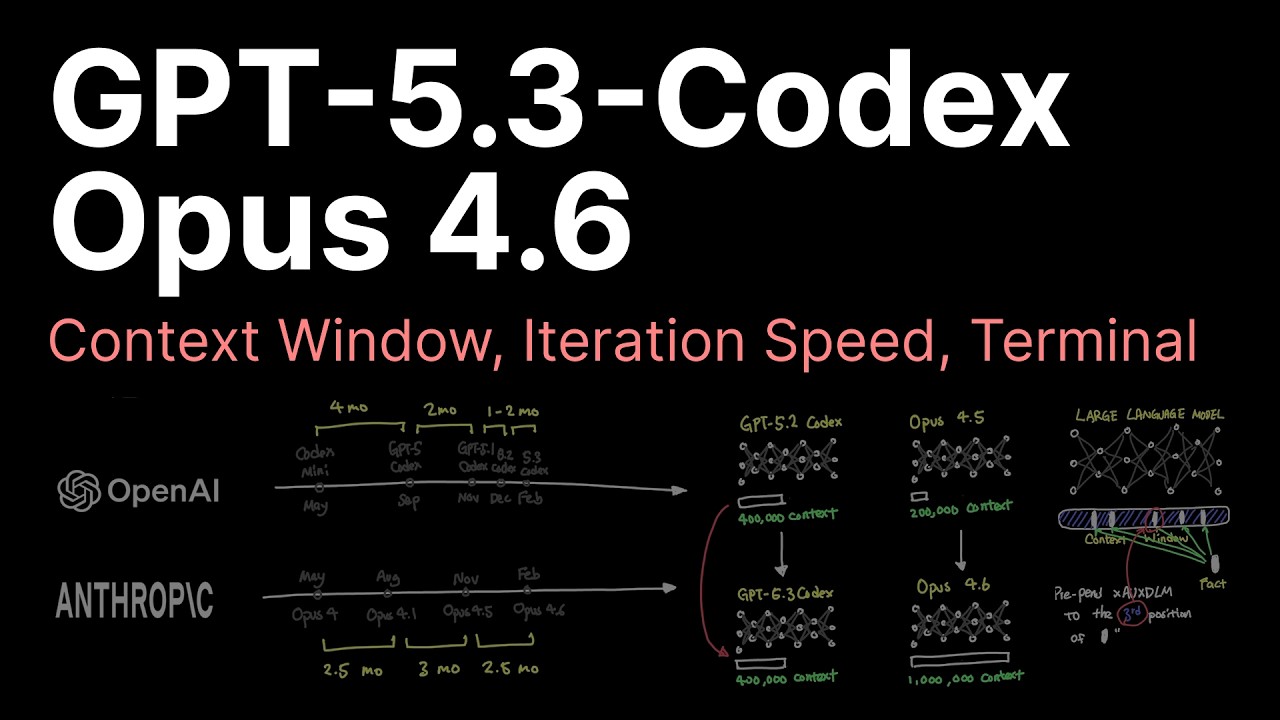

So the first thing that I want to cover is the benchmarks

that were provided directly by MiniMax.

And what I want to draw your guys' attention to is the fact

that MiniMax scored an 80.2% on SWE-bench Verified,

which is only 0.6 away from Claude Opus 4.6.

So this is very impressive.

They also released benchmarks on SWE-bench Pro.

It performed only 0.2 below GPT 5.2 and just about

literally matched Claude Opus 4.6 on SWE-bench Pro and is

about 1.3% lower than Claude Opus 4.5 on SWE-bench Pro,

but very impressive here.

And one thing I want to highlight as well is the jump from

MiniMax M2.1 to MiniMax M2.5.

MiniMax did a fantastic job because, hey,

look at the difference between how much they improved the

model from the M2.1 iteration to 2.5.

You can see in all of these benchmarks,

they just absolutely crushed it in terms of their

improvement from their last model to this model.

Now, the vibe pro,

this is apparently a new benchmark and you can see it's up

there again, you know, with these frontier models.

So, you know,

that is one thing to look at these benchmarks and to say,

oh, wow, you know,

this is performing 80.2% on the SWE-bench Verified

benchmark,

which is a critical benchmark that I personally look to.

I think it's very,

very important to be able to look at this benchmark.

So this is what we're seeing initially out of the

benchmarks that are provided by MiniMax,

but I think it's a little bit more important to also check

what the benchmarks are from Artificial Analysis.

So this is another great benchmarking tool.

And here you can actually see the Artificial Analysis

Intelligence Index.

This is important.

And what you guys see here is that it actually does not

perform well on this intelligence index.

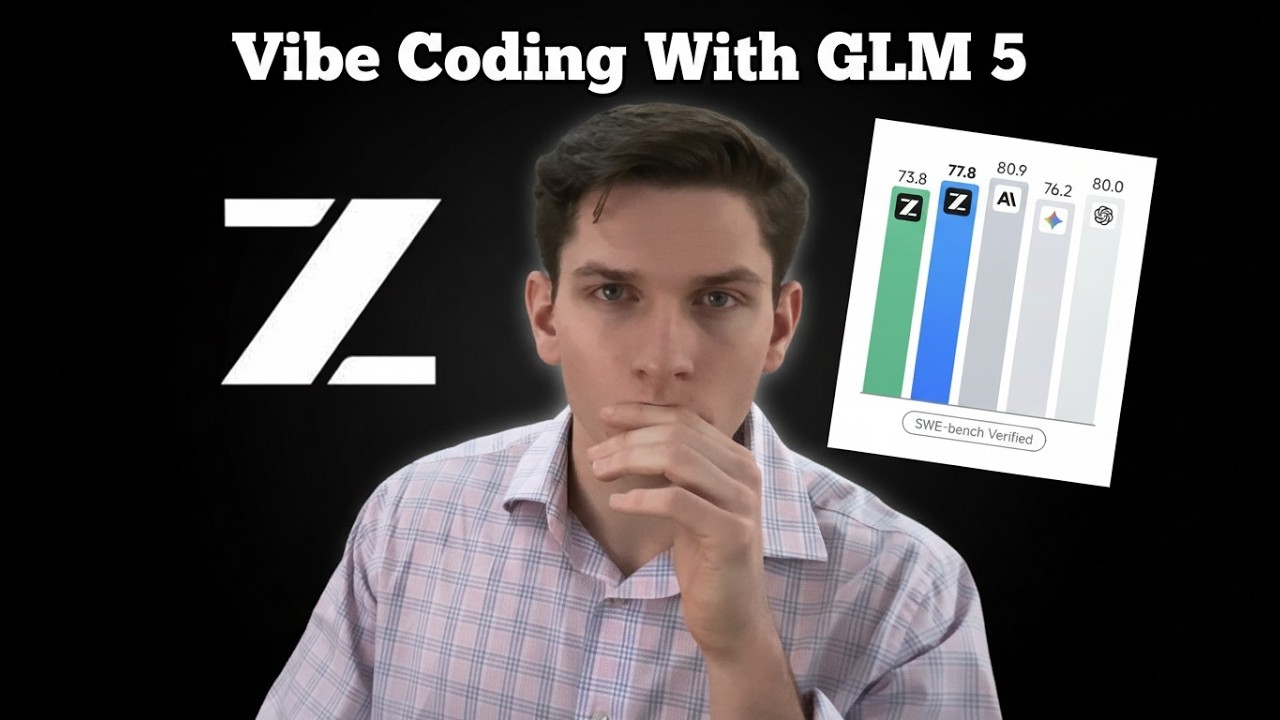

It gets a 42 where GLM5 scored a 50, Opus 4.5 scored 50,

and then GPT 5.2 extra high scored 51. On the coding index,

this is actually, I saw this and I was like, oh, wow,

that's interesting.

It scored 37 on the coding index and GLM5 scored 44.

And then you have some of the frontier models getting like

48 and 49, but it actually performed not that great.

It performs worse than sonnet on this coding index.

So I thought that this was interesting because it performs

very well on SWE-bench Verified,

but then in the Artificial Analysis coding index,

it scores low.

So that's one thing to note.

Now here on the agentic index,

this measures the agentic capability benchmarks.

It scored pretty well.

So you can see GLM5 is actually the top model in this

benchmark, MiniMax M2.5,

falling four points behind Opus 4.5 and GPT 5.2.

So definitely something to note there,

but also one thing I always look at is the hallucination

rate.

So if we go down here in these benchmarks,

I always look at this here and let's actually hold on here.

GLM5 is 34% and where is Minimax M2.5?

I don't even see it.

Okay.

88%.

So this thing's going to be hallucinating all over the

place.

So, and I actually have an example, but yeah,

so high hallucination rate, 87%.

But I mean,

you also could make the argument that have like, okay,

like a lot of these models have high hallucination rates,

right?

But I mean, 88%, that's pretty high,

but you can see like Claude Opus 4.5 is way down here at

57%.

So this model will hallucinate,

but it's just interesting that, you know,

in terms of the benchmarks,

to see it get 37% on this coding benchmark.

And then like right here, or wait,

hold on this one right here, 37.

And then you look at SWE-bench and you're like, okay,

80.2, something's not adding up here.

So I don't know what's up with that.

Let's now check speed.

What are we looking at in OpenRouter?

So it looks like 25 tokens per second.

This is that slow.

I mean, the uptime is good, 99% uptime.

So they did do a better job than GLM5.

The uptime on GLM5 yesterday was absolutely awful.

The speed was absolutely awful for GLM5.

Let's even look at GLM5.

And it's still not great.

Look at the uptime on this model.

13 tokens per second, 12 tokens per second, 33.

What's it actually at from Z.ai?

What's there?

What's the, what are they running at GLM5?

Okay, they're doing 29 tokens per second.

So MiniMax M2.5,

if we just actually look directly from MiniMax.

So it's about on par with GLM5 speed-wise,

but it's slower than Opus 4.6 and the GPT models.
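
To put those throughput numbers in perspective, here is a small sketch that turns tokens per second into wall-clock time. The 25 and 29 tokens-per-second figures are the ones read off OpenRouter above; the 2,000-token response size is an arbitrary illustration, and real latency would also include time to first token, which this ignores.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Estimate how long it takes to stream a response of a given length."""
    return output_tokens / tokens_per_second


# Throughput figures mentioned in the video (tokens per second).
THROUGHPUT = {"MiniMax M2.5": 25.0, "GLM5 (Z.ai)": 29.0}

# Hypothetical 2,000-token response.
for name, tps in THROUGHPUT.items():
    print(f"{name}: {generation_seconds(2_000, tps):.1f} s")
```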

So definitely something to note there,

but with that being said,

I actually want to show you guys next a really important

example that I actually did yesterday on the BridgeBench.

So if you want to check out the BridgeBench,

I think that this is going to be a really useful tool for

us to be able to test models.

So I will say one thing,

let's actually zoom in a little bit.

When I put MiniMax M2.5 through the BridgeBench,

it performed pretty well.

It scored a 59.7 on the BridgeBench.

So 0.4 away from Claude Opus 4.6 in vibe coding tasks.

It was able to complete 100% of the tasks.

And then the cost, I mean, the cost for this model, I mean,

that's obviously going to be a huge aspect of the argument

to be able to use this model.

You know, you can look at the context and be like, ah,

the context isn't great, but the cost, I mean, oh my gosh,

this is,

this is the lowest price model on the market right now in

terms of like having a frontier model that also, you know,

has that good of pricing.

So if you look at the BridgeBench,

you'll see that it only cost 72 cents for me to actually

run this through all 130 tasks in the BridgeBench.

But you know, the speed was 25.8 seconds, whereas, you know,

Opus 4.6 was 8.3 seconds on average per task.
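
Here is a quick sketch of what those numbers imply per task and for a whole run. The 72 cents, 130 tasks, and per-task averages come from the figures above; it assumes both averages are per task and that tasks run one after another, which may not match how the benchmark is actually executed.

```python
TASKS = 130              # number of tasks in the run
TOTAL_COST_USD = 0.72    # total spend quoted for MiniMax M2.5

# Average seconds per task as quoted in the video.
AVG_SECONDS = {"MiniMax M2.5": 25.8, "Claude Opus 4.6": 8.3}

cost_per_task = TOTAL_COST_USD / TASKS


def total_minutes(avg_seconds: float, tasks: int = TASKS) -> float:
    """Total run time in minutes, assuming tasks run sequentially."""
    return avg_seconds * tasks / 60


print(f"cost per task: ${cost_per_task:.4f}")
for name, seconds in AVG_SECONDS.items():
    print(f"{name}: {total_minutes(seconds):.1f} min for {TASKS} tasks")
```

Under those assumptions the cheap model costs about half a cent per task but takes roughly three times as long to get through the full run.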

But if you haven't checked the BridgeBench out,

I would definitely suggest that you check it out.

But also one thing that I've added to the BridgeBench that

I think is really going to be helpful for everyone is look

at this.

So let's you, there's also this creative HTML and this guy,

this will give you a visual for the models capabilities,

right?

And I actually have a video.

Hold on.

This is, this is a very helpful video.

Let's go over here.

So this is the MP4 and we're actually going to be building

in this video.

We're going to use MiniMax to basically create the ability

to export MP4s in that creative HTML.

But we'll get into that a little bit later in the video.

But for example,

I put these models in the BridgeBench through this

creative HTML assignment and I want you guys,

we're going to scroll back in.

So we have GLM5 here, MiniMax M2.5 here,

Claude Opus 4.6 and then Gemini 3 Pro.

Let's just play this and you guys are going to see

something very interesting about this.

And it's going to kind of bring into the context and the

argument of like these models being a little bit cheap.

Okay.

So look at MiniMax M2.5 here.

The task was to create a neon "OPEN"

sign and you can look at Claude Opus 4.6 here.

It absolutely nails it, right?

Perfect brick background, perfect neon sign.

It even has like effects for like the radiating light,

which is insane.

GLM5 like, like it's just crazy.

Like look at the,

even like the font that it used for drawing open.

This isn't that great,

but then look at MiniMax M2.5 on this.

Look at this.

Look at how bad it is.

And I think that this is why the BridgeBench is so

important and what other things that we're going to be

doing is so important because you can have models that are

like benchmark beasts, but then in actual practice,

they're like complete garbage, right?

So there's all these goofy AI influencers that are hyping

up these models, like, "MiniMax M2.5: Opus performance at,

you know, a 50th of the cost," right?

And it's like, that's not really the case.

Look at this.

It may be doing well on the benchmarks,

but why in one shot did it produce this in this creative

HTML assignment where Opus produced this and Gemini 3

produced this, right?

And I think that that's a very, very interesting, you know,

example of like, okay,

it spelled out the word "OBEN", like, that's not "OPEN", right?

And you can see Gemini 3 Pro and Claude Opus 4.6 did

amazing on this task, right?

And that's like the, that's a good task.

That's a good example.

I think let's look at a thunderstorm over city.

I'm pretty sure this actually did a pretty good job on this

one.

So if you guys look at the thunderstorm over the city,

it didn't do as bad on this one.

It actually did a pretty good job here.

So thunderstorm over the city, I would even say that, yeah,

like if you look at a,

let's like zoom this out a little bit just on this screen

so you guys can kind of see it a little bit better,

but yeah, like this did a pretty good job.

GLM5 also did a good job on this one.

And Opus did a good job on this one.

So I really like what MiniMax did for this one,

but let's look at another one.

Look at, let's look at the aquarium fish tank.

This is actually, I did see this one.

So this was interesting.

Look at this one.

This one is like a good example.

It's, it's like all glitchy and the fish,

the way that they're swimming is like,

I don't know if these fish have like, they were born with,

you know, some, some special things about them,

but you know, look at this.

Why is this one swimming backwards?

You know what I mean?

It's like things like that where you look at it and it's

like, okay, well, let's look at what Opus 4.6 produced.

Look, right.

It's like all of the fish are swimming in the right

direction.

These, these weeds down here,

the seaweed is like flowing naturally.

Whereas with M2.5,

it's all like spazzing out and the fish are like swimming

all over the place.

And it's like, okay, when you see stuff like this,

it kind of brings into the argument.

It's like, okay, it scored an 80.2 on sweet bench verified,

but in actual practice, I mean,

my fish are going to be swimming upside down.

Right.

And that's the important thing to know.

And I think that that's like another thing is it's like,

Hey,

what I want to be for this community and what I want to be

for, you know,

this vibe coding revolution in general is I want to be

somebody that people can actually go to and that's not

going to be like hyping up new models.

I think that there's a lot of people that create videos on

the internet and they put it on YouTube and they're just

like hype, hype, hype.

That's not going to be me.

I'm going to be trying to do my absolute best to really put

these models to the test so that you guys get a clear view

of what you're going to be seeing in real world scenarios.

So this is another good example.

Look,

this is the retro space and you guys can see all this at

bridgemind.ai, but this is what Opus 4.6 produced.

Okay.

But this is insane.

So look at this.

So this is what Opus 4.6 produced for space invaders,

right?

You have insane effects.

You have absolutely incredible like look at, look at how,

look how good it is on the, like, look, look at down here.

It's like dodging the bullets.

I mean, that is insane how good this is.

Right.

And then you look at MiniMax.

I haven't even looked at this yet.

How did it do? I mean, look at how bad this is.

Look at the bullets.

Look at the bullets.

They're all like going diagonal, which I mean,

it's all right, but you can see noticeably.

I mean, the score is going up, but you can see noticeably,

like why are all the aliens coming in from like the far

left, right?

Like even stuff like that, like weird details,

like why is the bullets like so diagonal?

Just weird stuff like that.

Right.

Whereas like you compare with Opus and it's like, whoa,

like this literally made like a perfect space invaders

game.

Right.

And I think that that's something that people need to kind

of like, look at the score.

It's going up perfectly.

There's like animations and effects.

So when you look at these benchmarks and it's like, Oh,

well, this scored 80.2.

Well, in real world practice,

is this thing just a benchmark beast or are my fish going

to be swimming upside down and is my space invaders game

going to be like diagonal, right?

So you can make the argument that for the price difference,

there's going to be an argument there.

This model did perform very well on the BridgeBench.

So, you know,

in terms of being able to complete these tasks and earn a

good score, it did do exactly that.

It did very well on the BridgeBench.

But I think that now with all of this, you know,

these benchmark overviews,

now let's actually dive in and start building a very

important feature, using MiniMax to be able to solve bugs

and work across the entire stack to actually build

features,

production features for BridgeMind, to test if this is

going to be a model that is going to be capable in my

personal production vibe coding workflow.

Let's get into it.

Okay, so I now have OpenCode opened up.

And what you guys can see is that I've connected MiniMax M2.5

via OpenRouter.

But one thing that I do want to cover just so that

everybody knows, if you guys are a budget vibe coder,

OpenCode literally offers this for free via OpenCode Zen,

which is like incredible.

So what I'm going to do for this vibe coding test is I'm

going to use OpenCode Zen for free on the right.

And we're going to use OpenRouter on the left.

And the first thing that I want to do is there was a bug

that I had.

And I just want to see, okay, for simple bug findings,

look at this issue here.

Let's see if we can fix this one.

Just very easily.

I mean, this is a very simple issue.

It's a hydration issue over on the BridgeMind UI.

And let's just paste this in and let's see what it is able

to come up with.

Just paste it in.

Now the next thing I want to work on is if we go over to

BridgeMind, if you guys,

I kind of was showing you guys this example,

right in this video that you guys are seeing here,

this was actually made using Remotion.

So I've been working on basically understanding better

agentic workflows.

And I was able to basically give a Claude Code instance

Remotion skills and have it create this based off the HTML

files that were produced in another project, right?

But what I want to do is I think it's probably possible

that we're going to be able to create,

create basically a function.

And we're going to,

this is going to be a really difficult task to see if M2.5,

I think that Opus would be able to achieve this based off

of just my personal experience.

But what I want to do is if we go over to BridgeBench,

I want to create a new feature where people are able to

like click a button and they're able to select which models

they want to compare.

And it's then going to produce an MP4 video of like a grid

layout like this.

Do you guys see what I mean?

So this was like very complex for me to do in Remotion.

And one thing that I noticed is like, if you guys saw it,

it's all like sped up and fast paced.

I don't know why I was doing this last night with Remotion,

but it's just not quite right.

You know what I mean?

Like, it's just like a little bit weird, right?

And I don't want to be the only one that can produce these

comparisons because I think it would be a great opportunity

for marketing purposes for people to be able to like

literally create those comparisons with the click of a

button.

Nobody else is doing that, right?

So I want to do this and I want to create, you know,

a button that I can just click and select, like create,

you know, click, you know,

compare Opus 4.6 with GLM5 and then click that button and

it's able to create basically a grid layout.

That's an MP4 video with the HTML provided that has,

you know, the BridgeMind logo at the top.

That's going to be great for marketing purposes.

So I want to see if I'm able to do this with MiniMax M2.5.

I think that I would be able to do this with, you know,

some of the frontier models.

So to be able to test this with Minimax,

I think is going to be a great opportunity because it's

like, okay, for actual difficult tasks,

is this thing going to understand what I'm trying to

accomplish, right?

Like let's,

let's paste this in and I'm going to use BridgeVoice to be

able to prompt this.

This is the official BridgeMind voice-to-text tool.

Also,

what you guys are seeing is I'm actually using BridgeSpace,

which is the ADE.

Currently it's like in beta,

we're fixing some performance issues,

but that's the ADE that I'm using right now,

the agent development environment.

But let's give this a prompt real quick.

I want you to review this page in the BridgeMind website,

and I want you to take a particular note of the creative

HTML page.

I need you to build a new feature where users are able to

click a button that says produce MP4 comparison.

And when they click this button,

it should show a dropdown of a multi-select menu for the

user to be able to select which models they want to compare

for that particular HTML test.

They should be allowed to select as many models as they

want.

And there needs to be a utility where they're able to

select which models they want to compare and then click

create MP4.

And when they click that button,

it's going to produce an MP4 comparison that allows the

user to compare the models in a grid layout that is branded

BridgeBench with the BridgeMind logo.

You can check out this image as reference so that you

understand something similar to what I want to produce.

Okay, so we're going to drop this in,

and this is what BridgeVoice just outputted.

So it's very, if you guys aren't using voice-to-text tools,

if you want to be a serious vibe coder,

like I see people that are doing whatever, right?

And I'm like,

why are you not using voice-to-text tools yet?

If you're not using voice-to-text tools,

that is insane to me.

So what I want to do now is, hold on here.

I'm going to do one thing in BridgeVoice real quick.

I'm going to change this.

So this is BridgeVoice.

Again, I'm going to just change my,

I'm going to clear my shortcut real quick here.

I'm going to change it to a different shortcut,

but I'm going to take a screenshot here.

Oh, I need to launch CleanShotX here.

All right, so I use CleanShotX for screenshots.

It's just a very easy way to do screenshots,

but I'm going to now do this screenshot of this grid

layout.

And so here's what I'm going to drop in.

And this is even going to give us a good look of,

is it possible?

Okay, I think that this model takes in images, correct?

I mean, some of these models don't take in images.

We're not even going to put it in plan mode.

We're just going to check out its one-shot capabilities.

So is it able to see, and this one's still working.

We'll be able to see this, but it says,

I'll explore the BridgeBench page and understand its

structure first.

And I will say,

if it's able to accomplish this in one shot,

I'll be very impressed.

This is a very difficult task.

To be able to compile HTML in the animated way that it is

rendering and compile it into an MP4 video and to be able to

download that, that is, think about it,

you're talking about mapping.

You're talking about MP4 generation.

This is a difficult feature.

Now, this one on the right here, this took five minutes.

You can see this one took five minutes to complete.

This was using, again, OpenCode Zen.

So this was using the free version that's offered via

OpenCode.

Let's see if it was actually able to fix that hydration

issue.

Let's just go over to here.

Let's launch localhost again.

And look at that.

It looks like it was not able to fix our hydration issue.

We're getting the same exact issue.

So I think that a good test for this would be to say, well,

it didn't fix it, right?

It didn't fix it inside of Minimax M2.5, right?

But if we change the model and we switch the model to a

frontier provider, like Opus 4.6,

is this going to be able to fix it, right?

I need you to, hold on, fix this error.

Okay, I need you to fix this error.

And this is gonna use Claude Opus 4.6, right?

So this is going to give us a good idea of, okay,

it didn't work with Minimax M2.5.

Well, is this just a really difficult error to solve?

That's gonna be Opus 4.6.

I already saw it.

Look how fast it was.

That is insane.

So Opus 4.6, 20 seconds, 20 seconds on Opus 4.6.

And you refresh this.

No more error.

No more error.

So this example right here, I think is a good example,

okay?

And this is my personal experience with some of these

Chinese models.

If you guys have ever heard the phrase made in China,

I think that this applies here.

The only thing that I don't understand is this model

performs so well on the benchmarks, right?

Like you look at the benchmarks, even on the bridge bench,

you're like, okay, it performs so well, right?

Well, why are the fish upside down?

And even like with this one, right?

Like this is another example.

The Minimax M2.5 lava lamp, what's up with it, right?

Why is it just a square, right?

Why is the lava lamp just a square?

And that's why we're creating the BridgeBench and why I

like to run these models through and why I can tell you

guys right now, I am not going to be just a, you know,

an AI influencer

that's like, "MiniMax M2.5: Opus 4.6 performance at a 50th of

the cost."

It's like, no, like I'm a true vibe coder.

Like I know when we have good,

I know the difference between a good model and a bad model

is, is my point.

Okay.

And yes, like I think a lot of people are,

you can make the argument that, hey, MiniMax M2.5,

it's so cheap, right?

It's so inexpensive that for a lot of tasks,

if you prompt it really well and you give it exactly

what it needs to be able to do the task,

it's going to be able to do it for much cheaper than Opus 4.6

would, right?

So you have simple tasks or something like that,

that are not going to be prone to hallucination.

Sure.

Give MiniMax M2.5, give it, give it to M2.5, right?

You're going to save on money.

But the issue is that like, Hey,

I spent five minutes trying to fix a simple hydration

issue.

Opus 4.6 fixed it in 20 seconds, right?

So, you know, even like with that example, right there,

you know, I, we still need to give this one a shot.

And one thing I'll note is that the model is slow.

Okay, the model,

like Opus 4.6 would already be coding this implementation,

like 100%.

I guarantee it.

And MiniMax M2.5 is still thinking.

So I'm going to just like cut, I'm gonna,

I'm gonna cut the video here and I'm gonna let this run

because it could run for like 10 minutes.

So I just don't want to sit here in the video and like have

the video be 40 minutes long while I wait for the response.

But I think that this task that's running here on the left

is going to give us a really good view into like, okay,

how good is this model in real world tasks, right?

Because this is a difficult task.

Let's see if MiniMax M2.5 is able to do it.

And with that being said,

I'll just come back once this task is done and then we'll

review its work.

All right, guys.

So the MiniMax M2.5 model did complete the task,

but I want you to take close note that the last clip that you

guys just saw was like over 30 minutes ago.

It took over 30 minutes for me to produce this from

MiniMax M2.5.

Now that could be because it just released yesterday.

It's a little bit slower.

I mean,

you did see from OpenRouter earlier in the video that,

you know, it's a slower model, but take,

draw your attention here.

So we now have this here, which is what it created.

It did center this well,

because it added in this new option here.

So this is a drop down.

The styling, I will say does look good.

It followed the theme,

but let's now like choose MiniMax M2.5, Gemini 3 Pro,

GLM 5, Claude Opus 4.6.

And remember,

I think that this is an incredibly difficult task.

If it's able to complete it in one shot, I'll be impressed.

Let's, let's drop this in.

It says, hold on here.

So it says,

"MP4 comparison: recording captures canvas elements. For best

results,

wait for animations to fully load."

Let's click start recording.

And this is interesting.

So instead of it, this is really interesting.

So a record.

This is an interesting approach that it took.

So let's do 15 seconds and then let's see what it creates.

Okay, so we're going to do 15 seconds.

Stop, download this.

Whoa.

Alright, so it downloaded a WebM file.

And it didn't work.

It didn't work chat.

It didn't work.

Alright, so yeah, I mean, that's that that is what it is,

right?

It downloaded a WebM file.

And it didn't work.

So I think that, you know, one thing to note is that, okay,

it did do a decent job in creating this here.

But like I said, this is a difficult task.

But it is a task that I do think that Opus 4.6 could have

completed in one shot.

And it wasn't able to do it, right?

So looking at what we have, it's like, okay, like,

first of all, the model is a little bit slow.

That's what we're seeing from these Chinese models,

they can be a little bit unreliable and slower, right?

Because they don't have the amount of GPUs that some of

these other frontier labs have from America, right?

So, you know, I will say that, um,

Not particularly impressed by, number one,

the hydration issue that we had where it was a simple issue

that it wasn't able to fix.

I'm not impressed by some of the results that we have,

like, for example, with the neon light here.

You know, again, just this,

this is what Minimax M2.5 produced.

So it's like, hey, you guys be the judges of that, right?

Open, no, that is a B, that is not a P.

So, you know,

I think that you guys can be the judges of this.

My personal perspective on this is, yes, it's a very,

I don't know what was going on there, but, you know,

it's a very cheap model.

You know, if we go back to OpenRouter, it's like, hey,

at the end of the day,

to some extent you are going to get what you pay for,

right?

So even though this model,

it appears to be benchmaxxed to me, you know,

it did perform well in the BridgeBench.

So even on my own benchmark, it performed well.

Hold on, that's local.

So if we go to BridgeMind here, you can go to BridgeMind,

go to Community, go to BridgeBench, and here it is.

You know, it performed, it got a 59.7.

So, you know,

we're gonna have to improve the BridgeBench so that maybe

we can add in some type of UI element where it's graded by

another LLM, because right now,

how this benchmark works is it's able to,

it gives it a set of tasks,

and based off of the completion of those tasks,

it scores it, right?
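
As a purely hypothetical sketch of that idea (this is not how the BridgeBench is actually implemented), a score could blend task completion with a quality rating from a grader LLM. Every name here, the 0 to 1 judge scale, and the 50/50 weighting are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    completed: bool      # did the model finish the task at all
    judge_score: float   # hypothetical 0..1 quality rating from a grader LLM


def blended_score(results: list[TaskResult], judge_weight: float = 0.5) -> float:
    """Blend completion rate and judged quality into a 0..100 score."""
    if not results:
        raise ValueError("need at least one task result")
    completion = sum(r.completed for r in results) / len(results)
    # Incomplete tasks contribute zero quality regardless of their rating.
    quality = sum(r.judge_score for r in results if r.completed) / len(results)
    return 100 * ((1 - judge_weight) * completion + judge_weight * quality)


# Two finished tasks whose output the judge rated poorly.
print(blended_score([TaskResult(True, 0.2), TaskResult(True, 0.4)]))
```

With a scheme like this, a model that completes every task but produces weak output no longer scores the same as one that completes every task well.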

So we're gonna continue to improve the BridgeBench,

because what we wanna do is we wanna weed out models like

MiniMax M2.5 that are good on benchmarks.

They're good on, you know, let's say, LeetCode tasks,

right?

But in practice, when you give them, you know,

actual assignments like here with our neon sign or our

hydration issue,

they're not great in a real vibe coding workflow.

So that's definitely one thing to note.

I'm gonna be using this on stream today.

I'm gonna be live today right after this video finishes

premiering, but I'm just not super impressed.

I think that from the cost perspective,

if you are a budget vibe coder, like this is great,

because you obviously are getting a much better edition.

You know, if you were a big fan of MiniMax M2.1,

you're gonna love MiniMax M2.5,

but the only issue is it's like, hey, like,

if you're on the frontier, if you're using Claude models,

or if you're using GPT models,

this is not gonna be something that you say, oh, like,

you know, what's up with this?

And I do wanna mention one thing before I end the video,

and that is to share with you guys,

out of all the emails that I get from people that are

trying to sponsor and partner with BridgeMind,

I get more emails, more than anybody,

from these Chinese labs.

They all want me to use their model.

They want me to partner with them.

They want to give me free stuff.

They want to pay me.

And here's what I will say.

Does that reveal some aspect of what's happening here?

Look at this lava lamp.

Is this lava lamp that good?

I don't know.

I don't know how MiniMax M2.5 scores an 80.2% on

SWE-bench,

and then also it performs very well in the BridgeBench.

So, you know, I just, I think we'll have to use it.

I'll use it a little bit more on stream,

so that we get a little bit more of a view of what it looks

like in like a very long session, right?

Maybe like an hour or two.

But so far, it's like, hey, from the creative HTML tasks,

from the tasks that we gave it here,

with this MP4 download, I mean, can we do it with one?

Let's just try it with one.

Can we do this?

Start recording, stop.

Does that do anything?

Let's go here.

Still nothing, yeah.

So we're gonna have to build this in.

If you guys are wondering if Claude Opus 4.6 can do this,

we are going to test this.

We're gonna build this feature out today with Claude Opus 4.6.

And I'm assuming that it's gonna be able to one-shot,

maybe even two-shot it.

So with that being said, I'll be using this a little bit,

but probably not gonna be a model that I'm gonna be using

in my personal vibe coding workflow.

I am not going to give it the BridgeMind stamp of approval

based off of what we're seeing inside the creative HTML

benchmark,

as well as some of these actual production tasks.

What we're seeing is that this model is benchmaxxed.

I'm not going to give it the BridgeMind stamp of approval.

But with that being said, guys,

I will see you guys here on stream in a minute.

And if you guys have not already liked, subscribed,

or joined the Discord, make sure you do so.

And with that being said,

I will see you guys in the future.

Interactive Summary


The video provides a deep dive into the MiniMax M2.5 AI model, released the day before recording, evaluating its performance through official benchmarks and real-world 'vibe coding' tasks using the custom BridgeBench. While M2.5 showed impressive scores on some benchmarks like SWE-bench Verified (80.2%, close to Claude Opus 4.6) and completed 100% of BridgeBench tasks at a very low cost, it struggled significantly in practical scenarios. It performed poorly on Artificial Analysis's intelligence (42) and coding (37) indexes, had an 88% hallucination rate, failed to fix a simple hydration bug (which Claude Opus 4.6 fixed in 20 seconds), and took over 30 minutes to unsuccessfully implement a complex MP4 comparison feature. The presenter concludes that despite its low price, MiniMax M2.5 is unreliable, slow, and appears to be 'benchmaxxed' (good on paper, poor in practice), and so does not receive the BridgeMind stamp of approval for professional vibe coding workflows.
