GPT-5.3-Codex and Opus 4.6 in 6min..

Transcript

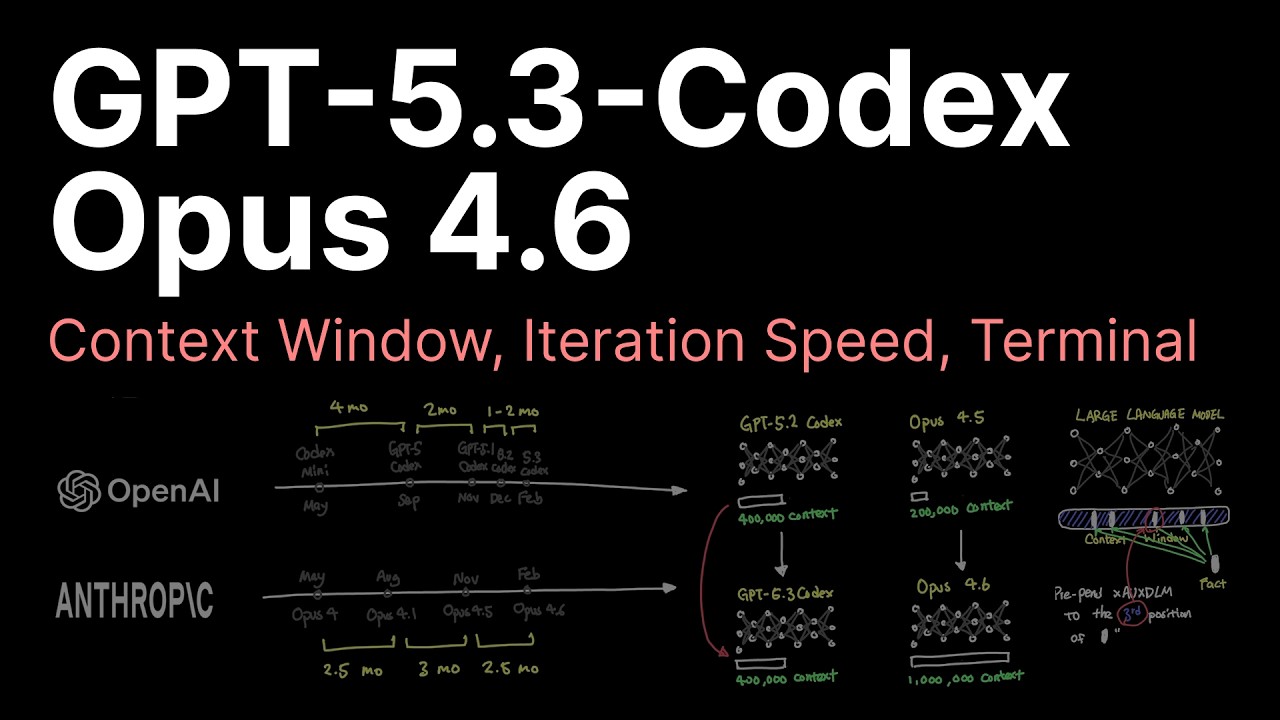

OpenAI didn't even give Anthropic more

than 10 minutes of glory by releasing

GPT-5.3-Codex just minutes after the

release of the Opus 4.6 model. And honestly,

when you factor in all the major

releases that we've had from just these

two companies in the past year, it can

be quite exhausting to keep track of all

of them. So today, we're going to get

straight to the heart of why this

matters and discuss the technical

breakthroughs. Welcome to Caleb Writes

Code, where every second counts. One of

the biggest gripes that developers have

with large language models is

the context window. For

example, Google's Gemini offers 1

million tokens and OpenAI's GPT offers 400,000

tokens, while Anthropic's previous model

only offered 200,000 tokens. And while

agentic applications like Claude Code do

help manage their own context pretty

efficiently, 200,000 tokens was

certainly lagging behind in comparison

to the rest of the pack. And you might

think scaling the context window should

be easy, but in reality, there are

drawbacks along the way. For example,

while Google's Gemini offers 1 million

tokens, as you can see, the accuracy of

the model's ability to actually retrieve

its context well decreases as more of the

context gets used up. Here, you're

seeing how Gemini 3 actually scored

around 25% in accuracy by the time it

hits the 1 million context window. And

you might think this is quite pathetic,

but the highest score at the time was

only 32.6% accuracy for 1 million

context, which is not that high when you

think about it. Well, until Anthropic

released Opus 4.6, which scored a whopping

76% on the 1 million context window. So,

not only was Anthropic able to jump

their context window from 200,000 to 1

million tokens in their model upgrade,

they also more than doubled the

performance in preventing what's called

context rot. Just to briefly touch on

this metric, which is called MRCR: it's

often referred to as a needle-in-a-haystack

metric, where you place repeated

facts embedded throughout the context

window and ask the model to correctly

identify the position of that fact

buried somewhere in that context window.
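As a rough illustration of the setup just described (this is not the actual MRCR implementation), the sketch below builds a long filler context, inserts several near-identical "needle" facts at random positions, and checks whether an answer picks out the requested one. The function names, the filler sentence, and the "secret code" fact template are all assumptions made for illustration.

```python
import random


def build_haystack(num_needles, filler_sentences, seed=0):
    """Return (context, needles) for a toy multi-needle retrieval test.

    needles is a list of (ordinal, code) pairs in reading order.
    The fact template and filler text are hypothetical.
    """
    rng = random.Random(seed)
    # Distinct insertion slots, sorted so ordinals follow reading order.
    positions = sorted(rng.sample(range(filler_sentences), num_needles))
    # Distinct four-digit codes so each needle has a unique answer.
    codes = rng.sample(range(1000, 10000), num_needles)

    needles = []
    parts = []
    next_needle = 0
    for i in range(filler_sentences):
        if next_needle < num_needles and i == positions[next_needle]:
            code = codes[next_needle]
            needles.append((next_needle + 1, code))
            parts.append(f"The secret code is {code}.")
            next_needle += 1
        parts.append("The sky was grey that day.")
    return " ".join(parts), needles


def is_correct(answer, needles, target_ordinal):
    """True if the answer is exactly the code of the requested needle."""
    expected = dict(needles)[target_ordinal]
    return answer.strip() == str(expected)


# Eight look-alike facts buried in 2,000 filler sentences.
context, needles = build_haystack(num_needles=8, filler_sentences=2000)
prompt = f"{context}\n\nWhat is the 5th secret code mentioned above?"
```

The model's reply to `prompt` would be passed to `is_correct(reply, needles, 5)`. Raising `num_needles` makes the facts easier to confuse, and raising `filler_sentences` stretches the context.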

And the eight-needle label here refers to the

difficulty of the problem, where eight

identical facts are embedded directly in

the context, making it that much harder

for the model to keep track. So scoring

76% accuracy while using the full 1 million

tokens of context is extremely impressive

for Anthropic. Let's quickly switch to

OpenAI's GPT-5.3-Codex release. While

OpenAI maintained its 400,000 context

window for this model, there were

certainly a few noteworthy achievements

that OpenAI made in this release. First

is a 25% increase in speed when it comes

to inference. One of the biggest gripes

that people had about the Codex model

in comparison to Opus was just how slow

the model actually was in real time.

Another impressive achievement that

OpenAI made was the model's ability to actually

navigate well in the terminal. We

know that ever since Claude Code was

released back in February 2025, and even

Codex CLI, which was released in April

2025, terminal-based agents have sort of

been the de facto place where these models

actually live and breathe. So being

able to actually navigate itself in that

environment is critical. One of the ways

to test this very thing is to have a

series of isolated environments where we

can just drop the model into these

isolated environments and give it an

objective. Then we can test the model to

see if it can solve its way out by

running the necessary terminal commands.
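The idea just described can be sketched in a few lines. This is only a toy under stated assumptions: it uses a throwaway temporary directory rather than a real isolated container, and `run_task`, `scripted_agent`, and `status_file_exists` are hypothetical names, with a hard-coded command list standing in for a model. It assumes a POSIX shell is available.

```python
import subprocess
import tempfile
from pathlib import Path


def run_task(agent, objective, check):
    """Run one task in a throwaway directory and report pass/fail.

    agent: hypothetical callable mapping an objective string to a list of
           shell commands (a real agent would loop over command output).
    check: callable taking the working directory and returning a bool.
    """
    with tempfile.TemporaryDirectory() as workdir:
        for command in agent(objective):
            # A temp directory is NOT real isolation; it only keeps the
            # sketch self-contained.
            subprocess.run(command, shell=True, cwd=workdir,
                           capture_output=True, text=True, timeout=30)
        return check(Path(workdir))


def scripted_agent(objective):
    """Stand-in for a model: always returns the same fixed commands."""
    return ["mkdir -p build", "echo ok > build/status.txt"]


def status_file_exists(workdir):
    """Objective check: build/status.txt exists and contains 'ok'."""
    status = workdir / "build" / "status.txt"
    return status.exists() and status.read_text().strip() == "ok"


passed = run_task(scripted_agent,
                  "Create build/status.txt containing 'ok'",
                  status_file_exists)
```

Scoring a model then amounts to running many such tasks and reporting the fraction that pass.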

This kind of benchmark is called

Terminal-Bench, where the second edition

had 89 of these isolated environments,

and models are dropped into Docker

containers to see if they're able to

solve varying tasks like building

repositories, setting up a server,

training LLMs, or just about anything

that people would actually use them for

in real life. OpenAI jumped from 64% with

their previous GPT-5.2-Codex to 77%

with their GPT-5.3-Codex model. Well,

while the official records show that they

actually scored 75%, the difference is

still quite huge in comparison to Opus 4.6.

OpenAI also said that they used

GPT-5.3-Codex to assist in building itself,

which certainly points one step toward

the singularity, where someday AI could

train itself faster than we can. And the

pace of innovation at that point will

spiral so fast that AI will outpace our

ability to contribute towards its

self-improvement. Let's now compare the

iteration speed of OpenAI and

Anthropic. While Anthropic's Opus line

has been iterating at a pace of 2 to 3

months per iteration, OpenAI has

been narrowing down its release cycle

from 4 months to 2 months and now to

1 to 2 months. I

think seeing model releases at

a frequency of every month or even every

other week is something that we could

see in the near future and the

competition at that point will get even

more fierce between all the frontier

labs. Pricing is also something where

OpenAI certainly has a huge advantage over

Anthropic: GPT-5.3-Codex is

offered at $1.75 per million input

tokens and $14 per million output

tokens, while Opus 4.6 is offered at $5

per million input tokens and $25 per

million output tokens. And the word on

the street is that Opus 4.6 is actually

very token hungry. So while most people

use ChatGPT's $20 plan to access the

Codex models with enough room for

credits, it's likely that with Opus 4.6,

especially given its large 1 million

context window, the same $20 plan from

Anthropic might not actually suffice

given their 5-hour limit window that

refreshes each time. What do you think

about these releases and the juxtaposition

of OpenAI's GPT-5.3-Codex against

Anthropic's Opus 4.6? Which do you

prefer more?
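To make the per-token prices quoted above concrete, here is a small cost comparison. The prices are the ones stated in the video. The workload (200,000 input tokens and 20,000 output tokens) is a made-up example, and the calculation ignores anything the video does not mention, such as caching discounts or differences in how many tokens each model actually uses for the same task.

```python
# USD per million tokens, as quoted in the video.
PRICES_PER_MILLION = {
    "GPT-5.3-Codex": {"input": 1.75, "output": 14.00},
    "Opus 4.6": {"input": 5.00, "output": 25.00},
}


def cost_usd(model, input_tokens, output_tokens):
    """Cost of one request at the quoted per-million-token prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 200k tokens in, 20k tokens out.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${cost_usd(model, 200_000, 20_000):.2f}")
# GPT-5.3-Codex: $0.63
# Opus 4.6: $1.50
```

On this example the Opus 4.6 request costs a bit under 2.4 times as much, before accounting for any difference in token usage between the two models.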

Interactive Summary

The video compares two recent large language model releases: Anthropic's Opus 4.6 and OpenAI's GPT-5.3-Codex. Opus 4.6 increased its context window from 200,000 to 1 million tokens and scored 76% on the eight-needle MRCR long-context retrieval test at 1 million tokens, a measure of resistance to 'context rot.' GPT-5.3-Codex, while keeping a 400,000-token context window, improved inference speed by 25% and made substantial gains in terminal navigation, scoring 77% on Terminal-Bench, up from 64% for GPT-5.2-Codex. The video also highlights OpenAI's use of GPT-5.3-Codex to help build itself, faster iteration from both companies, and a notable pricing advantage for OpenAI's model.
