DRAM Shortage Crisis explained..

Transcript

Samsung is rumored to raise their memory

price across all memory products by 80%.

And if you look at the broad market

outside of Samsung, it's not just the

consumer and server grade DRAM, but the

contagion spreads to nan storage as well

when it comes to pricing. For example,

if you look at this Corsair Vengeance

RAM, it easily tripled in price in the

past 4 months alone. And solidstate

drives like the Samsung EVO also had a

huge price jump recently. But why? Why

is this happening all over the market? I

think the first culprit that most people

look at is AI. If you follow the huge

spike in Nvidia's revenue, mostly driven

by data center grade graphics cards, it

may lead you to say this is why DRM

prices are going up like crazy. But at a

closer look, DM prices have mostly been

going up at around October 2025, while

demand for AI chips have been going on

for years, dating all the way back to

2024. So, how does all of this make

sense? Today, we're going to look at the

DRM shortage crisis that's happening

globally and also understand what DRM

really is in the first place and look at

the broad market impact that it's

causing. Welcome to Kobe's code where

every second counts. Quick shout out to

Zo Computer. More on them later. Let's

first look at the impact that AI is

having on the memory industry. We know

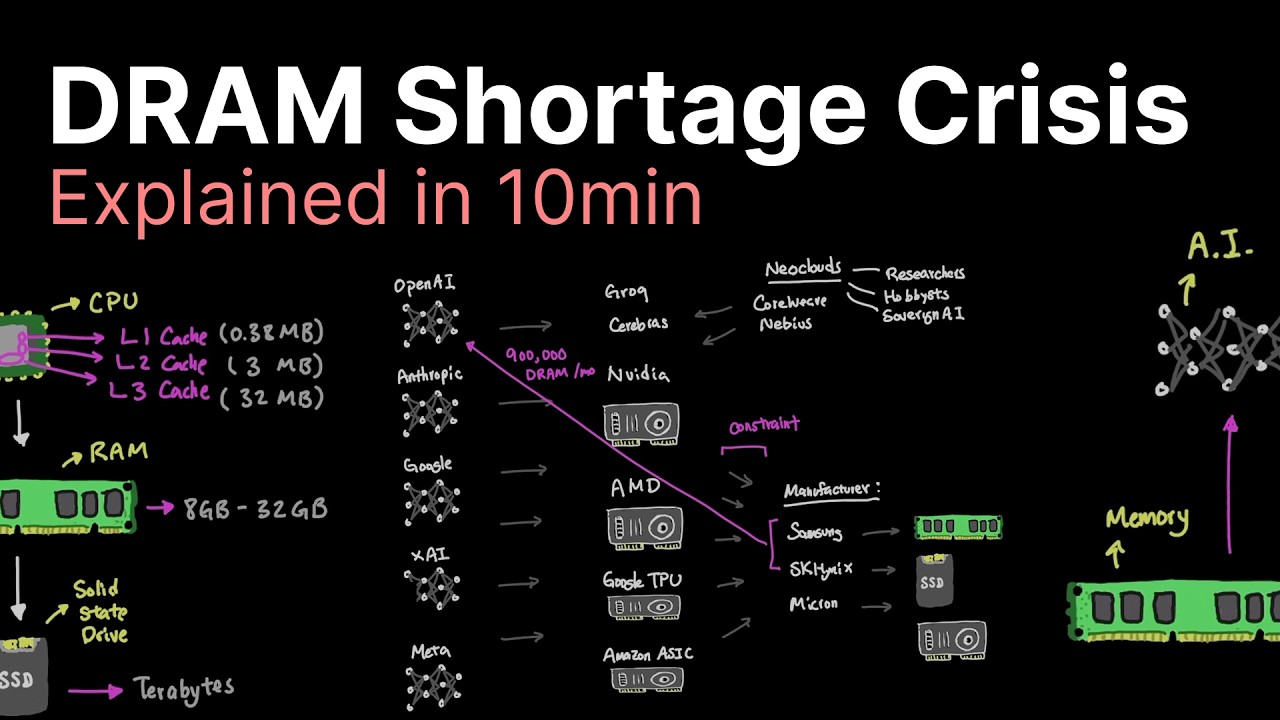

that modern computers have a hierarchy

of memory starting from the CPU that has

L1, L2, and L3 cache. For example, my

computer at home uses the AMD Ryzen 5

that has 384 kilobyt of L1, 3 mgabyt of

L2 cache, and 32 megabytes of L3 cache.

Physically, the real estate these caches take up on the chip would look something like this. But we all know that we can't just run an AI model directly on my CPU. Modern large language models like DeepSeek R1, at 671 billion parameters, need far more memory than my CPU can provide from L1 to L3 cache. The DeepSeek R1 model, for example, needs at least 130 GB of memory to run even at a low 1.58-bit quantization. And since my CPU can't offer that kind of memory, we have to work our way down the memory hierarchy until we find a tier that can. So the next tier we look at is RAM. Typical consumer-grade hardware has somewhere around 8 to 32 GB of RAM, which is still nowhere close to being able to run our DeepSeek R1 model. So we have to go even further down our memory hierarchy.
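
The walk down the hierarchy above can be sketched as a small capacity check: estimate the weight footprint as parameters × bits ÷ 8 and return the first tier large enough to hold it. This is a minimal sketch under stated assumptions: it counts weights only (no KV cache or activations), treats every parameter as stored at exactly 1.58 bits (real low-bit quantizations mix bit widths), uses decimal units, and the 2 TB SSD capacity and the function names are hypothetical.

```python
from typing import Optional

# Hypothetical sketch: estimate how much memory an LLM's weights need and find
# the first tier of the memory hierarchy large enough to hold them.
# Assumptions: weights only (no KV cache or activations), every parameter stored
# at the same bit width, decimal units (1 GB = 1e9 bytes).


def weight_footprint_gb(num_params: float, bits_per_param: float) -> float:
    """Size of the model weights in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


# (tier name, capacity in GB), ordered from closest to the CPU to farthest.
# Cache sizes follow the Ryzen 5 example; RAM uses the top of the 8-32 GB
# consumer range; the 2 TB SSD is a hypothetical drive.
MEMORY_TIERS = [
    ("L1 cache", 384e3 / 1e9),
    ("L2 cache", 3e6 / 1e9),
    ("L3 cache", 32e6 / 1e9),
    ("RAM", 32.0),
    ("SSD", 2000.0),
]


def first_tier_that_fits(size_gb: float) -> Optional[str]:
    """Return the closest tier whose capacity can hold size_gb, or None."""
    for name, capacity_gb in MEMORY_TIERS:
        if size_gb <= capacity_gb:
            return name
    return None


if __name__ == "__main__":
    size = weight_footprint_gb(671e9, 1.58)
    print(f"DeepSeek R1 weights at 1.58 bits/param: ~{size:.1f} GB")  # ~132.5 GB
    print("First tier that fits:", first_tier_that_fits(size))        # SSD
```

The ~132.5 GB result lines up with the "at least 130 GB" figure quoted in the video, and it lands on the SSD tier because it overshoots the 32 GB RAM ceiling.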

Solid-state drives offer a huge amount of space, easily in the terabytes, so we could load our 130 GB model here, but the throughput is often slow. As you can see, depending on which tier you load the model into, you're limited by the upper bound of memory bandwidth between each tier, and the further down the hierarchy you go from the CPU, the slower the communication between them becomes.
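
Why bandwidth sets the ceiling can be shown with a back-of-the-envelope bound: when generating one token at a time, every active weight has to be streamed from memory once per token, so tokens per second can be at most bandwidth ÷ bytes read per token. This sketch assumes compute, KV-cache traffic, and overheads are free, and it assumes DeepSeek R1 activates roughly 37B of its 671B parameters per token (it is a mixture-of-experts model). The 7 GB/s NVMe figure is hypothetical; the ~60 GB/s RAM, 736 GB/s, and ~3.3 TB/s figures come from the video.

```python
# Upper bound on decode speed when generation is memory-bandwidth-bound:
# each new token requires streaming the active weights from memory once,
# so tokens/s <= bandwidth / bytes_read_per_token.
# Assumptions: compute, KV-cache reads and overheads are ignored, and
# DeepSeek R1 activates roughly 37e9 parameters per token.


def max_tokens_per_second(bandwidth_gb_s: float, active_params: float, bits_per_param: float) -> float:
    bytes_per_token = active_params * bits_per_param / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


BANDWIDTHS_GB_S = {
    "NVMe SSD (hypothetical ~7 GB/s)": 7.0,
    "Dual-channel RAM (~60 GB/s)": 60.0,
    "4080 Super GDDR6X (736 GB/s)": 736.0,
    "H100 HBM3 (~3,300 GB/s)": 3300.0,
}

if __name__ == "__main__":
    for tier, bw in BANDWIDTHS_GB_S.items():
        tps = max_tokens_per_second(bw, active_params=37e9, bits_per_param=1.58)
        print(f"{tier:35s} <= {tps:7.1f} tokens/s")
```

These are ceilings, not benchmarks: the RAM row comes out around 8 tokens per second, consistent with the "lucky to get more than 10 tokens per second" remark, and the GPU rows only apply if the whole model actually fits in that card's memory.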

But this kind of setup is rather unorthodox, since you'll be lucky to get more than 10 tokens per second like this. That's why most AI models run directly on graphics cards instead of the CPU, RAM, and SSD stack. And here's why. Graphics cards that you see in servers, like the Nvidia H100, offer speeds up to 819 gigabytes per second for a single memory stack. This kind of throughput is insanely fast compared to our regular computer stack. For example, one of my graphics cards at home is the Nvidia 4080 Super, which uses GDDR6X running at 23 Gbps per pin on a 256-bit memory bus. This gives me a throughput of around 736 GB per second. My consumer-grade 4080 Super uses GDDR6X memory, where the memory chips are placed around the GPU on the PCB. The Nvidia H100, on the other hand, uses what's called HBM, or high-bandwidth memory, which stacks the memory dies vertically. That means the 819 GB per second figure for the H100 is per stack; across all of its stacks, and factoring in overheads and other bottlenecks, the total is closer to 3.3 terabytes per second, which is still huge. So immediately we went from 50 to 60 gigabytes per second in our CPU/RAM configuration to upwards of 3 terabytes per second of throughput. And since neural-network-based applications rely heavily on massive matrix multiplications, you can see why memory configurations like this are the buzz of the AI industry.
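
The bandwidth figures above all come from the same formula: per-pin data rate × bus width ÷ 8 bits per byte. The sketch below reproduces the 4080 Super's 736 GB/s and the 819.2 GB/s of one HBM3 stack at its 6.4 Gbps headline rate. The H100 line is an assumption, not a figure from the video: it uses the commonly cited H100 SXM configuration of roughly 5.23 Gbps across a 5,120-bit interface (five active stacks), which lands near the ~3.3 TB/s quoted.

```python
# Peak theoretical bandwidth of a memory interface:
#   bandwidth (GB/s) = per-pin data rate (Gbit/s) * bus width (bits) / 8 bits per byte
# Real-world throughput is lower because of protocol overheads and refresh.


def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    # 4080 Super: GDDR6X at 23 Gbit/s per pin on a 256-bit bus.
    print(f"4080 Super GDDR6X: {peak_bandwidth_gb_s(23, 256):.1f} GB/s")       # 736.0
    # One HBM3 stack at its 6.4 Gbit/s headline rate on a 1,024-bit interface.
    print(f"Single HBM3 stack: {peak_bandwidth_gb_s(6.4, 1024):.1f} GB/s")     # 819.2
    # Assumed H100 SXM configuration: ~5.23 Gbit/s across a 5,120-bit interface.
    print(f"H100 SXM (assumed): {peak_bandwidth_gb_s(5.23, 5120):.1f} GB/s")  # 3347.2
```

The comparison makes the design difference concrete: HBM wins not by running each pin faster than GDDR6X (it runs slower) but by exposing a far wider bus, which stacking the dies next to the GPU makes practical.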

And this kind of difference that we see

in performance will dictate the user's

experience that can go from few tokens

per second to use deep 1 to upwards of

maybe a 100 tokens per second depending

on the graphics card. So now that we

outlined the broad ecosystem, the next

question is why? Why are pricing for

consumer grade RAM and NAND memory

affected? if AI data centers are

spending hundreds of billions of dollars

in the HBM based memory instead. Let's

look at the supply chain of the memory

industry. But first, let's talk about Zo

computer. Here's the problem. You have

files stored across Gmail, Google Drive,

notion, notebook, LM, and whatever other

applications you use. But there's no

good way to really unite them into one

place. Zo is a private cloud computer

that you can own which means you can

store all your data in the cloud and own

that instance yourself and you can also

leverage AI agent on top of that so that

you can use AI to manage your files

build automations build apps and store

your code there. Unlike traditional

applications that lock your data away,

Zo gives you a persistent workspace

where everything is stored which means

you have control over your own apps and

files. And because it's a cloud

computer, you can access it anywhere,

including sending and receiving text

messages, which is one of my favorite

features. And there are other features

like email and of course the ability to

vibe code directly on the machine and

have customuilt personas so that you can

make your computer more personalized.

Try out Zo today and their always on

computer that you can use to simplify

your computer needs. Link in the

description below. Consumers like

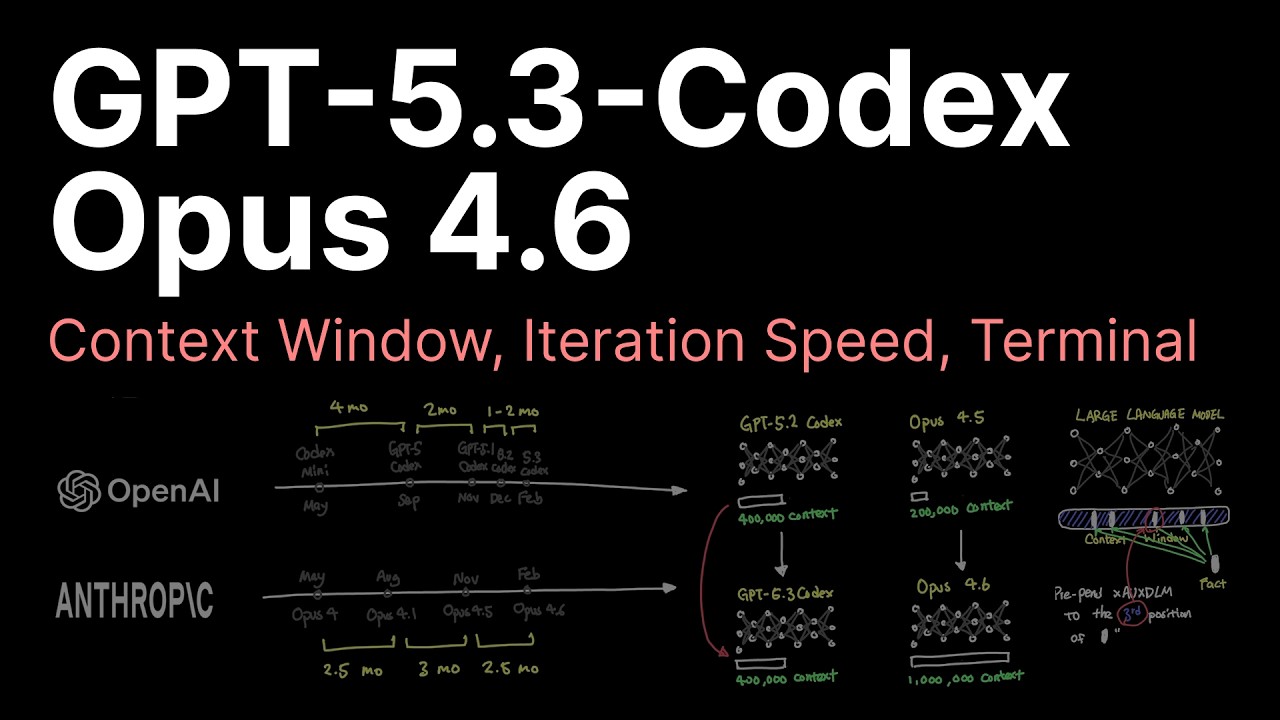

OpenAI, Enthropic, Google, XAI, and Meta

create huge pressure on the supply

chain. So much that Nvidia and AMD have

seen huge rise in revenue in the past

few years. And we also have Google's TPU

that's also in the mix as well as

Amazon's own proprietary chip like their

application specific integrated circuit

for inference. And let's not forget

Grock and Cerever. As you can see, this

level of pressure that AI puts on the

supply chain points directly from

graphics cards to memory requirements.

We just saw how big a role interconnect bandwidth plays in user experience when it comes to serving a model. So while being compute-bound can largely be overcome by throwing more graphics cards at the problem, serving the model for faster inference is more bandwidth-bound. And frontier labs aren't the only players putting huge demand on memory. We also have neoclouds, like CoreWeave and Nebius, serving auxiliary demand from frontier labs, researchers, hobbyists, and sovereign clouds, as well as the emerging neoclouds listed here in the SemiAnalysis diagram.

All of this puts a strain on the manufacturing and production of memory in the supply chain. Memory manufacturers like Samsung, SK Hynix, Micron, Western Digital, and more all serve various kinds of memory. That means serving this kind of demand from AI requires them to either expand their operations, shift their operations toward AI-focused memory, or both. And we're actually seeing both from memory manufacturers like Samsung, SK Hynix, and Micron. But the problem with expansion is that fabs take anywhere between two and five years to build, which creates a huge delay in manufacturing, not to mention the amount of investment required. And despite this constraint, OpenAI secured up to 900,000 DRAM wafers per month from Samsung and SK Hynix to meet its target of constructing the Stargate facility. Some project that this could be around 40% of global memory output. In other words, OpenAI is buying up nearly half of the world's DRAM production, which makes the shortage even worse. So, put together all the demand spikes from frontier labs; hyperscalers like Microsoft, Oracle, and Amazon; inference chip makers like Groq and Cerebras; neoclouds; and the side deals being made by OpenAI.
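
The compute-bound versus bandwidth-bound distinction made earlier in this section can be illustrated with a roofline-style estimate (a standard performance model, swapped in here for illustration): attainable throughput is the smaller of peak compute and arithmetic intensity × memory bandwidth. The peak figures and the 8192 × 8192 weight matrix below are hypothetical round numbers, not vendor specs. Generating one token multiplies a single row of activations by the weights, which does about one FLOP per byte read and so leaves most of the compute idle, while processing a long prompt reuses each weight many times and becomes compute-bound.

```python
# Roofline-style estimate (hypothetical round numbers, not vendor specs):
# a GPU can only reach peak FLOP/s when a kernel does enough arithmetic per byte
# it reads; below the "ridge point" the kernel is limited by memory bandwidth.

PEAK_FLOPS = 1.0e15  # assumed ~1,000 TFLOP/s of dense 16-bit compute
PEAK_BW = 3.3e12     # assumed ~3.3 TB/s of HBM bandwidth


def matmul_intensity(m: int, n: int, k: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], each matrix moved once."""
    flops = 2 * m * n * k
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


def attainable_flops(intensity: float, peak_flops: float = PEAK_FLOPS, peak_bw: float = PEAK_BW) -> float:
    """Roofline: performance is capped by compute or by bandwidth * intensity."""
    return min(peak_flops, intensity * peak_bw)


def is_bandwidth_bound(intensity: float, peak_flops: float = PEAK_FLOPS, peak_bw: float = PEAK_BW) -> bool:
    """True when intensity sits below the ridge point peak_flops / peak_bw."""
    return intensity < peak_flops / peak_bw


if __name__ == "__main__":
    # Decoding one token: a single activation row times an 8192 x 8192 weight matrix.
    decode = matmul_intensity(1, 8192, 8192)
    # Processing a 4,096-token prompt (or a large batch) against the same weights.
    prefill = matmul_intensity(4096, 8192, 8192)
    for label, ai in [("decode, batch 1", decode), ("prefill, 4096 tokens", prefill)]:
        share = attainable_flops(ai) / PEAK_FLOPS
        print(f"{label:22s} intensity={ai:8.1f} FLOP/B  "
              f"bandwidth-bound={is_bandwidth_bound(ai)}  peak used={share:.1%}")
```

Under these assumptions single-token decoding uses well under 1% of peak compute, which is why adding more compute does little for serving speed while faster, wider memory helps directly.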

The shortage created by all of this is causing prices on consumer-grade memory to spike as well, across desktops, laptops, smartphones, storage, and RAM: well, anything that uses memory of some type. Impacts like this are why we're seeing Samsung recently raise pricing by 80% across all memory products.

Another angle worth mentioning is the memory industry itself, because it doesn't have a great track record when it comes to price-fixing scandals. Back in 2016, the Department of Justice charged Samsung executives, who pleaded guilty and each paid $4 million for price fixing. Many other allegations have been made throughout its history, given how few companies actually supply such large demand. The memory industry as a whole is also known to be more cyclical than others. Going back to 2017, we had a boom cycle largely driven by falling inventory and a lack of production from manufacturers. And of course, coming out of 2020, we had the supply chain shortage, which ended in overproduction and massive inventory that led prices to drop considerably. Now inventory is shrinking again, which, combined with AI demand, explains the more recent spike in DRAM prices that started around October 2025. As you can see, even though we've had many DRAM super cycles in the past, what we're experiencing now is somewhat different. SK Hynix is now making 10% less NAND memory, likely to ramp up its HBM production instead.
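
To put the price moves mentioned above into numbers (Samsung's rumored 80% increase and the Corsair kit that tripled over four months), a small compounding sketch helps: an 80% increase multiplies a price by 1.8, and tripling in four months corresponds to a constant monthly rate of 3^(1/4) − 1. The $100 starting price is hypothetical; only the percentages and multiples come from the video.

```python
# Simple price arithmetic for the increases mentioned in the video.
# The $100 starting price is hypothetical; only the percentages/multiples come from the text.


def price_after_increase(price: float, pct_increase: float) -> float:
    """Price after a one-time percentage increase."""
    return price * (1 + pct_increase / 100)


def implied_monthly_growth(multiple: float, months: int) -> float:
    """Constant monthly growth rate that compounds to `multiple` over `months`."""
    return multiple ** (1 / months) - 1


if __name__ == "__main__":
    print(f"$100 after an 80% increase: ${price_after_increase(100, 80):.2f}")  # $180.00
    rate = implied_monthly_growth(3, 4)
    print(f"Tripling in 4 months is about {rate:.1%} per month")               # 31.6%
```

A kit that triples in four months is therefore rising by roughly a third every month, which is steeper than a single 80% step spread over the same period.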

Micron is also taking a conservative stance on production, and Kioxia is reducing its production from 4.8 million last year to 4.7 million. All of this seems to point to an extended period before we see normal DRAM pricing again.

China, on the other hand, is still 3 to 5 years behind the rest in terms of technology. One of its biggest fabs, CXMT, is targeting mass production of RAM at $138, which is decently cheap at face value, but its production line is still on DDR4. However, CXMT is targeting an IPO at $4.2 billion soon, which could fuel its growth and innovation.

What do you think about the DRAM shortage? Do you think DRAM prices will soon return to normal, or that they will continue to go up even further?

Summary

The video explains the global DRAM shortage and the significant price hikes across all memory products, including consumer RAM and SSDs. While AI demand is a primary driver, the timing of price increases (Oct 2025) vs. AI demand (years) shows a nuanced connection. Large AI models require immense amounts of high-bandwidth memory (HBM) primarily found in specialized GPUs, not traditional CPU/RAM/SSD setups. The colossal demand from major AI labs, hyperscalers, and emerging cloud providers, intensified by OpenAI securing an estimated 40% of global DRAM output for its Stargate facility, is straining the supply chain. Memory manufacturers are expanding or shifting production to HBM, but fab construction takes years. This, coupled with the memory industry's cyclical nature and current inventory deceleration, results in a substantial shortage and higher prices for all memory types, with no immediate return to normal expected.