NVIDIA's $660 Billion Problem Just Got Worse

Transcript

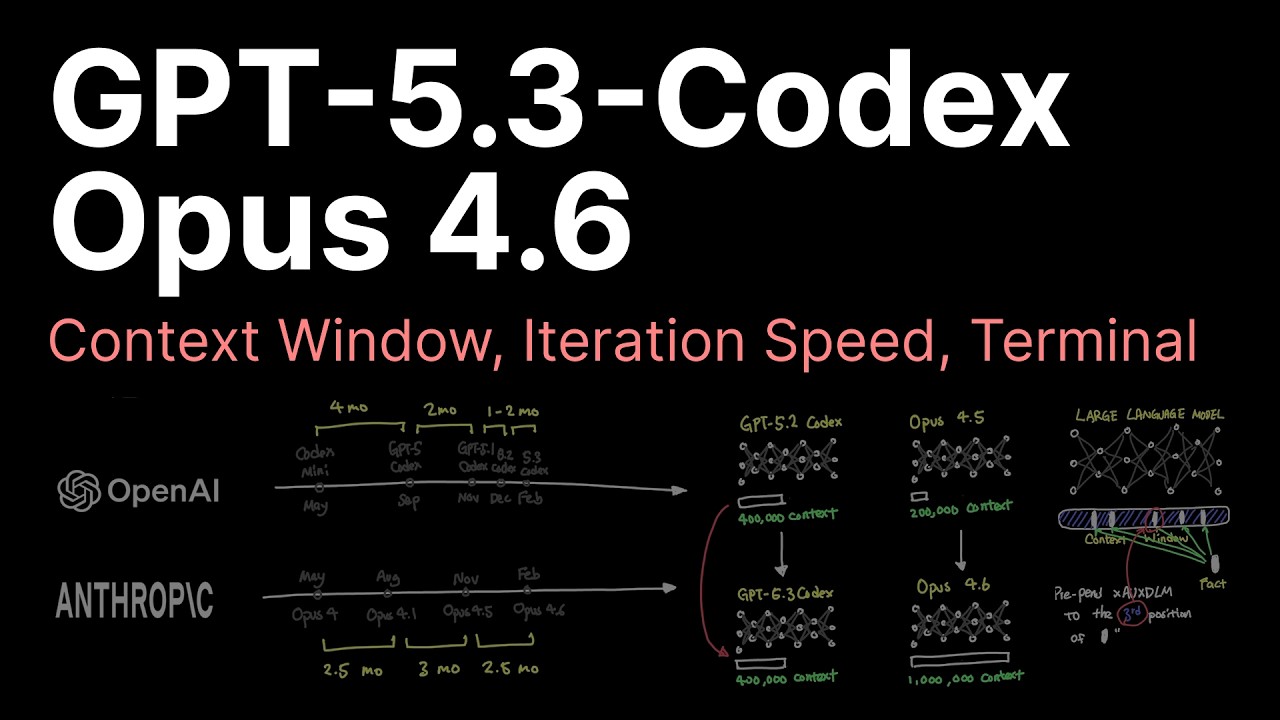

OpenAI just launched a new model that,

get this, does not run on Nvidia

hardware.

[music]

Finance bros and PR dudes covering this

are going to miss this because you need

to understand the underlying technical

architecture to understand why this is

massively significant for Nvidia's

valuation, especially with their

earnings coming on February 25th. If you

own Nvidia stock or if you're on the

sidelines waiting to buy, you need to

pay attention to this. You need to know

this. I'm going to break it down for you

in this video. By the way, you like the

hat? It's from abandoned wearer. Link

down there in the description. So,

here's what went down. OpenAI, in a

continued effort to get worse SEO for

their model names, releases GPT-5.3

Codex Spark. It is a lighter version of

their existing coding model called

Codex. What's different about this one

is it's designed for real-time coding.

If you have ChatGPT Pro, you can use it

right now through their app on the

Codex tab. You can use it in the Codex

CLI and you'll also have access in

the VS Code plugin. The headline number

is 1,000 tokens per second, which might

not mean anything to you, but tokens per

second is effectively how fast a model

can produce a response. To put that in

relative terms for you: any other

frontier model, whether that be any of

the other Codex models, ChatGPT,

Claude, etc., etc., they usually do

about 30 to 100 tokens per second. So,

even the fastest Frontier models on the

market that you have access to right

now, this is 10 times as fast. And

here's the part that should make

Jensen really nervous. This does not run

on Nvidia silicon. It runs on the

Cerebras Wafer Scale Engine 3. So not

H100s but a different manufacturer

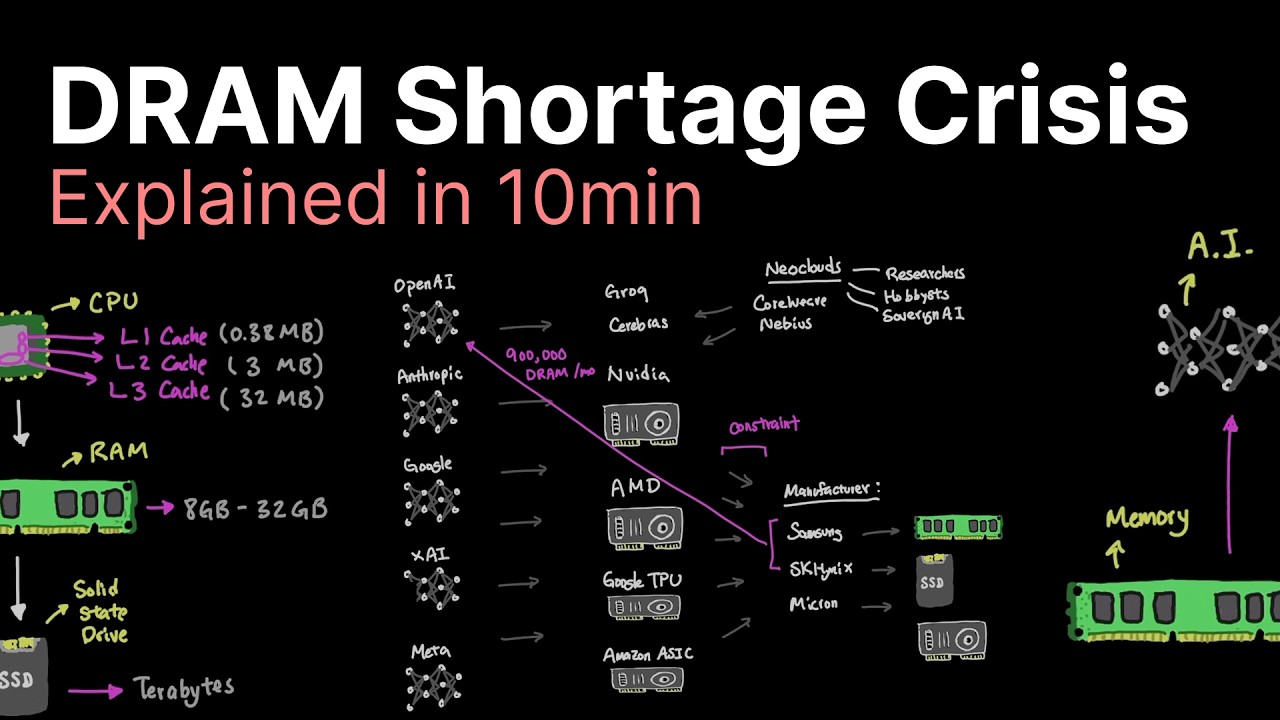

entirely. And the WSE-3 is a massive feat

of engineering. It's truly impressive.

It is a thick chip. It is big. It's

actually the size of a typical silicon

wafer. 46,000 square mm to be precise. And for

comparison, Nvidia's H100 is about 814

square mm. The WSE-3 by the numbers has

four trillion transistors, 900,000 AI

cores, and 44 GB of on-chip SRAM. That

is an insane amount crammed onto one

chip, even if it is big. The memory

bandwidth out of those numbers is 21

petabytes per second, which is

extraordinary. Again, if that doesn't

make any sense to you, let's compare it

to an H100, which only does about 3

terabytes per second. So, we're talking

7,000 times more bandwidth than Nvidia's

best option. This partnership between

OpenAI and Cerebras was announced in

January. This is the first real product

to emerge out of that partnership. And

OpenAI's Sammy Boy was real clear about

the strategy. They said, quote,

"Diversifying compute infrastructure

beyond GPUs for latency sensitive

workloads." It's a direct quote. You can

read between the lines there, but if you

need it spelled out, it's basically like

Sam and Jensen are in a romantic

relationship. Jensen goes out to the

grocery store, hears a weird knocking in

the bedroom as he walks in. And he walks

in and Sam is in bed with somebody else.

It's Cerebras. And he sits up and

instead of saying, "It's not what it

looks like," he says, "It's exactly what

it looks like. We're diversifying." Now,

if this was just one chip deal, one

company making this, I'd say, you know,

probably some PR or publicity stunt,

probably inflated numbers, doesn't

really matter, but all of the

hyperscalers are doing this, or they're

at least attempting it. Google has been

running inference on their own TPUs for

years now. They're on version six now.

Amazon built the regrettably named

Trainium. They're on Trainium 3. I'm

told that the first two movies weren't

very interesting. Meta built MTIA for

their own internal workloads, which

nobody has ever heard of. Microsoft is

building Maia. Nobody's ever heard of

it. But my point is, every hyperscaler

is at least trying to get off of

Nvidia's monopoly. And you might say,

"Dr. J, it's publicity stunt. It's a

publicity stunt. You don't know what

you're talking about." And that's not

true. The best numbers we have for this

are that these hyperscalers are running

about 10 to 15% of their current

workloads on custom silicon. Whether

that's developed in house in the case of

Google or with somebody like Cerebras

for OpenAI. And it doesn't sound like a

lot. It's just 10%. You can kind of

shrug it off, but remember in these

videos for AI infrastructure, we're

talking about this year a proposed spend

from the hyperscalers alone of about

$660 to $690 billion.
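
A rough back-of-the-envelope sketch of that scale, assuming (and this is only an assumption) that dollars track the 10 to 15% workload share one-to-one. The function and variable names are illustrative; the inputs are just the figures quoted in the video.

```python
# Figures quoted in the video: proposed hyperscaler AI infrastructure spend
# this year, and the share of workloads said to run on custom silicon.
SPEND_RANGE_BILLION = (660, 690)
CUSTOM_SHARE_RANGE = (0.10, 0.15)


def custom_silicon_spend(spend_billion, share):
    """Spend in billions of dollars, ASSUMING spend tracks workload share one-to-one."""
    return spend_billion * share


# Low end: smallest spend with smallest share. High end: largest with largest.
low = custom_silicon_spend(SPEND_RANGE_BILLION[0], CUSTOM_SHARE_RANGE[0])
high = custom_silicon_spend(SPEND_RANGE_BILLION[1], CUSTOM_SHARE_RANGE[1])

print(f"Implied custom-silicon spend: ${low:.1f}B to ${high:.1f}B")
```

Under that assumption the range works out to roughly $66 billion to $103.5 billion a year.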

So yes, 10% of that is a significant

amount of money. That's empire building

money. So Nvidia still dominates about

90% of the AI accelerator market, but

the dominance doesn't matter. It's: is

that number moving up or is it moving

down? That's what Wall Street cares

about. Are you signaling strength or are

you beginning to lose? And they're

beginning to lose out. And part of that

is model dynamics. Again, you're not

going to understand this if you are not

deep into the technicals of it. But

model development and training has

slowed down a little bit. The frontier

models are getting better every year,

but we're not seeing the massive gains

like we saw with this last generation

compared to the previous

generation. And so when you're training

a model, when you're building a model

like Opus 4.6, you need these big

heavy lifting GPU clusters and Nvidia

has a lock on that market and

specifically their CUDA infrastructure

is the best in class for this. It

cannot be rivaled currently. What we are

beginning to see though is this shift

from an emphasis on training these

models to doing more inference at least

in a percentage breakdown. And I can

prove this very easily. You watching,

how many times have you trained an AI

model? probably never. I'm pretty

technical and I'm pretty into this stuff

and I have never trained a full-on AI

model from the ground up. I've never

I've never done it and I'm very very

interested in this stuff. I spent a lot

of time on it. But I'll tell you what I

do use and you probably use. You probably

use Claude or ChatGPT. You probably

Google search and sometimes you use that

little AI summary. All of that is what's

called inference when you're actually

using the model to get a result out of

it. And so we've seen percentage-wise a

big shift from yes, the labs are still

training models, but there's a good

approach for training. People are doing

things largely the same way. There are

discoveries being made, but a huge

percentage-wise effort is now going

towards inference because everybody is

using these models. Very few people are

training them. So Nvidia's moat, which

was training, especially with those CUDA

drivers, is starting to diminish, and tech

like the WSE-3 is starting to dominate

in the inference space. So it begs the

question why does Nvidia have so much

market share if most of the use of AI

chips is now inference and they don't do

inference as well as other companies.

They do training very well. The numbers

are bold here and we'll talk about that

in a minute. But Cerebras claims

20 times faster inference than GPU

clusters and seven times better

performance per watt. Let's break that

down. Let's first of all, let's assume

that those are PR numbers, right? Those

are PR numbers. Those aren't real

numbers. And so you got to divide them

at least, let's say, let's be

generous. Let's cut them in half. So

we'll say 10x faster and 3.5x better

performance per watt. The faster is

just like right on the nose of it. I

mean, it's like, yes, it would be great

if these models responded faster. I

think everyone could agree that's the

biggest use case issue with a lot of

these models is they take forever to

respond. If you're going to use an Opus

4.6, it's correct every time, but it

takes a long time to get back to you.

And then the performance per watt, I

mean, you environmentalists, you might

be getting stoked about this, and I am

too. I know that AI is pretty bad for

the environment, but even from a

business perspective, it's incentivized

to build more energy-efficient chips

because if you are in the data center

building and purveying business, you're

paying a boatload in electricity

costs to power your data center. And so

if you can get those gains by using

other chips and the chips can do it

faster, man, that's an obvious choice.
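
To make that haircut concrete, here is a small sketch that halves the claimed 20x and 7x figures and converts the performance-per-watt gain into relative energy for the same work. It assumes energy scales as the inverse of performance per watt, which is a simplification, and the names are illustrative rather than anything from Cerebras.

```python
CLAIMED_SPEEDUP = 20.0         # vendor claim quoted in the video
CLAIMED_PERF_PER_WATT = 7.0    # vendor claim quoted in the video
HAIRCUT = 0.5                  # the speaker's "cut the PR numbers in half" discount


def discounted(claim, haircut=HAIRCUT):
    """Apply a flat discount to a vendor-claimed multiplier."""
    return claim * haircut


def relative_energy(perf_per_watt_gain):
    """Energy for the same work relative to baseline.

    ASSUMPTION: energy scales as 1 / (performance per watt).
    """
    return 1.0 / perf_per_watt_gain


speedup = discounted(CLAIMED_SPEEDUP)
perf_per_watt = discounted(CLAIMED_PERF_PER_WATT)
energy_fraction = relative_energy(perf_per_watt)

print(f"Discounted speedup: {speedup:g}x")
print(f"Discounted performance per watt: {perf_per_watt:g}x")
print(f"Energy for the same work: {energy_fraction:.1%} of baseline")
```

With the halved figure of 3.5x, the same inference work would take a bit under 30% of the baseline energy under that assumption.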

It's kind of like when the Tesla came

out originally and the 0 to 60 time, you

know, if you were parked at a light next

to a Corvette, some kind of a boomer

hot rod car, it could just smoke it off

the line and it probably cost half the

price as the Corvette. It's that kind of

big paradigm shift that we're looking

at. That bull case for Nvidia has always

been their moat. And right now, the moat

is starting to show some cracks in it.

It's not like Nvidia was doing really,

really well before this. They had

something kind of nefarious in their

latest quarterly earnings that they try

to cover up. And if you read the

earnings, it's really clever how they

kind of couch it and try to push this

aside like, no, it's totally not a

problem. And that's the China problem.

It's an $8 billion problem to be

precise. And the issue with that is in

April 2025, the Trump administration

banned their export of H20 chips to

China. They partially reversed that at

some point over the summer. There's some

insider baseball going on there, but it

seems like they are going to be able to

ship those H20 chips to China at a much

reduced capacity. So, that's taken a

huge chunk out of their sales. And

that's not all. It's not just sales on

the nose. Like, we could turn around and

sell those H20s to someone else. The

H20s were developed specifically to be

neutered and underpowered enough that

they would meet the export regulation

guidance. So, if you're an American data

center looking to buy some Nvidia chips,

it's like, "No, I don't want the H20

chips, like they're super underpowered.

They're deliberately neutered because

they were going to go to China." So,

they actually have a lot of these

sitting in inventory. There's

specifically they're looking at a $4.5

billion inventory charge. They had

buyers lined up from Alibaba,

Tencent, ByteDance. They placed

orders worth over $16 billion and all of

that, or at least most of it, went up in

smoke. So the total rev impact for that

across 2025 and 2026 like I mentioned is

about 8 billion. That's not a small

number for a company even if they are

doing 50 billion quarters. So that

future revenue outlook for Chinese

business for Nvidia? Not looking

good. So on the inference side you got

people starting to develop their own

chips. On the China side you got people

starting to develop their own chips. So

going into the Feb 25 earnings, man, I would

hate to be Jensen right now. Those

earnings will be on Feb 25. The

conference call is going to start at

2:00 p.m. Pacific time. Let me tell you

the four signals I'm watching out for in

that call going into it. First are the

headline numbers versus what Wall Street

is guiding. Literally, if they do not

beat, if they miss, nothing else in the

call matters and can save them. The

stock is going to tank in a bad way.

Nvidia always beats. This is one of the

most reliable things. It's like so

reliable. You can set your watch to it.

They beat. That's what they do. They're

Nvidia. And so if they don't beat, I

mean, right off the bat, everybody's

going to tune out. Don't care what

you're saying. Like, all I hear is

you're losing. You're losing. And I

don't want to invest money in you and I

want my money back. Second is the

guidance. Jensen is in a horrifically

bad position here because he has two

options, right? He can say that our

guidance is going to be lower. He can

lower the guidance compared to

expectations and the stock is going to

dip because it's like you're not

confident that your company is going to

keep growing at least at the same rate

it is. Or he can guide that it's going

to grow in line with historical

performance in line with what the

analysts are guiding. And even that

might not be enough given where the

stock is trading at. Third, we got to

hear about inference diversification.

And I'll let you in on a

little secret on this. If Jensen gets

hit with a question or proactively

mentions inference diversification, and

when I say inference diversification, I

mean basically, what are you doing to

address the fact that a bunch of these

other companies are building their own

custom silicon to compete with you and

they're not going to need you for as

much inference work anymore? If he gives

some answer about how their proprietary

CUDA is the best, it's unassailable,

they're sunk. They are so sunk. If he

mentions CUDA in that response in a

favorable way for inference,

man, wrecked. Wrecked. He's just trying

to block and tackle and he's banking on

the fact that the analysts are too dumb,

which might work out actually, to see

past what he's saying. The only correct

answer he can give here is about

Blackwell specific performance. And when

I say give an answer about Blackwell's

specific performance, if he says

anything like might be, could be,

should, we expect, any of those weasel

words, he's also in trouble. He's also

in trouble. The only correct answer he

can give is concrete data on Blackwell

performance. Concrete, real data on how

this is going to save them on the

inference front. And if that doesn't

manifest, you know that their

development isn't far enough along to

mount a serious threat to these new

contenders. Fourth, watch the data

center revenue breakdown. Nvidia is

classically shady about how much of

their product is going towards inference

versus training. But if they mention

that inference revenue is growing slower

than the overall market for inference,

which is my hypothesis, this would all

but confirm that the alternatives are

winning. Stock has been trading around

188 for 6 months. Technicals point to

maybe 195 to 200 on a beat. But if any

of these risk factors that I just

mentioned hit, the downside is a lot

more than $12. I can tell you that right

now. Look, I'm not saying to sell your

Nvidia, but what I am saying is that the

narrative is changing and they haven't

addressed this properly. And I don't

think they can address it. The monopoly

narrative that got them from $15 to $188

is starting to show some real cracks.

OpenAI running their new model on

Cerebras is a signal. The $8 billion

China hole is a signal. And the

hyperscalers building out their own

custom silicon across the board is

another signal. I'm going to do a full

Nvidia earnings analysis after that

earnings call on Feb 25. That's going to

pop up on the channel one to two hours, as

fast as I can edit it and post it after

those earnings. And we're going to have

the best analysis on this channel. I

know what's going on at the technical

level. I've worked in software. I've

worked in hardware. I've been an

engineering director. And I know what's

going on at the financial level as well.

I'm going to be able to call them out if

there's any BS. I'm going to be able to

explain to you what a lot of those

technical decisions and guidance mean

and I'm also going to inform you on the

financial ramifications of those things.

If you want the analysis before anyone

else, join us on the newsletter. Link is

down there in the description. Thank you

for watching.

Interactive Summary

OpenAI has launched GPT-5.3 Codex Spark, a real-time coding model that runs on the Cerebras Wafer Scale Engine 3 (WSE-3) instead of Nvidia hardware. This is significant for Nvidia's valuation, especially given its upcoming earnings. The model generates about 1,000 tokens per second, roughly 10 times faster than other frontier models, and the WSE-3 offers significantly higher memory bandwidth than Nvidia's H100. This move by OpenAI signals a broader industry trend where hyperscalers are diversifying their compute infrastructure beyond GPUs, particularly for inference workloads, which are growing faster than training workloads. Nvidia's dominance in AI accelerators, particularly with CUDA for training, is being challenged as the market shifts towards inference, where alternative chips like the WSE-3 excel in speed and energy efficiency. Additionally, Nvidia faces an $8 billion "China problem" due to export bans on its H20 chips, leading to inventory issues and lost sales. The speaker advises watching four key signals during Nvidia's February 25th earnings call: headline numbers, guidance, their response on inference diversification, and the data center revenue breakdown.
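
The hardware comparisons in the video reduce to a few ratios, sketched below using only the figures as quoted in the transcript. The 2,000-token response length is a hypothetical example, not a number from the video, and the names are illustrative. Note that the two bandwidth figures measure different memory tiers (on-chip SRAM versus off-chip HBM), so the ratio is indicative rather than like-for-like.

```python
# Figures as quoted in the video.
WSE3_BANDWIDTH_TB_S = 21_000       # 21 PB/s expressed in TB/s (1 PB = 1,000 TB)
H100_BANDWIDTH_TB_S = 3            # "about 3 terabytes per second"
WSE3_AREA_MM2 = 46_000
H100_AREA_MM2 = 814
SPARK_TOKENS_PER_S = 1_000
FRONTIER_TOKENS_PER_S = (30, 100)  # typical range quoted for other frontier models

RESPONSE_TOKENS = 2_000            # HYPOTHETICAL response length, for illustration only


def ratio(a, b):
    """How many times larger a is than b."""
    return a / b


def seconds_to_generate(tokens, tokens_per_second):
    """Time to stream a response at a constant generation rate."""
    return tokens / tokens_per_second


bandwidth_ratio = ratio(WSE3_BANDWIDTH_TB_S, H100_BANDWIDTH_TB_S)
area_ratio = ratio(WSE3_AREA_MM2, H100_AREA_MM2)
speedup_vs_fastest = ratio(SPARK_TOKENS_PER_S, FRONTIER_TOKENS_PER_S[1])
speedup_vs_slowest = ratio(SPARK_TOKENS_PER_S, FRONTIER_TOKENS_PER_S[0])

print(f"Memory bandwidth ratio: {bandwidth_ratio:,.0f}x")
print(f"Die area ratio: {area_ratio:.1f}x")
print(f"Token speedup: {speedup_vs_fastest:.0f}x to {speedup_vs_slowest:.1f}x")
for rate in (SPARK_TOKENS_PER_S, *reversed(FRONTIER_TOKENS_PER_S)):
    wait = seconds_to_generate(RESPONSE_TOKENS, rate)
    print(f"{RESPONSE_TOKENS} tokens at {rate} tok/s: {wait:.1f} s")
```

The "10 times as fast" line in the video corresponds to the comparison against the top of the quoted 30 to 100 tokens-per-second range.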
