Vibe Coding With GLM 5

Transcript

In this video,

I'm going to be vibe coding with the newly released GLM5,

which is a model that just got released from ZAI just a few

hours ago.

This is the new flagship open source model that we've been

waiting for.

And in this video,

I'm going to share everything that you need to know about

this new model.

We're going to dive deep into benchmarks.

We're going to give it a full stack test and we're going to

put it through the new bridge bench to see how it scores on

the bridge bench relative to other frontier models.

But with that being said,

I have a like goal of 200 likes on this video.

And if you have not already liked,

subscribed or joined the discord, make sure you do so.

And with that being said,

let's dive straight into the video.

All right.

So the first thing that I want to dive into is the context

of this model.

So this is the staple from ZAI, but it's again, 202,000

in context,

which is consistent with other models that we've seen,

other GLM models that we've seen.

But the really interesting factor here is the cost.

You know,

this is one of the biggest things from GLM models is the

cost.

You know, these are very affordable models.

$1 per million on the input and $3.20 per million

on the output.

That's like very, very affordable.

When I was using this on stream for day 132 of vibe coding

an app until I make a million dollars,

I was able to work with it for about an hour and I only

spent about like $6.

So in terms of cost,

this is an incredibly affordable model.

Now here's what ZAI actually says about the model.

I want to highlight a couple of them and read the whole

thing.

But one thing that they do say is that it delivers

production grade performance on large scale programming

tasks, rivaling leading closed source models.

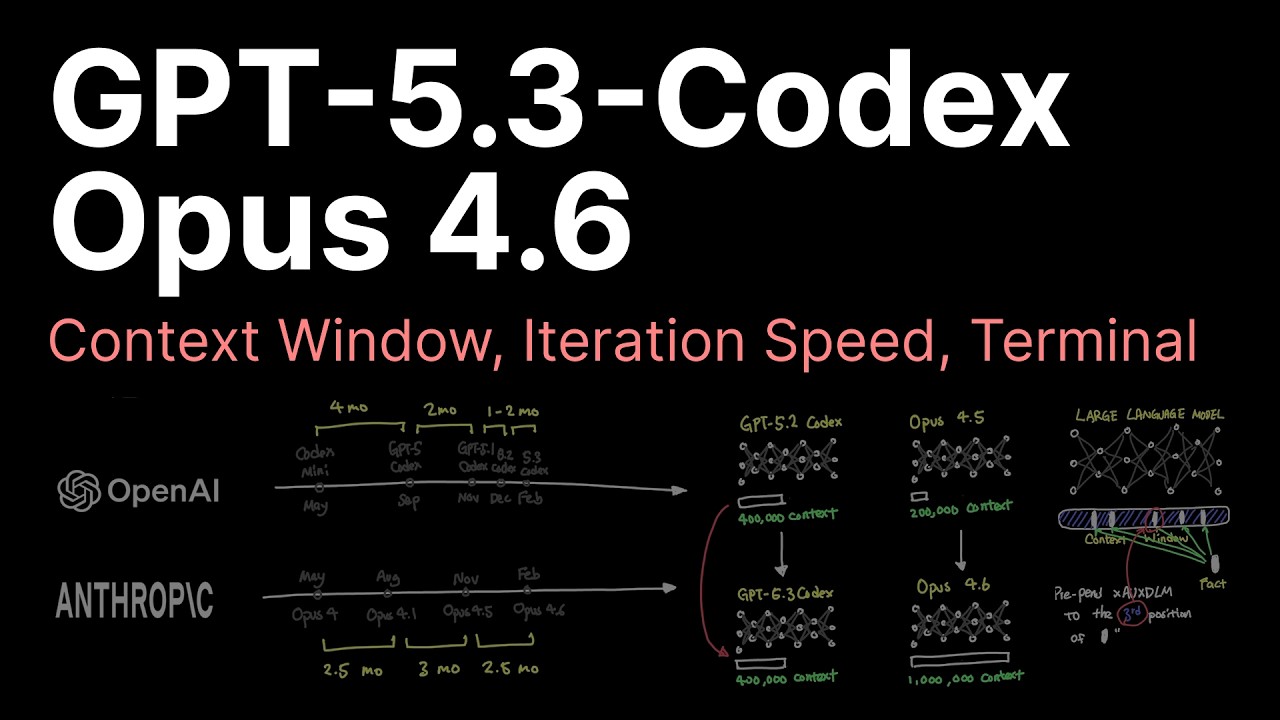

So they are talking about Opus 4.6 and GPT 5.3 when they

say that.

So the one thing that I do want to highlight though,

is their throughput, the tokens per second,

you can see that it's actually running quite slow right

now.

I did see it earlier today running at about 36 tokens per

second when I was on stream,

but that's since been slowed down a little bit.

They're probably experiencing a ton of demand since it's

launch day.

I'll be interested to see if that speeds up or slows down.

This again is only based off of the data from the last 30

minutes.

So you guys can see that they're only based off the data

from the last 30 minutes.

So it's a little bit slow,

but I did see it at 36 tokens per second.

If we compare this with Opus 4.6,

what you're going to see is that best runs at about 33

tokens per second.

So right now for the past 30 minutes,

at least Opus 4.6 has been running faster than GLM 5.

But in terms of cost, that is the biggest thing. Opus 4.6 is

$5 per million and $25 per million respectively, so

GLM 5 is just in a league of its own in terms of

cost.
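
A rough sketch of how the quoted per-million-token prices turn into a bill. The prices are the ones read out above; the workload (2M input tokens, 500k output tokens) and the `job_cost` helper are hypothetical and only there for illustration.

```python
# Prices quoted in the video, in USD per one million tokens.
PRICES = {
    "GLM 5": {"input": 1.00, "output": 3.20},
    "Opus 4.6": {"input": 5.00, "output": 25.00},
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one job at the quoted per-million-token rates."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# Hypothetical workload, not a figure from the video.
INPUT_TOKENS = 2_000_000
OUTPUT_TOKENS = 500_000

glm_cost = job_cost("GLM 5", INPUT_TOKENS, OUTPUT_TOKENS)
opus_cost = job_cost("Opus 4.6", INPUT_TOKENS, OUTPUT_TOKENS)
print(f"GLM 5: ${glm_cost:.2f}, Opus 4.6: ${opus_cost:.2f}")
print(f"Opus 4.6 costs {opus_cost / glm_cost:.2f}x as much for this workload")
```

The ratio depends on the input/output mix, since the output price gap (25 vs 3.20) is wider than the input price gap (5 vs 1).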

And that's what we continue to see out of some of these

frontier models.

But in terms of cost and speed,

I will say right off the bat,

incredibly affordable model speed kind of, I mean,

on par with Claude,

we'll see how it fluctuates over the coming weeks as they

come online and get a little bit more stable,

but kind of mid on the speed, but in terms of cost,

this is an incredibly affordable model.

But with that being said,

let's take a look at some of the benchmarks.

Okay.

So one quick thing to note is that GLM 5 has not yet been

added to the leaderboards in LMArena,

but in Artificial Analysis,

which is a great benchmark to take a look at,

we do have access now to GLM 5. It's been benchmarked on

artificialanalysis.ai.

And that's what we're going to take a look at here.

So again, we just covered speed,

but you can see it's kind of reflective of what we already

saw over on OpenRouter. You can see 47 tokens per second,

whereas Opus 4.6 is at 66 and Claude 4.5 Sonnet is at 78.

And then you have some of the Gemini models,

which are just very, very fast.

You also have GPT 5.2 extra high,

which is ranking at 95 now.

So speed, it lags a little bit behind the frontier models.

But the biggest thing that I want to draw you guys' attention to,

very,

very impressive here is the artificial analysis intelligence

index.

So I'm going to zoom in a little bit so you guys can see

this better, but check this out.

GLM 5 literally matches Claude Opus 4.5 on the intelligence

index.

It scores a 50. Claude Opus 4.5 also scored a 50. Claude Opus

4.6 scored a 53. GPT 5.2 scored 51,

but GLM 5 actually beats out Gemini 3 Pro preview,

which is just absolutely incredible.

You have an open source model with the pricing that it has,

and it beats out some of the frontier labs.

Very, very impressive.

Now, one thing I do want to draw your attention to though,

is the coding index.

So this is something that we saw.

If we take a look over,

I'm going to go over to my X real quick because you're

going to see a little bit more information here.

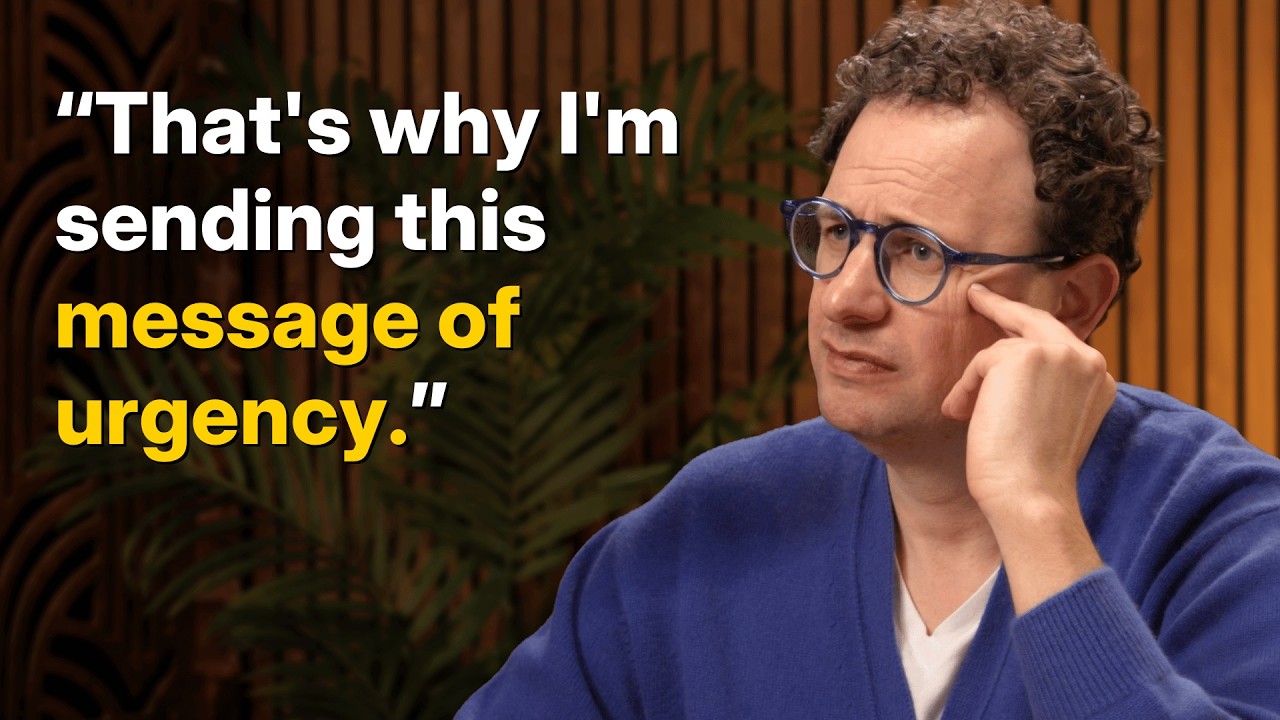

So here are the benchmarks that ZAI shared.

So these are benchmarks.

You guys can pause the video to take a look at this as

well,

but this is going to be reflective of what I'm about to

show you too.

So if you look at SWE-bench Verified right here,

you can see that it only scores a 77.8,

which is actually like, I mean, it's obviously good.

It beats out Gemini 3 pro,

but in terms of comparing that to some of the frontier

coding models like Opus 4.6, or in this case,

it's Opus 4.5, but you know,

still like three points behind that,

which actually is like a pretty large difference.

But if you look at the artificial analysis coding index,

the same thing gets reflected here.

You can see that it actually scored only a 44 on the

artificial analysis coding index,

which is still high up there, but still, you know,

a decent amount below some of the frontier models.

So if you are on the frontier, if you're using, you know,

Opus, you're using Claude models, you're using Claude code,

you're using codex, you know,

this is still not going to be up to par in terms of coding

as to what you're used to, right?

If you're using Claude or if you're using GPT,

but the thing is, is that when you do look at the pricing,

that's when things get a little bit interesting, right?

It's like, Hey,

are you going to spend $10 on a task to have Claude Opus

4.6 do it?

That may do it a little bit better.

Or are you going to spend, you know,

20 cents to have GLM 5 do it, right?

It's like kind of an interesting trade off here.

Also,

one thing that you guys should note is it performed very,

very well on the artificial analysis agentic index.

So it scored a 63, beating out GPT 5.2, beating out Claude

Opus 4.5, beating out Kimi K2.5, and beating out all the

Gemini models.

I mean, that is incredible for an open source model.

The next thing that I want to show you guys is very,

very impressive.

And this is something that we will definitely be learning

more about.

Let me just find it's down here.

Okay.

Check this out guys.

If we go to this one here,

this is a very important benchmark.

And what this is,

is this is basically the hallucination rate index.

So the lower score is better.

And what I want to highlight to you guys in this benchmark

is that GLM 5 has scored the lowest hallucination rate out

of any other model.

It scored a 34%, whereas Claude 4.5 Sonnet, which is next,

in second, scored 47.

You can see that even some of the Gemini models, I mean,

they hallucinate like crazy,

but they're at 90%, 88%, whereas Claude Opus 4.6 is at 75%. But GLM

5, 34.49%.

So in terms of hallucination,

I am incredibly impressed by what they were able to achieve

here.

This is the best score that's ever been produced on this

index.

Very, very impressed for this benchmark.

But with that being said,

I think that this now is going to sum up.

If we just take a look at the price,

what you can see here as well is like for this here,

lower is better on this price 1.6.

Whereas Claude Opus 4.6 scored a 10.

GPT 5.2 scored a 4.8.

So this gives you the look at like if you're using GLM 5,

you know, 1.6 versus a 10.

I mean, that is just insane, right?

I mean,

you're talking about a model that is just incredibly

affordable and incredibly capable.

So if you are a budget vibe coder,

this is going to be a model that you are definitely going

to want to take a look at.

It performs very well on the benchmarks and it's very

affordable.

Okay,

so the next thing that we're going to do is we're actually

going to be vibe coding using GLM 5 here inside of

OpenCode and we're going to give it some real world vibe coding

tasks in production for BridgeMind.

So one thing I do want to highlight,

you guys may have noticed it's currently 8:30 a.m.

The last clip that you guys just watched was yesterday and

I want to tell you guys why.

So I was putting GLM 5 through the bridge bench and here

are the results.

These are the preliminary results.

They're not yet available on bridgemind.ai.

I'm going to push this here in a minute,

but GLM 5 after I used it yesterday and actually put it

through the bridge bench,

it made it so that I couldn't even put out this video that

you're watching right now.

Yesterday,

it was my initial plan to put this video out that you're

watching yesterday, but it's now, you know, 16, 14,

16 hours into the future.

It's 8:30 a.m.

February 12th and you can now see that we do have the

results from GLM 5, but look at this, guys.

So here are the initial results from the bridge bench.

So overall, you can see the score of Opus 4.6.

It scored a 60.1. GPT 5.2 Codex,

it got a 58.3, and then GLM 5, a 41.5.

And I want to highlight the average response time for each

of these tasks.

So what the bridge bench is,

is it basically puts it through six different categories,

UI, code generation, refactoring, security, algorithms,

and debugging.

And it launches all the tasks concurrently and runs the

model on all these tasks.

Right.
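
A minimal sketch of the "launch all tasks concurrently" pattern described here, using Python's `asyncio`. This is not the actual bridge bench harness: `run_task`, its fake latency, and the made-up failure pattern are hypothetical stand-ins for a real model call.

```python
import asyncio
import time


async def run_task(task_id: int) -> tuple[bool, float]:
    """Hypothetical stand-in for one model call; returns (completed, seconds)."""
    start = time.perf_counter()
    await asyncio.sleep(0.01)     # placeholder for the real network request
    completed = task_id % 4 != 0  # made-up failure pattern for the demo
    return completed, time.perf_counter() - start


async def run_benchmark(num_tasks: int) -> dict:
    # Start every task at once and wait for all of them to finish.
    results = await asyncio.gather(*(run_task(i) for i in range(num_tasks)))
    completed = sum(1 for ok, _ in results if ok)
    avg_seconds = sum(seconds for _, seconds in results) / len(results)
    return {"completed": completed, "total": num_tasks, "avg_seconds": avg_seconds}


summary = asyncio.run(run_benchmark(12))
print(summary)
```

With concurrent launches, the wall-clock time of the whole run is driven by the slowest tasks rather than by the sum of all response times.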

And what I want to highlight is the average response time

for each of these models.

So Opus 4.6, 8.3 seconds, GPT 5.2 Codex, 19.9 seconds,

and then GLM 5, 156.7 seconds was the average response time

for this model.

So another thing is the completion rate.

Look at this.

It only completed 75 out of 130 of its tasks.
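
For reference, the completion figure quoted above works out as a percentage like this (only the 75 and 130 come from the video):

```python
completed, total = 75, 130  # figures quoted in the video
completion_rate = completed / total
print(f"Completion rate: {completion_rate:.1%}")
```

That is a little under 58% of tasks completed.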

So even though this thing is a benchmark beast, you know,

we were just looking at the Artificial Analysis benchmarks,

inside of bridge bench and real world tasks, when you're

actually using the model with OpenRouter or with

OpenCode,

this is what we're seeing: very, very slow,

very bad completion rate.

So even though this thing is benchmark maxing, hey,

in actual practice, it's not super reliable.

It literally caused me to have to delay this video.

So I just want to show you guys, this is the bridge bench.

I'm going to post this on the website,

but in order to do that,

we're actually going to be vibe coding with GLM 5 in this

video.

And we're going to be basically using GLM 5 to help us with

the bridge bench to get it deployed.

So if we look here, here's the public leaderboard.

Here's how you can find it.

You can go to

bridgemind.ai and you can go to community and you go to bridge

bench.

Okay.

This is placeholder data.

We're running this locally.

But what I want to do is I want to make it so that first of

all, this table is going to be like have much better UI,

right?

So let's go over to GLM 5 here.

Let's go here.

And I'm going to use Bridge Voice.

This is the voice to text tool that I use.

So let's go here.

BridgeMind UI localhost bridge bench.

I want you to review the BridgeMind UI and the bridge

bench page,

specifically the table that is in the bridge bench page.

And right now this table has horizontal scrolling.

It's terribly styled.

I want you to completely reinvent the styling of the page

to make it better, make it more modern,

make it professional and compact the table and make it so

that there's no scrolling and so that it's just better

overall styling.

Okay.

So let's drop that in.

We'll see what it comes up with in the styling.

And then now let's go over to bridge bench and I want to

show you guys another thing that I did last night because I

did actually have a lot of time to work with the model

since the last time that you guys saw this.

And what I did is we're also creating something called a

creative HTML benchmark.

And you can see here that I have ZAI right here, GLM 5.

And what this is, is you can see if I go here,

we have these MD files, right?

And these MD files are prompts that we're going to start

benchmarking each of these models with, right?

So we give basically GLM 5 this prompt here,

it's able to generate a singular HTML file so that we can

compare how these models actually are with styling in a

very simple task, right?

So this is create a single HTML file with a full screen,

animated lava lamp, use only HTML, CSS and JavaScript.

So it's pretty good in terms of like just being able to do

that, right?

It's like, okay, like do this real quick.

But let's go over here.

And what I want to do is I'm going to drop in the bridge

bench, and then I'm going to drop in the bridge mind UI.

And what I want to do is I'm also going to drop in,

let's grab this here,

it's going to be that same open code is decent sometimes,

but other times no.

So let's paste this in.

I want you to add another section to this page that will

basically like there should be tabs at the top that allows

people to choose between which benchmark or which

leaderboard they want to see.

So there's the bridge bench,

which is the scores that you see and the current existing

table that you see.

That is the most important.

That should be by default what people land on.

That is the official bridge bench.

But if you look at bridge bench,

you will notice this creative HTML styling and all of the

prompts that are included with this.

This is going to be an additional layer of bridge bench.

And for this,

I want you to update this page so that it has this tab

system and so that it has this new tab.

And in this new tab,

I want you to display all of the system prompts that we're

putting to the test.

So there's gonna be a section for this.

So let's go here and let's actually just paste this in.

Okay, it's pasted.

So let's do that and then I'm gonna give it,

so you need to review bridge bench and review the system

prompts for the HTML and just make sure that you update the

page that has this tab system and then it also includes the

system prompts and allow people to copy the system prompts

as well.

So we'll just start there.

Now the one thing that you do wanna look out for is these

are working on the same pages, right?

So they could overlap,

but we're just gonna send it anyway and see if it can do

it.

But one thing that's very important in that you guys are

even seeing like right now, this task that I just gave it,

right?

Like improve the styling, this is a super easy task.

And even though at the start of the video,

and I already see a lot of people hyping this model up

because it is a benchmark beast.

It performs very well in the benchmarks like artificial

analysis.

You know, it tops things out,

performs well in not hallucinating,

but the issue here is that, hey, in practice,

what I'm seeing about this model, and hey, it is day two,

we can't be too hard on it, right?

But the problem is that it's like super,

super slow and it's not very reliable.

And that'll probably change over time.

Obviously it's a day two of them launching this,

but it's like, hey, if Opus 4.6 launches,

it's gonna be reliable right out of the gate.

These frontier models like, hey, we get GPT 5.3 Codex,

it's usable, it's workable.

It's usable in practice.

Whereas with GLM 5, I'm like, honestly,

it's just not doing that great of a job for me.

So that's what I've noticed so far in terms of like how it

actually works in practice.

And it's kind of disrupted this video, to be honest,

because it's so slow and it's so unreliable that I'm like

having a hard time even making this video.

I mean, yesterday I was like,

I was already making the video that you're watching right

now.

And I was like, okay,

now we're gonna put it through the bridge bench and look

at, I mean, look at this.

It was taking almost three minutes to do every single task.

So, you know,

you'd compound that over 130 tasks and you guys can do the

math real quick.

Like I was literally waiting for this benchmark to complete

for hours and yeah, it's ridiculous.
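
The math mentioned here can be sketched as follows. The average response times and the 130-task count are the ones quoted earlier; running the tasks strictly one after another is an assumption, so the result is an upper bound (the benchmark was described as launching tasks concurrently).

```python
NUM_TASKS = 130

# Average response time per task in seconds, as quoted in the video.
AVG_SECONDS = {"Opus 4.6": 8.3, "GPT 5.2 Codex": 19.9, "GLM 5": 156.7}


def sequential_hours(avg_seconds: float, num_tasks: int = NUM_TASKS) -> float:
    """Total hours if every task ran back to back (assumed, not confirmed)."""
    return avg_seconds * num_tasks / 3600


for model, avg in AVG_SECONDS.items():
    print(f"{model}: {sequential_hours(avg):.2f} hours")
```

Under that assumption GLM 5 lands between five and six hours, while the other two models finish in under an hour.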

It did not do a good job.

So, you know, it's a little bit disappointing to see that,

right?

But I mean, we'll keep giving it a shot.

It's just slow.

It's just very slow.

So, that's a little bit frustrating.

Whereas, you know, hey, like, let's like go here, right?

I wanna show you guys this.

So, this here,

this is a cursor instance that has been actually going

through and it looks like it's done now, which is,

thank goodness.

But look at this.

This is a perfect example of this.

So, I basically go back over here, right?

And the prompt initially was test this model in this,

right?

And it was,

I was testing it in this creative HTML benchmark, right?

And you can see if we go here,

it's basically putting GLM5 to the test and we're using

Opus 4.6 to be able to guide GLM5 in this test, right?

And it's saying, good, two down, prompt two was 22,000

characters in 150 seconds.

Let me keep waiting.

This model is taking over two minutes per prompt.

So, it slept for 180 seconds, then 300 seconds,

then 600 seconds, then 600 seconds,

and then another 600 seconds.

You can now see that all, you know,

10 HTML files are complete.

But hey,

it literally just took me 30 minutes to get 10 HTML files.
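
A small sketch of the polling loop implied by that sleep schedule. The delays are the ones read out above; `wait_until_done` and the fake sleep are hypothetical, and the demo assumes the job only finishes after the last sleep, so the total is an upper bound on the real wait.

```python
from typing import Callable, Iterable


def wait_until_done(
    is_done: Callable[[], bool],
    delays: Iterable[float],
    sleep: Callable[[float], None],
) -> float:
    """Sleep through `delays` until `is_done()` is true; return seconds waited."""
    waited = 0.0
    for delay in delays:
        if is_done():
            break
        sleep(delay)
        waited += delay
    return waited


# Sleep schedule read out in the video, in seconds.
DELAYS = [180, 300, 600, 600, 600]

# Fake sleep that only records the delay, so the demo does not block.
elapsed: list = []
total = wait_until_done(lambda: sum(elapsed) >= sum(DELAYS), DELAYS, elapsed.append)
print(f"Waited up to {total / 60:.0f} minutes")
```

In real use `sleep` would be `time.sleep` and `is_done` would check whether all ten HTML files exist.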

So, we can now, you know, stop this task because it's done.

But I want to show you guys something just in practice to

just like compare models,

because I know that there's going to be a lot of goofy

content creators that are hyping this up, which yeah,

this thing's a benchmark beast.

It's very affordable.

But hey, in practice, if you're a serious vibe coder,

this thing is just not very reliable because watch this.

So, I just put this one through, right?

And what I want to do is I'm going to say, hey,

here's the prompts, here's our output.

Review this.

We now have our 10 completed HTML files from GLM5.

I now want you to test it and put it through the benchmarks

with this model.

And now I'm going to go over to open router here and I'm

going to drop in the Opus,

I'm going to drop in Opus 4.6 and you guys are going to

see,

I literally guarantee you this model is going to be insanely

fast.

So watch this.

Anthropic 4.6, okay?

Watch how fast this goes.

I bet this will complete in under 60 seconds.

I bet it'll complete in under 60 seconds.

That's going to be my guess.

So it's sleeping for 60 seconds.

So it's going to check on it periodically,

but it's going to,

I bet it'll complete in under 60 seconds.

So we'll come back to that.

But my bet is that that's going to complete in under 60

seconds.

And now if we go back here,

what you guys are seeing is look at this.

Error, file not found.

Users, desktop, bridge mind, bridge mind UI styling guide.

This thing is still looking at the styling guide and is

like thinking about its approaches.

This one's finally actually writing code.

But hey, if you're a serious vibe coder,

you are not going to be using GLM5,

at least in this current state that it is.

And I'll even show you guys like, hey,

one of the reasons that we're seeing this,

if we pull up OpenRouter here, look at the uptime.

Look at the uptime.

Look at how bad it is.

Uptime, 62%, 94%, ZAI, 97%.

It's better from ZAI, but the uptime, uptime not available,

77%, 73.69%.

Whereas if you look at Opus 4.6,

it's going to be like a hundred percent across the board.

Yeah, 99.6, 99, 99.99.

Like you're just not going to have those issues with some

frontier models.

Like these Chinese laboratories, like whatever, they can't,

I said laboratories, they can't get their,

they can't get their act together.

You know, they just launched.

So we'll give them some,

I think looking at GLM 4.7 would probably give us a little

bit of a better reference, right?

Let's look at GLM 4.7.

So uptime, 94%, 91%, 99.6.

That's better, better.

What about from ZAI directly?

ZAI, are they not even offering this anymore in OpenRouter?

Oh, six more, okay.

ZAI at the bottom here, ZAI, 97%.

So it's like a 97%, like that's not 99.9, right?

Like that you're seeing from Claude.

So it is a thing that sometimes like they struggle with the

uptime with these models.

I'm not sure why, but I mean, yeah,

this is what we're seeing now.

It's like, yeah, this is a, this model is slow.

So I may have to like pause this video so that it can

actually go through.

Now it looks like I was incorrect.

It looks like this is a deep thinker.

Let me give it more time.

So it's done with the lava lamp.

It's done with the lava lamp.

And I do,

I'm gonna give this some time because what I want to do is

I want to make it so that these actually finish so that you

guys can see what at least it created.

But if we were waiting here, I kid you not,

this is probably gonna take 10 minutes to even get through

two prompts.

So I'm gonna cut to the point where these actually finish.

Right now it's 8:41.

We'll see how fast these are,

but definitely slow and unreliable right now.

I think we'll give it a little bit of a break,

but I'll come back to you guys once these are actually

complete and we'll review what it comes up with.

All right, guys, so it's been about another 10 minutes.

It's now 8:50 and these are still working and they weren't

able to make some changes.

Here's what we're looking at.

It basically did nothing.

And I don't know.

I don't think that this is a really great model in

practice.

So even though these are working,

I do want to kind of like just can't,

I want to interrupt these because they're just,

we'll use Opus 4.6 for that.

It'll not be that difficult with Opus 4.6.

So in practice,

not seeing GLM5 produce the results that I need as a vibe

coder in practice, not reliable and not fast enough,

at least that's my perspective on it right now.

Now for back to this here.

So I will say I was wrong.

Opus is taking longer than 60 seconds for this HTML

benchmark.

But since this is done,

I think this will be a good opportunity for you guys to see

the differences between the HTML files and the creativity

produced by Opus 4.6 versus GLM5.

So you can see that in the past.

What has it been?

I guess it's been 10 minutes about.

But Opus 4.6 was able to create six of these so far and it

even created a nice table for us.

So you can see that GLM5 was able to do this in 103

seconds, Opus 4.6 95, 150 versus 117, 146 versus 106,

128 versus 116.

So you can see that the time Opus 4.6 is much faster than

GLM5.
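
To put a number on that comparison, here is a quick calculation over the four timing pairs read out above. Which prompt each pair belongs to is not stated, so the pairs are treated as anonymous samples.

```python
# (GLM5 seconds, Opus 4.6 seconds) for the four prompts quoted in the video.
timings = [(103, 95), (150, 117), (146, 106), (128, 116)]

glm_avg = sum(glm for glm, _ in timings) / len(timings)
opus_avg = sum(opus for _, opus in timings) / len(timings)
ratio = glm_avg / opus_avg

print(f"GLM5 average: {glm_avg:.2f} s")
print(f"Opus 4.6 average: {opus_avg:.2f} s")
print(f"GLM5 took {ratio:.2f}x as long on average")
```

On these four samples Opus 4.6 is faster on every prompt, by roughly a fifth on average.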

Now the big question here is let's actually now compare

some of these HTML files, right?

So let's drop this one in and then let's drop this one in.

So let's do the lava lamp.

Let's check out coffee being poured.

I want you to start these so that I can give you the URL so

that I can actually see these HTML files for review.

I'll put them in a list.

Okay,

so we're gonna output this in a list and then what we're

gonna do is we're gonna actually take a look at these so

that we at least have something to go off of for GLM5 of

what it's actually able to produce.

So what we're seeing is like, okay,

benchmark beast in practice though, just not great.

So, okay, let's check out this lava lamp.

So here is the, we'll do side by sides.

Let's take a look at the Opus 4.6 lava lamp.

Okay, wait, hold up here.

I put in the wrong thing.

Okay, there we go.

So here is the lava lamp produced by Opus 4.6, okay?

Here's the lava lamp, pretty cool.

And then let's now take a look at the lava lamp produced by

GLM5.

So let's just go over here, go over here.

Oh, let's cancel out of this and let's go here.

and we'll drop this in and there we go.

All right, so lava lamp.

Oh my gosh.

All right lava lamp.

Sorry guys.

All right, there we go.

This good.

Oh my gosh, this is killing me.

Okay, hold up here.

Lava lamp.

There we go.

Okay, so let's drop this in.

There we go.

Okay, so I think this is actually a good example.

Okay,

so here's the difference in the lava lamp between GLM5 and

Opus 4.6.

You guys can be the judge here of what you think is

better.

I think another one is this coffee being poured.

Let's look at that one.

And again, like hey,

I did have like literally 30 minutes for GLM5 to come back

with all these.

So we're now finally being able to see it.

Here is the coffee being poured by Opus 4.6.

And here is the coffee being poured by GLM5.

Let's take a look at these.

Yeah, so I don't know guys.

I mean, you guys can be the judges of this, right?

But even like right off the get go, in my opinion,

I think that I mean, even look at like, look at the flow.

Let's refresh this, right?

I mean, I don't know.

You guys can be the judge of it.

Let me know what you guys think in the comment section down

below.

Let's also see this aquarium fish tank.

I want to see this one.

Let's see this aquarium fish tank.

Same thing.

Only give me the URL to go to.

Okay, same thing.

Only give me the URL to go to.

So what do you guys think so far?

We'll take a look at the aquarium,

but here's the lava lamp.

Here is the coffee being poured.

Let's now take a look at this aquarium fish tank.

This will be available.

I'm literally going to have to use,

I'm going to have to use Opus 4.6 to be able to get this

available.

Here's the aquarium fish tank from Opus 4.6.

And then here's the aquarium fish tank from GLM5.

Similar here.

You guys can be the judges of this,

but even like stuff like this,

like look at the flow of these down here.

Do you guys see how these are flowing?

And just how these are like, kind of like still, right?

Where, okay, this is, these are moving like really well.

I mean, you guys can be the judges of it.

I won't, I won't, you know, give too much here,

but here is now the solar system.

Same thing.

Okay.

Solar system.

And then we'll also do the thunderstorm over the city.

Okay.

Solar system.

Here is the solar system from, okay, great.

All right.

Same thing.

Okay.

All right.

Solar system.

Oh wow.

Okay.

So solar system from Opus.

Solar system from GLM5.

I mean, uh, there was more here,

a vision of celestial mechanics as like mechanics.

I don't know why I did the mechanics in the sun, but, uh,

you guys can be the judges of these.

So you have solar system, tropical fish tank,

morning coffee,

and lava lamp.

In my opinion, I think that Opus 4.6 is better.

And if you do go back to the benchmarks,

that is what you see.

You know, you do have that cost trade off where, yes,

GLM5 is much more affordable.

But in practice,

no serious vibe coder is going to be using GLM5,

at least right now, with the speed that it has.

Like speed is becoming more and more important.

And even though GLM5 was able to create a better model that

is, yes, it's much, it's like, it's just cheap.

It's cheap, in my opinion.

Opus 4.6 is incredibly reliable, it's much faster.

And it's actually going to be able to, you know,

do the tooling correctly so that it can get what you need

done.

GLM5, on the other hand, at least today,

and what we're seeing so far,

is that it has not produced and been able to help me in

actual tasks, right, in actual vibe coding tasks of, hey,

I need you to update this UI, I need you to add this page,

I need you to add this tab.

Nope.

20 minutes later, I still have nothing to show for it.

So, you guys can be the judge of this.

I think that we're definitely going to be wanting to use

GLM5 over the coming week to be able to, like,

see if this gets better.

But, I mean, you guys saw it firsthand.

I was trying to make this video yesterday,

and if you go back to the bridge bench,

this is what I experienced, okay?

Massive response time, very slow, and the completion,

it wasn't able to complete my tasks for the bridge bench.

So, this is what I'm seeing.

You guys can let me know what you think in the comment

section down below.

But GLM5, I think if you're a serious vibe coder,

it's probably just going to be a little bit hard to use

right now.

So, we'll continue to stay up to date with this.

I am impressed by the trajectory of open source models.

You know,

one thing that I'll kind of share with you guys before we

finish off the video here is I'm going to show you guys a

chart that somebody from the bridge mind community actually

shared with me.

And I think that it does tell a really,

really interesting story about these open source models.

Let me find this real quick and show it to you guys,

because I think it is important to understand the

trajectory of these open source models.

Here it is.

So, look at this.

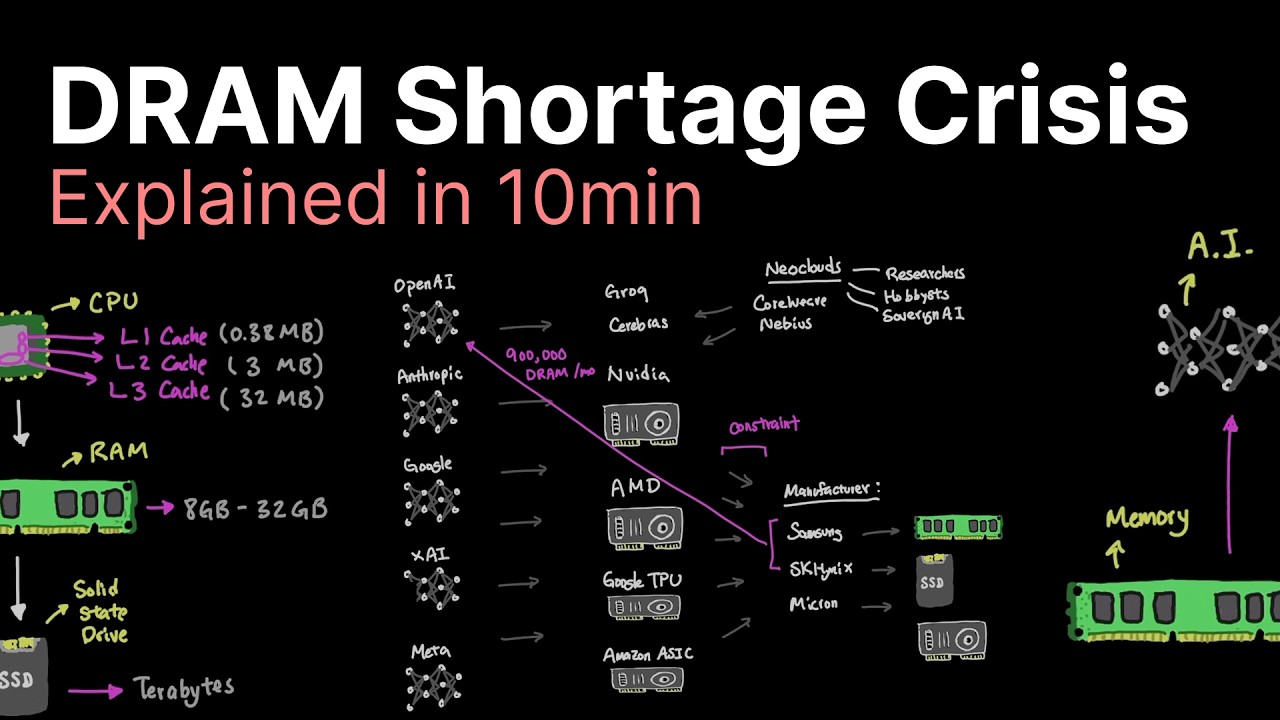

So,

this is the frontier lag analysis that people are anticipating,

and what you're looking at is the open versus closed source

model.

So,

what you can see here is that it goes all the way back to

April 2025.

Here's where some of the

open source models come in.

So, here's DeepSeek, here's ZAI,

and basically what this model is projecting is that there is

going to be an inflection point in June of this year where

people really think that the open source models are

actually going to be outperforming the closed source

models.

I don't know what you guys think about this.

It says that the projected crossover is June of 2026,

but I actually don't agree with this.

I think that this is going to change.

I think that the frontier models are actually going to get

way better.

And yes,

it's possible for you to be able to create these benchmark

beasts.

But when we actually use the model in practice,

sometimes they just are not very performant.

They don't perform as well as some of these frontier

models.

So, you can put it through a benchmark,

and you can see how it performs on SWE bench and all these

other artificial analysis benchmarks.

But the most important thing is, hey,

how does it work in practice for a real vibe coder?

And as a vibe coder, it's like I notice immediately,

I'm like, hey, slow, unreliable, unusable,

for me personally.

So, that's what I'll share.

I think that that will be better.

I think that GLM5 will become faster and more reliable as

they get more GPUs online or whatever they need to do.

But so far, not impressed in my actual use case.

And I do think that Opus 4.6 is better,

especially when you do look at some of these examples that

we have.

And in real practice,

Opus 4.6 is actually able to work with me.

Whereas GLM5, not reliable.

So,

I'm not going to certify GLM5 as a BridgeMind certified

model,

but I will be putting this model in the bridge bench.

You guys can go check it out at bridgemind.ai.

And if you guys liked this video, make sure you like,

subscribe, and join the Discord community.

And with that being said,

I will see you guys in the future.

Thank you.

Interactive Summary

The video introduces GLM5, a new flagship open-source model from ZAI, highlighting its features and performance. While GLM5 boasts a large 202,000 token context window and incredibly affordable pricing ($1 per million input, $3.20 per million output), its real-world performance raises concerns. Benchmarks show GLM5 matching Claude Opus 4.5 on the intelligence index and achieving the lowest hallucination rate among models (34.49%). However, its coding index is lower than frontier models. In practical application, specifically during the Bridge Bench test, GLM5 exhibited very slow response times (156.7 seconds average) and a low task completion rate (75/130), proving unreliable and delaying the video's production. A comparison with Opus 4.6 in creative HTML generation tasks further demonstrated GLM5's slower speed and perceived lower quality outputs. The speaker concludes that despite its benchmark strengths and affordability, GLM5 is currently too slow and unreliable for serious developers, favoring more stable frontier models like Opus 4.6 for practical 'vibe coding' tasks. The video also touches on the anticipated future trajectory of open-source models versus closed-source models.
